A Google artificial intelligence called AlphaGo beat a human grand master at the demanding game of Go – a game of profound complexity which originated in China over 2,500 years ago. The game is much tougher than chess for computers because of the number of ways a match can play out.

According to Google’s DeepMind Division, the artificial intelligence beat its human opponent, seen as a grand master, five games to nil.

One independent expert was stunned, and called it a major breakthrough for artificial intelligence (AI) “potentially with far-reaching consequences.”

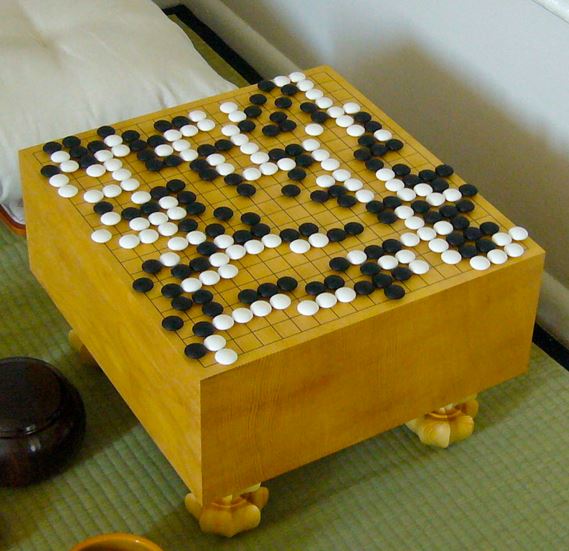

The game ‘Go’ originated in ancient China over 2,500 years ago, and is the oldest board game still played today. For an AI, it is considerably harder to play than chess because there are 10170 ways things can play out. (Image: Wikipedia)

The game ‘Go’ originated in ancient China over 2,500 years ago, and is the oldest board game still played today. For an AI, it is considerably harder to play than chess because there are 10170 ways things can play out. (Image: Wikipedia)

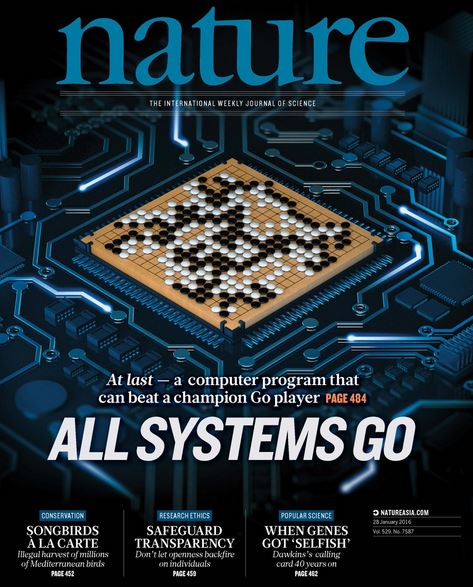

AlphaGo’s key milestone was announced to coincide with the publication detailing the techniques used in the academic journal Nature (citation below).

A game that requires intuition and feel

Confucius (551 – 479 BC), the famous a Chinese teacher, editor, politician, and philosopher, wrote about the game. It is considered one of the four essential arts required by any true scholar in China.

Played by over forty million people globally, the rules of Go are straightforward. You and your opponent take turns to place black or white stones on a board. Your aim is to try to capture your opponent’s stones or surround each empty space to make points of territory.

Go is played primarily through feel and intuition, and because of its subtlety, intellectual depth and beauty, it has captured the imagination of many for hundreds of years.

Even though the rules are straightforward, it is a game of profound complexity. There are 10170 possible positions – there aren’t that many atoms in the whole Universe!

It is this complexity that researchers thought would make it ultra-hard for a computer to play the game Go, and therefore an irresistible challenge to a sophisticated AI like AlphaGo. AI’s use games as field studies or testing grounds to invent smart, flexible algorithms that can address problems, often in ways similar to what we do.

Confucius said “Gentlemen should not waste their time on trivial games – they should play Go.” (Image: biography.com)

Confucius said “Gentlemen should not waste their time on trivial games – they should play Go.” (Image: biography.com)

From Tic-Tac-Toe to conquering Go

In 1952, the game tic-tac-toe (noughts & crosses) was mastered by a computer. The first time a machine ever conquered a game. This was followed by checkers (UK: draughts). In 1997, grand master Garry Kasparov was beaten at chess.

AI’s have excelled not only at games. In 2011, IBM’s Watson beat two champions at Jeopardy. In 2014, Google’s own algorithms learned to play several Atari games just from raw pixel inputs.

However, what this AI has achieved with the game Go is seen by researchers as a mega-huge jump forward.

There is no way traditional AI methods – which build a search tree over all the possible alternatives – would be able to play this game.

Demis Hassabis tweeted that Google’s artificial Intelligence AlphaGo made front cover of Nature. (Image: pbs.twimg.com)

Demis Hassabis tweeted that Google’s artificial Intelligence AlphaGo made front cover of Nature. (Image: pbs.twimg.com)

A new approach used to create AlphaGo

Demis Hassabis, an artificial intelligence researcher, neuroscientist, computer game designer, and world-class gamer, who works at Google DeepMind, wrote:

“So when we set out to crack Go, we took a different approach. We built a system, AlphaGo, that combines an advanced tree search with deep neural networks.”

“These neural networks take a description of the Go board as an input and process it through 12 different network layers containing millions of neuron-like connections. One neural network, the ‘policy network,’ selects the next move to play. The other neural network, the “value network,” predicts the winner of the game.”

Dr. Hassabis and colleagues trained the neural networks on thirty million moves from games that had been played by grand masters, until it could predict the human move 57% of the time – its previous record had been 44%.

However, their aim was to beat human grand masters, not just imitate them. To do this, AlphaGo learned to discover new strategies on its own, by literally playing thousands of games between its neural networks, and tweaking the connections using a trial-and-error process – this is called ‘reinforcement learning’.

To do all this, a massive amount of computing power was required, so the team made extensive use of Google Cloud Platform.

From beating programmes to a grand master

After all that intensive training, AlphaGo was put to the test. They started with a tournament between AlphaGo and the other top programmes at the forefront of computer Go. AlphaGo played 500 games and won 499 of them against these programmes.

The next step was to play against a grand master – that was when Fan Hui, the reigning three-times European Go champion who had been playing professionally since the age of 12, was asked to come in. He came to Google’s London office for a challenge match.

They played last October, and AlphaGo won by 5 games to 0. History was made – never before had a computer beaten a professional Go player.

Citation: “Mastering the game of Go with deep neural networks and tree search,” Demis Hassabis, David Silver, Aja Huang, Thore Graepel, Chris J. Maddison, Arthur Guez, Laurent Sifre, Madeleine Leach, George van den Driessche, Julian Schrittwieser, Ioannis Antonoglou, Timothy Lillicrap, Veda Panneershelvam, Marc Lanctot, Sander Dieleman, Dominik Grewe, Ilya Sutskever, John Nham, Nal Kalchbrenner, & Koray Kavukcuoglu. Nature 529, 484–489. 27 January 2016. DOI:10.1038/nature16961.