There will soon be a photo app that makes pictures more memorable by predicting, better than we can, how forgettable an image is likely to be and suggesting how to improve it, say scientists at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). They envisage several potential uses for their algorithm, such as improving advert content.

MemNet

The app itself has not yet been built. Lead author Aditya Khosla, a graduate student, and colleagues first created an algorithm, called ‘MemNet’, that they say can predict how memorable a photograph will be. They now plan to turn it into an app that subtly tweaks a picture to make it more memorable.

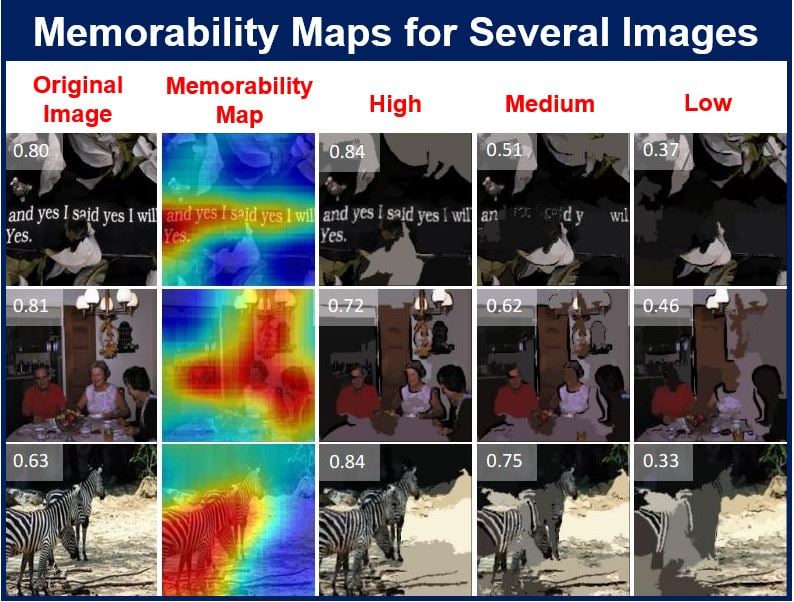

For each photo, the algorithm also creates a heat map that shows which parts of the image are the most memorable. You can upload your own photos online and see how it works.

The memorability maps are shown in the jet colour scheme, ranging from blue to red (lowest to highest). The memorability maps are independently normalized to lie from 0 to 1. The numbers in white are the memorability scores of each image. (Image: people.csail.mit.edu)
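
The caption says each memorability map is independently normalized to lie between 0 and 1. The article does not say how this is done; a minimal sketch, assuming plain min-max scaling applied to one map at a time, might look like this. The function name `normalize_map` and the sample values are hypothetical.

```python
def normalize_map(raw_map):
    """Min-max scale a 2D grid of raw scores so its values lie in [0, 1].

    Assumption: the article only says maps are "independently normalized";
    min-max scaling per map is one plausible reading, not a confirmed method.
    """
    flat = [value for row in raw_map for value in row]
    lowest, highest = min(flat), max(flat)
    if highest == lowest:
        # A constant map has no contrast; return all zeros by convention.
        return [[0.0 for _ in row] for row in raw_map]
    span = highest - lowest
    return [[(value - lowest) / span for value in row] for row in raw_map]


# Hypothetical raw scores for a tiny 2x2 map.
raw = [[2.0, 4.0], [6.0, 10.0]]
normalized = normalize_map(raw)
```

Because each map is scaled by its own minimum and maximum under this assumption, the colours show which regions matter most within one image and are not comparable between images. The white number on each image is what allows comparison across images.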

Mr. Khosla said:

“Understanding memorability can help us make systems to capture the most important information, or, conversely, to store information that humans will most likely forget. It’s like having an instant focus group that tells you how likely it is that someone will remember a visual message.”

Many uses for algorithm possible

The researchers say they can envisage a range of potential applications for the algorithm, from improving ad content and social media posts, to developing more effective teaching materials, to creating one’s own personal ‘health assistant’ device that helps people remember things.

As part of the project, the scientists also published the largest image-memorability dataset in the world – ‘LaMem’. Its database has 60,000 images, each annotated with detailed metadata regarding qualities such as emotional impact and popularity.

LaMem to trigger further studies

The team says LaMem is their effort to spur further studies on what they say has been an under-studied area of computer vision.

The paper (citation below) was co-written by Akhil Raju, also a graduate student at CSAIL; Prof. Antonio Torralba, who works at CSAIL; and Aude Oliva, a principal research scientist and Associate Professor of Cognitive Neuroscience at MIT, who is the project’s senior investigator.

Mr. Khosla presented the paper at the International Conference on Computer Vision, at the Convention Center in Santiago, Chile.

Aditya Khosla is a fifth-year computer science Ph.D. student at MIT. He works at CSAIL with the Computer Vision Group, where he is advised by Professor Antonio Torralba and frequently collaborates with Professor Aude Oliva.

How does the algorithm work?

The team had previously created a similar algorithm for facial memorability. This new one is different in that it uses techniques from ‘deep learning’, an AI (artificial intelligence) field that uses systems called ‘neural networks’ to teach computers to sift through huge amounts of data and find patterns without any human help.

Such techniques are used by Apple’s Siri, an inbuilt ‘intelligent assistant’ that lets users operate mobile devices and their apps with natural-language voice commands, as well as by Google’s auto-complete and Facebook’s photo-tagging.

Facebook, Google and Apple have spent hundreds of millions of dollars backing deep-learning startups.

Predicting human memory

Prof. Oliva said:

“While deep-learning has propelled much progress in object recognition and scene understanding, predicting human memory has often been viewed as a higher-level cognitive process that computer scientists will never be able to tackle. Well, we can, and we did!”

Neural networks learn to correlate information with no human guidance on what the underlying correlations or causes might be. They are organized in layers of processing units, with each layer performing computations on the output of the one before it. As the network is shown more data, it readjusts to produce more accurate predictions.
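
The layered structure described above can be illustrated with a toy forward pass. This is only a sketch under stated assumptions: the layer sizes, weights and the ReLU activation are made up for illustration and are not taken from MemNet, which is far larger. The helper names `dense` and `forward` are hypothetical.

```python
def dense(weights, biases):
    """Build one layer: weighted sums of the inputs, then a ReLU.

    Assumption: the weights here are hand-picked illustrative values.
    In a real network they are learned from data during training.
    """
    def layer(inputs):
        outputs = []
        for weight_row, bias in zip(weights, biases):
            total = sum(w * x for w, x in zip(weight_row, inputs)) + bias
            outputs.append(max(0.0, total))  # ReLU: negative sums become 0
        return outputs
    return layer


def forward(layers, inputs):
    """Pass the data through each layer in succession."""
    for layer in layers:
        inputs = layer(inputs)
    return inputs


layers = [
    dense([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # 2 inputs -> 2 hidden units
    dense([[1.0, 2.0]], [0.1]),                    # 2 hidden units -> 1 output
]
prediction = forward(layers, [2.0, 1.0])
```

Training, which this sketch leaves out, is the step that readjusts the weights so that outputs such as `prediction` move closer to the scores people actually produced.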

The researchers fed the algorithm several tens of thousands of images from many different datasets, including LaMem, and the scene-oriented SUN and Places. Each image had received a ‘memorability score’ based on the ability of human beings to remember it in online experiments.
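
The article does not spell out how a memorability score is calculated. One simple way to turn such experiments into a number, assumed here purely for illustration, is the fraction of viewers who recognised an image when it was shown to them a second time. The function name, file names and responses below are all hypothetical.

```python
def memorability_score(responses):
    """Fraction of repeat viewings in which the image was recognised.

    Assumption: each entry is True if a viewer recognised the repeated
    image and False otherwise. The researchers' actual scoring procedure
    may include corrections this sketch does not model.
    """
    if not responses:
        raise ValueError("need at least one response")
    recognised = sum(1 for response in responses if response)
    return recognised / len(responses)


# Hypothetical responses from four viewers per image.
responses_by_image = {
    "beach.jpg": [True, True, False, True],
    "wall.jpg": [False, True, False, False],
}
scores = {
    name: memorability_score(responses)
    for name, responses in responses_by_image.items()
}
```

Under this assumption a score near 1 means almost everyone remembered the image, and a score near 0 means almost nobody did.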

Algorithm pitted against human subjects

Prof. Oliva and colleagues then set their algorithm against human volunteers by tasking the model to predict how memorable a group of people would find a new never-before-seen photograph.

Its predictions were 30% more accurate than existing algorithms, and were just a few percentage points below the average human performance.
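
The article does not name the measure behind these accuracy figures. A common way to compare predicted scores with measured ones is Spearman's rank correlation, which checks whether the model orders images the way people do. The sketch below assumes that measure and uses made-up scores; the function names are hypothetical.

```python
def ranks(values):
    """Rank values from 1 (smallest) upward. Assumes there are no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman(predicted, measured):
    """Spearman rank correlation for two equal-length, tie-free lists."""
    n = len(predicted)
    if n != len(measured) or n < 2:
        raise ValueError("need two lists of equal length, at least 2 items")
    squared_diffs = sum(
        (a - b) ** 2 for a, b in zip(ranks(predicted), ranks(measured))
    )
    return 1 - 6 * squared_diffs / (n * (n * n - 1))


# Hypothetical model predictions and human-derived scores for four images.
predicted = [0.9, 0.4, 0.7, 0.2]
same_order = spearman(predicted, [0.8, 0.5, 0.6, 0.1])
one_swap = spearman(predicted, [0.8, 0.6, 0.5, 0.1])
```

A value of 1 means the model ranks the images exactly as people did, and lower values mean the two orderings disagree more. Here `same_order` is 1 and `one_swap`, where two images trade places, is lower.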

For each picture, the algorithm creates a heat map that shows which parts of the image are most memorable. By emphasizing different regions, the researchers can potentially enhance the image’s memorability.

Alexei Efros, an associate professor of computer science at the University of California at Berkeley, said:

“CSAIL (MIT’s Computer Science and Artificial Intelligence Laboratory) researchers have done such manipulations with faces, but I’m impressed that they have been able to extend it to generic images. While you can somewhat easily change the appearance of a face by, say, making it more ‘smiley,’ it is significantly harder to generalize about all image types.”

Looking ahead – updating the system

The study also unexpectedly provided some insight into the nature of human memory. Mr. Khosla says he had wondered whether human volunteers would remember everything if they were shown just the most memorable pictures.

Mr. Khosla said:

“You might expect that people will acclimate and forget as many things as they did before, but our research suggests otherwise. This means that we could potentially improve people’s memory if we present them with memorable images.”

The scientists said they now plan to update the system so that it can predict the memory of a specific individual, as well as to better target it for ‘expert industries’ such as logo and retail clothing design.

Visual data we pay attention to

Prof. Efros said:

“This sort of research gives us a better understanding of the visual information that people pay attention to. For marketers, movie-makers and other content creators, being able to model your mental state as you look at something is an exciting new direction to explore.”

The project was supported by grants from the National Science Foundation, the MIT Big Data Initiative at CSAIL, the McGovern Institute Neurotechnology Program, Xerox and Google research awards, and Nvidia, which donated hardware.

Citation

“Understanding and Predicting Image Memorability at a Large Scale,” Aditya Khosla, Akhil S. Raju, Antonio Torralba, and Aude Oliva. International Conference on Computer Vision (ICCV), 2015.
