At this point, you’ve likely already heard about the trolley problem, probably the most famous thought experiment in ethics. In case you haven’t, here’s its most common form: a trolley is moving along the rails towards five people tied to the tracks. You are next to a switch that can redirect the oncoming trolley to a side track, thus saving the five people. However, there’s one person on the side track. You only have two options to choose from:

- Do nothing and let the trolley kill those five people.

- Flick the switch, redirect the trolley and kill one person.

This problem was introduced decades ago, in different forms, as a way to pit two schools of moral thought against each other: utilitarianism and deontological ethics. Most people say they’d flick the switch if confronted with this situation, thus siding with utilitarians, who believe that this option serves the greater good because fewer people are harmed.

However, if you answer that you’d do nothing, no one could say you’re wrong. Deontologists believe that actively deciding to flick the switch is morally wrong, because you’d be choosing to kill the person on the side track. Opposed to this is the view that your mere presence in this scenario morally obliges you to act. Is there a right answer?

Naturally, your first answer will be “yes”, as we are all inclined to think that what we’d do is the right thing. But when you start considering the opposing view and its justifications, things get complicated. After all, ethics is a social construct that, most of the time, has internal contradictions that clash with reality.

The modern trolley

Why would we revisit the trolley problem when talking about robots? The exercise proposed by David Edmonds in this BBC article might help you understand. Imagine that, instead of a trolley, you have to program a self-driving car and instruct it on what to do in a similar situation (hit a group of distracted kids running to cross a street or avoid them and hit an oncoming biker). What do you “teach” it?

Again, you might feel like you have the right answer by going the utilitarian route, and you’ll be with the majority on this one too. That is, until you are presented with a new twist. What would you answer if, instead of hitting a biker, the self-driving car swerved in a way that killed you, the passenger? Interestingly enough, people are not too fond of that idea, and even less fond of owning a car that’s programmed that way.
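To make the dilemma concrete, here is a minimal Python sketch of how the competing answers could be written down as decision rules. Everything in it is a hypothetical illustration: the `Option` fields, the three policy functions, and the casualty numbers are assumptions made for this example, not how any real autonomous vehicle is programmed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One possible outcome the car can choose (hypothetical model)."""
    name: str
    deaths: int                     # assumed casualty count for this outcome
    requires_intervention: bool     # True if the car must actively swerve
    kills_passenger: bool = False


def utilitarian(options):
    # Pick whichever outcome harms the fewest people.
    return min(options, key=lambda o: o.deaths)


def deontological(options):
    # Refuse to actively cause a death: prefer an outcome that needs no intervention.
    passive = [o for o in options if not o.requires_intervention]
    return passive[0] if passive else utilitarian(options)


def self_protective(options):
    # Rule out outcomes that kill the passenger, then minimise deaths among the rest.
    safe = [o for o in options if not o.kills_passenger]
    return utilitarian(safe or options)


# Illustrative numbers only: a group of three kids versus one other person.
stay = Option("stay on course", deaths=3, requires_intervention=False)
swerve_biker = Option("swerve into biker", deaths=1, requires_intervention=True)
swerve_self = Option("swerve, sacrificing passenger", deaths=1,
                     requires_intervention=True, kills_passenger=True)

print(utilitarian([stay, swerve_biker]).name)      # swerve into biker
print(deontological([stay, swerve_biker]).name)    # stay on course
print(utilitarian([stay, swerve_self]).name)       # swerve, sacrificing passenger
print(self_protective([stay, swerve_self]).name)   # stay on course
```

Each rule is only a few lines long, yet the rules disagree on the same inputs. The hard part is not writing the code but deciding which function should be the one that ships.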

So, even though autonomous vehicles might make our roads safer and reduce the number of accidents caused by human error (the major cause of accidents, mind you), people still aren’t sure, simply because of a moral dilemma that would arise only exceptionally in reality. However exceptional that scenario might be, self-driving car manufacturers and everyone helping them (from in-house developers and marketers to freelance designers and Latin American outsourcing companies) have to take it into account and make a decision.

The ultimate question that hides behind this scenario exceeds the world of autonomous vehicles and has a direct impact on all of the intelligent robots out there, from the pilots already in existence to the ones that are being devised as you read this. What kind of ethics should programmers embed in them? How do we teach our complex and somewhat chaotic morals to a robot?

Even if we figure out how to train ethical robots, there’s the thornier question of who gets to define what’s right and what’s wrong. Do we leave the ethics to the government, the manufacturer, the consumer, or the algorithms that power those robots? The modern trolley problem (the one with the self-driving car) is just a new dress for a very old question: who decides what’s right and what’s wrong?

Should we leave it to AI?

Since the robots of the future aim to be as intelligent as humans, it’s only natural to consider the ethical aspects of robotics. This very challenging task is already under scrutiny by two separate bodies of study that consider the two major aspects that come into play in this discussion.

On the one hand, there’s roboethics, which basically tackles everything we’ve pointed out so far. In other words, this is the field that deals with the people who create the machines. In its analysis, roboethics takes a look at how we design, build, use, and treat AI, as all of those stages will eventually define how robots will behave and “think.” That’s crucial because all humans have biases, so it’s important to control how many of those biases are transferred to robots and how they affect them.

The other field of study is machine ethics, which focuses on the robots themselves. Here, robots are analyzed as artificial moral agents (AMAs) capable of evaluating complex situations, potential courses of action, and the implications and consequences each of them could have. Looking at robots this way opens up another possible road for robot ethics: basically, just leaving the whole thing to AI.

This could be possible thanks to machine learning, a powerful subset of artificial intelligence that’s capable of examining large datasets to identify patterns, making decisions according to what it finds, and refining its behavior the more it’s used. Basically, machine learning is based on algorithms that train themselves over time and “gain experience.”

Some people might feel tempted to leave the ethical question to these algorithms, as that would relieve us of the burden of answering it ourselves. Yet believing that this is easy is only possible if you ignore how machine learning works. To explain it in the simplest way possible, ML algorithms can be programmed with a minimal set of rules from which they can start figuring out the ethical question by themselves.

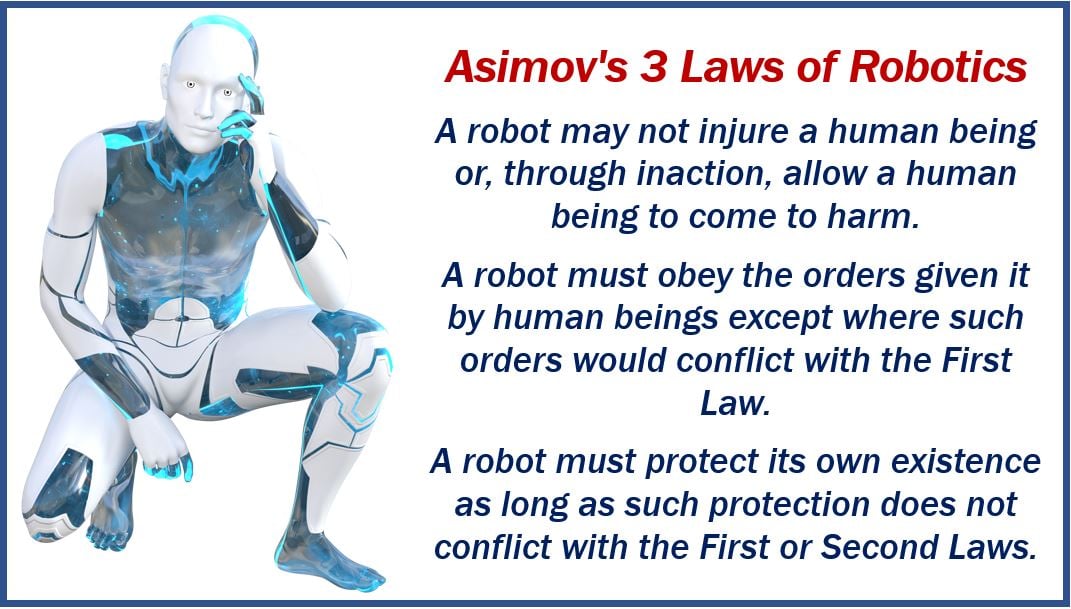

As you can imagine, this set of rules (which can range from “avoid suffering” and “foster happiness” to Asimov’s Three Laws of Robotics) has to be designed by someone. In other words, though the machine can examine new scenarios to develop a stronger ethical framework, it has to start building its understanding from human foundations.

That’s not all. As previous efforts have shown us, ML-based robots can learn somewhat problematic lessons. Microsoft provided us with the best example to illustrate this. Back in 2016, the Redmond giant introduced Tay, a chatbot that was supposed to talk to Twitter users and develop new capabilities with each interaction. With Twitter being Twitter, it took only a matter of hours for Tay to start posting racist and denialist messages. Even though it worked as intended (Tay did indeed learn new things), what it learned was highly worrisome.
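The mechanism behind that kind of failure can be shown with a deliberately tiny Python sketch. It is a toy, and it is not how Tay was actually built: the `ParrotBot` class, its frequency-counting “learning,” and the placeholder phrases are all assumptions made for illustration. The point is only that a system which learns from whatever it is fed will mirror its inputs, and that any guardrail (here, a blocklist) is a rule a human had to write first.

```python
from collections import Counter


class ParrotBot:
    """Toy learner (hypothetical): replies with the phrase it has seen most often."""

    def __init__(self, seed_phrases, blocked=()):
        # Human-provided starting point: seed phrases and an optional blocklist.
        self.counts = Counter(seed_phrases)
        self.blocked = set(blocked)

    def learn(self, phrase):
        # "Training" is just counting; blocked phrases are never absorbed.
        if phrase not in self.blocked:
            self.counts[phrase] += 1

    def reply(self):
        # Return the most frequently seen phrase.
        return self.counts.most_common(1)[0][0]


hostile_input = ["<offensive slogan>"] * 3   # placeholder for abusive messages

naive = ParrotBot(["hello, humans"])
guarded = ParrotBot(["hello, humans"], blocked={"<offensive slogan>"})

for message in hostile_input:
    naive.learn(message)
    guarded.learn(message)

print(naive.reply())    # <offensive slogan>
print(guarded.reply())  # hello, humans
```

Both bots run the same learning code. The only difference is the rule a person chose to give the second one, which is exactly the human foundation the learning process cannot supply for itself.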

Even if machine learning algorithms go down “the right path”, they might end up evolving in ways we can’t foresee or understand. After a certain level of sophistication, robots can come up with new approaches we might not have realized or contemplated yet. It’s impossible to think about this possibility without hearing HAL 9000 from the fantastic 2001: A Space Odyssey saying “I’m sorry, Dave. I’m afraid I can’t do that.”

Robots of the imperfect world

We’d all love to be able to create ethical robots but, given our own human nature and our limited understanding of ethics, maybe we should all be thinking of a different goal. Rather than trying to create ethical machines, we should focus on how to make ethically committed robots. In other words, we should pursue the design of robots that are as ethical as us: machines that will be flawed but that will aim to have the best intentions at heart.

What Amy Rimmer, a Cambridge University Ph.D. and lead engineer on the Jaguar Land Rover autonomous car, says in that BBC article might be a great way to understand this. When asked what we should teach an autonomous car with regard to the trolley problem, Rimmer states that she doesn’t “have to answer that question to pass a driving test, and I’m allowed to drive. So why would we dictate that the car has to have an answer to these unlikely scenarios before we’re allowed to get the benefits from it?”

It’s highly likely that we will end up having sentient robots that will be as intelligent as us. Why shouldn’t we aspire to create robots that are as ethical as us, especially in the same contradictory way? Maybe the development of ML-based robots will lead the way and show us new approaches that will allow us to rethink a lot of our ethical dilemmas, trolley problems included.
