Introduction to Automated Software Testing

Automated software testing is a process in which software under development is checked and verified for errors and bugs using testing software. It can cover every testing phase, including cross-platform compatibility testing. In principle, any test that is performed can be automated.

Automated testing complements manual testing by covering the tests that cannot practically be performed by hand. It is a vital part of software development, and it still relies on the analytical skills behind manual testing procedures. Automated testing does not undermine the value of manual testing; the two are closely linked and complementary. Software can be written to convert almost any manual test into an automated one, but not every test is worth automating. Several factors must be considered before deciding what to automate and when.

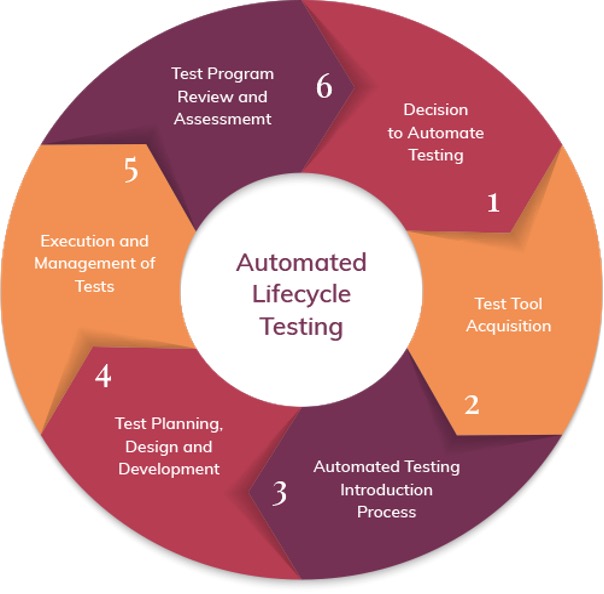

Applying software technology throughout the Software Testing Lifecycle (STL) increases its efficiency and effectiveness. Automation efforts applied across the entire STL, with a focus on automating integration and system testing, are referred to here as automated software testing (AST). The overall goal of an automation effort is to design, develop, and deliver an automated test and retest capability that improves testing efficiency. If AST is successfully implemented, it can significantly reduce the cost, time, and resources associated with traditional test and evaluation methods for complex software systems.

Automated Software Testing Checklist

Delayed testing should be avoided

Test deadlines seem endless in an integrated testing environment. Testing is frequently blamed for missed deadlines, over-budget projects, defects discovered in production, and lack of innovation. The real culprits are often inefficient system engineering processes, such as the black-box approach, in which millions of lines of code containing vast amounts of functionality are developed and only then handed over to a test team, making testing a time-consuming task.

Other factors responsible for delayed testing are summarized below:

- Poor development practices: These result in code that requires lengthy and repetitive fix cycles.

- Lack of unit testing: The more effective the unit testing efforts, the smoother and shorter the system testing efforts.

- Inefficient build methods: The processes for building and releasing software should be automated. Without automation, builds and releases are time-consuming and error-prone.

- Unrealistic deadlines: Deadlines are often set without much thought given to how long it will take to develop or test the software. Poor estimation practices compound the problem, especially when the product has been over-promised. Setting unrealistic deadlines is a sure way to ensure that deliverables fail.

Developers don’t system-test

Many developers perform unit testing, often in a test-driven development environment, and generally do a good job of testing their own software modules. However, developers still rarely perform integration and system testing. Some argue that the emphasis should shift away from testing and toward improving development processes. That is a fair point, but even with the best processes and the most talented developers, software integration and system testing will always be necessary.

There are a couple of reasons why developers do not perform system testing:

- They typically lack the time or do not specialize in testing and testing techniques.

- Developers are frequently preoccupied with churning out new code and functionality while meeting unrealistic deadlines.

Perceived quality should be achieved

Even the best processes and standards cannot solve the perception problem. For example, a system with a low defect density is still perceived as poor quality by its customers if they run into errors frequently. Conversely, a system with a high defect density can be perceived as good quality by end users if its bugs surface rarely and have almost no impact on operations.

The aim should be to improve perceived quality. This can be accomplished by concentrating testing on the most frequently used functionality of the software.
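One way to make this concrete is to split the available test effort in proportion to how often each feature is used. The following is a minimal sketch under that assumption; the feature names, usage counts, and the `allocate_test_hours` helper are hypothetical and only illustrate the idea.

```python
def allocate_test_hours(usage_counts, total_hours):
    """Split a test budget across features in proportion to observed usage.

    usage_counts maps a feature name to how often it was used
    (hypothetical numbers, e.g. taken from usage analytics).
    """
    total_usage = sum(usage_counts.values())
    if total_usage <= 0:
        raise ValueError("usage counts must sum to a positive number")
    # Most-used features come first so the output reads as a priority list.
    ordered = sorted(usage_counts.items(), key=lambda kv: kv[1], reverse=True)
    return {feature: total_hours * count / total_usage for feature, count in ordered}


# Hypothetical usage data for four features.
usage = {"admin_settings": 500, "login": 5000, "export_report": 1500, "search": 3000}
plan = allocate_test_hours(usage, total_hours=40)
for feature, hours in plan.items():
    print(f"{feature}: {hours:.1f} h")
```

With these numbers, half of the budget goes to `login` because it accounts for half of the recorded usage. A real plan would also weigh risk and complexity, not usage alone.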

How much of the test program can be automated?

Not all project tests can or should be automated. Automation should target the areas that are repeated most frequently and require the most labor. Tests that are run only once or infrequently are not high-payoff candidates unless they are difficult or time-consuming to run manually. Likewise, test cases that change with each delivery are unlikely to pay off, because the automation has to change each time as well. Before implementing AST on a project, an initial high-level assessment should be performed.

Maximum return on investment

The ultimate goal of any business venture is return on investment (ROI). Automated system testing should always be carried out in a way that maximizes ROI.

The main points to consider for the best ROI are as follows:

- Creating automated tests requires more effort than creating manual tests.

- Maintenance costs must be considered: both automated and manual tests require maintenance, so their costs should always be compared.

- The real payoff comes from tests that are re-run regularly. This is where manual testing falls short, because increased test coverage and frequent re-runs are not practical to achieve manually.

- Automating the evaluation of test results saves time. Automating test execution is hardly worthwhile if the results still have to be analyzed manually.
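The points above can be turned into a rough break-even estimate: automation costs more up front but less per run, so it pays off only after enough re-runs. The sketch below assumes a simple linear cost model; the function name, parameters, and figures are hypothetical and are not taken from any particular project.

```python
import math


def break_even_runs(build_cost, automated_cost_per_run, manual_cost_per_run):
    """Smallest number of runs at which automation is cheaper than manual testing.

    Assumed cost model (all values in the same unit, e.g. hours):
      manual total    = runs * manual_cost_per_run
      automated total = build_cost + runs * automated_cost_per_run
    automated_cost_per_run should include maintenance and any manual
    analysis of results. Returns None when automation never pays off.
    """
    saving_per_run = manual_cost_per_run - automated_cost_per_run
    if saving_per_run <= 0:
        return None
    # Smallest integer n with n * saving_per_run > build_cost.
    return math.floor(build_cost / saving_per_run) + 1


# Hypothetical figures: 40 h to build the automated test, 0.5 h per
# automated run, 4 h per manual run.
print(break_even_runs(40, 0.5, 4))

# If results are still analyzed by hand and each automated run costs as
# much as a manual one, automation never breaks even.
print(break_even_runs(40, 4, 4))
```

Under these assumed figures the test has to be run 12 times before automation becomes cheaper, which is why tests that are run once or only rarely seldom justify the effort.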

Need for speeding up the test effort and increasing efficiency

Speeding up testing and shortening product delivery time are closely related. If a particular test activity is not on the critical path, automating it is unlikely to affect delivery time. On the other hand, automating a test or set of test program activities that is truly on the critical path can significantly improve software delivery time. In general, increasing testing efficiency helps speed up the testing process while also lowering costs.

A tester should consider the following questions when evaluating testing efficiency:

- What types of software defects should be avoided the most?

- Which set of test activities and techniques should be the most effective in detecting these types of software defects?

- Which tests are critical to repeat consistently? These are the tests that are run most frequently because they cover a complex, problematic area and have the potential to uncover the most defects.

Some tests, such as speed, concurrency, and performance tests, are too complex to perform manually. These tests require automation.
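A concurrency test is a good illustration of a check that cannot realistically be done by hand. The sketch below uses Python's standard `threading` and `concurrent.futures` modules to hammer a small counter from several threads and verify that no update is lost. The `Counter` class stands in for the component under test and is purely hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class Counter:
    """Hypothetical component under test: a counter meant to be thread-safe."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def increment(self):
        # The lock makes the read-modify-write step atomic.
        with self._lock:
            self._value += 1

    @property
    def value(self):
        return self._value


def run_concurrent_increments(workers=8, increments_per_worker=10_000):
    """Increment one shared counter from several threads and return its final value."""
    counter = Counter()

    def work():
        for _ in range(increments_per_worker):
            counter.increment()

    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(work) for _ in range(workers)]
        for future in futures:
            future.result()  # re-raises any exception from a worker thread
    return counter.value


def test_counter_is_thread_safe():
    # Every increment must be counted: 8 workers * 10,000 increments each.
    assert run_concurrent_increments(8, 10_000) == 80_000
```

A test function in this shape can be collected by a runner such as pytest and repeated on every build, which is the kind of consistent re-execution that manual testing cannot offer.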

The following points should be considered to speed up test efforts:

- Developers should have the necessary skills for efficient testing

- Defects should be discovered early enough in the testing cycle

- The testing schedule should be adequate: Even the most efficient testing team will fail if there isn’t enough time to implement and execute the best-laid plans for the test program.

The activities of a test program should be tailored to project needs. Once an inventory of test activities has been completed and assessed against those needs, a tester can make an objective recommendation about which areas and phases of the STL would benefit from automation. In most cases, the test activities that contribute to multiple business needs are the most beneficial to automate.

Need for decreasing testing cost

One of the benefits of speeding up testing and increasing efficiencies is that testing costs can be reduced. A tester should be aware of testing costs to calculate the business benefit. Testing costs can be reduced if test activities are carefully analyzed and AST is applied to the appropriate areas.

Testing costs can be reduced by working through questions such as:

- Which tests add the most value, i.e. cover the highest-risk areas and must be performed at all costs?

- Do the tests that are the most expensive to execute add the most value?

- Which of these tests is most frequently repeated?

- How much time and effort is required to carry out these tests?

- What effect would it have on confidence in software delivery if these tests could be run more frequently because they are now automated?

- Can test coverage be expanded?

Which testing tool to consider?

Finding the best testing tool for an organization requires a thorough understanding of the problem to be solved, as well as the specific needs and requirements of the task. Once the problem has been identified, it is possible to begin evaluating tools. A tester can choose between commercial and open-source solutions. Commercial solutions have their benefits, but purchasing a tool from a software vendor also has drawbacks, such as vendor lock-in, lack of interoperability with other products, lack of control over improvements, and licensing costs and restrictions.

During tool evaluation, a tester assigns a weight from 1 to 5 to each tool criterion based on its importance: the more important a criterion is for the client, the higher the weight. The tester then scores each tool against the criteria. Weight and score are multiplied to generate the final “tool score,” which is used to compare the features and capabilities of the candidate tools and determine the best fit.

The steps for selecting the best tool for automated testing can be summarized as follows:

- Determine the issue we’re attempting to solve.

- Simplify the tool’s requirements and criteria.

- Create a list of tools that meet the requirements.

- Based on importance or priority, assign a weight to each tool criterion.

- Score each tool candidate after evaluating it.

- Multiply the weight by the score of each tool candidate to obtain the tool’s overall score for comparison.
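The last three steps can be sketched in a few lines of Python. The criteria, weights, tool names, and scores below are hypothetical. Adding up the weighted scores across criteria is an assumption made here so that each tool ends up with a single number; the text only specifies multiplying weight by score.

```python
# Hypothetical criteria with weights from 1 (least important) to 5 (most important).
WEIGHTS = {
    "meets_requirements": 5,
    "maturity": 3,
    "user_base": 4,
    "licensing_cost": 2,
}

# Hypothetical scores (1 to 5) given to each candidate after evaluation.
CANDIDATES = {
    "Tool A": {"meets_requirements": 4, "maturity": 5, "user_base": 3, "licensing_cost": 2},
    "Tool B": {"meets_requirements": 5, "maturity": 2, "user_base": 4, "licensing_cost": 5},
}


def tool_score(scores, weights):
    """Multiply each criterion's weight by the tool's score and add the results."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def rank_tools(candidates, weights):
    """Return (tool name, overall score) pairs, highest score first."""
    totals = {name: tool_score(scores, weights) for name, scores in candidates.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


for name, score in rank_tools(CANDIDATES, WEIGHTS):
    print(f"{name}: {score}")
```

With these made-up numbers, Tool B scores 57 and Tool A scores 51, so Tool B would be the better fit even though Tool A is the more mature product. The heavily weighted criteria dominate the outcome, which is the intent of weighting.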

Some other criteria for evaluating tools are as follows:

- Is the tool able to meet the high-level requirements?

- Age: Is the tool new, or has it been on the market for a while? A tool that has been in use for several years should be well known.

- User base: A large user base generally indicates good utility. A thriving open-source development community also means more people providing feedback and suggestions.

- Previous experience: The developer or the client may have had a positive experience with a specific tool that meets the high-level requirements, age, and user base criteria described above.

Conclusion

Software testing is a critical component of the software development process and plays a part in every stage of the software lifecycle. A successful test strategy begins with careful consideration during the requirements specification process. Testing, like the other activities in the software lifecycle, presents its own set of challenges. The importance of effective, well-planned testing efforts will only grow as software systems become more complex.

LambdaTest Automation offers cloud-based automation testing on Selenium. You can automate your testing across 2000+ devices including mobiles, tablets, and desktop browsers. The tool offers parallel testing on the Selenium grid, thus ensuring a faster go-to-market launch.
