Researchers are training artificial intelligence (AI) to read human emotions more accurately.

This development holds significant potential for industries like healthcare and education, where emotion recognition could play a key role in improving personalized care and support.

The Complex Nature of Emotions

Human emotions are inherently complex, making it difficult even for people to understand each other’s feelings accurately. The challenge of recognizing and quantifying emotions becomes even greater when machines attempt to interpret these feelings.

However, researchers are working hard to bridge this gap, using a combination of traditional psychological methods and cutting-edge AI technology to develop systems capable of detecting emotions in ways previously thought impossible.

According to Feng Liu, a lead researcher on the project and an expert in AI at East China Normal University, “This technology has the potential to transform fields such as healthcare, education, and customer service, facilitating personalized experiences and enhanced comprehension of human emotions.”

Liu published the work in CAAI Artificial Intelligence Research, an open-access, peer-reviewed academic journal (citation below).

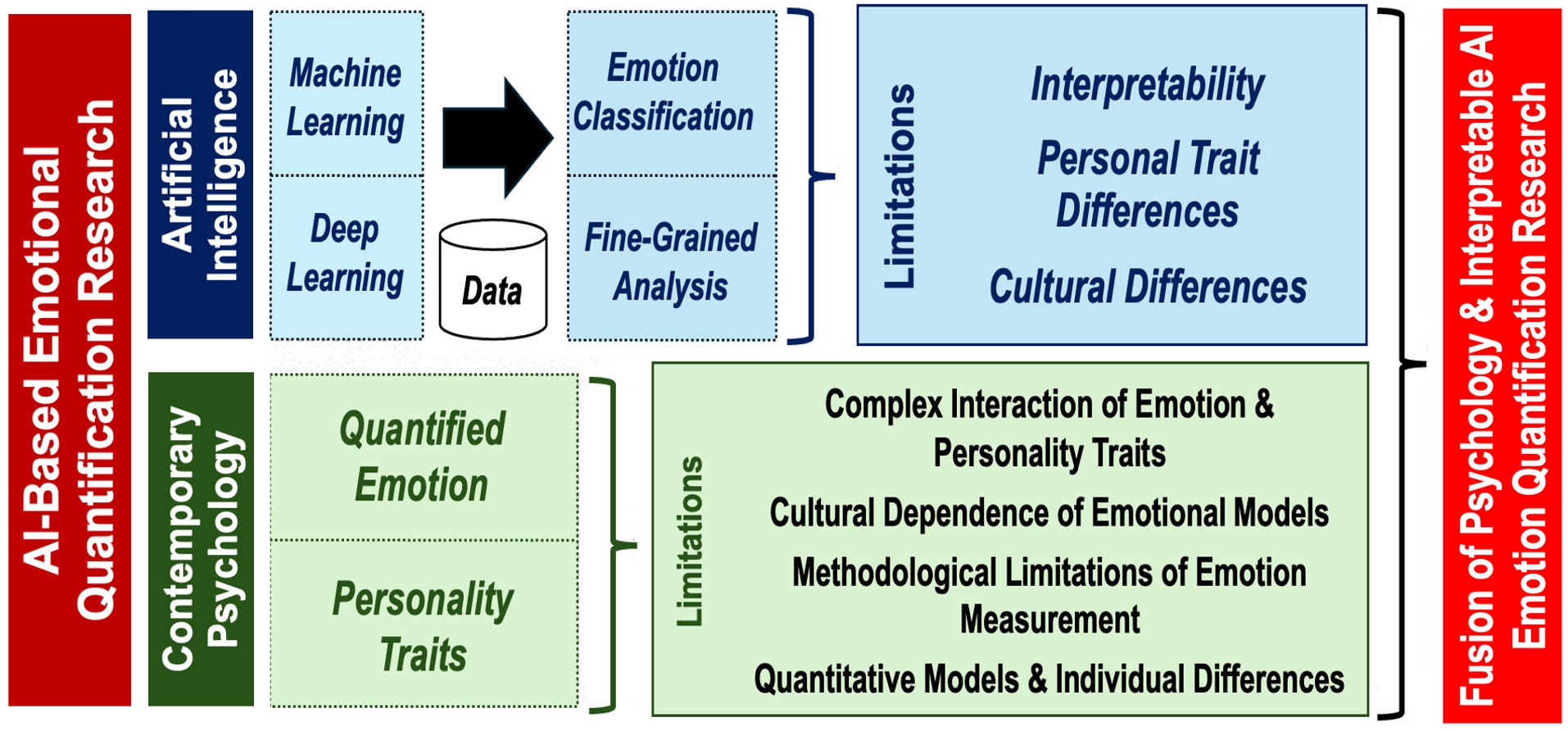

Using both contemporary psychological methods and AI tools can help achieve a clearer path to emotion quantification through artificial intelligence. Image courtesy of Feng Liu, East China Normal University.

Multi-Modal Emotion Recognition: How It Works

AI’s ability to understand human emotions relies on various inputs, including gestures, facial expressions, and even physiological signals.

For instance, facial emotion recognition (FER) technology analyzes facial cues to determine emotional states, while other methods use heart-rate variability or electrical skin responses to interpret levels of emotional arousal.

Some systems can even combine brain activity data from EEG scans with eye-tracking technology to infer people’s emotional states.

A major advancement in this field is multi-modal emotion recognition, which integrates information from multiple sensory channels, such as sight, sound, and touch.

This fusion of data gives AI a more holistic understanding of emotional states, helping it to generate accurate and meaningful responses.
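One simple way to picture this kind of fusion is a weighted average of per-modality predictions, often called late fusion. The sketch below is only an illustration under stated assumptions and is not the method described in Liu's paper: the label set, the modality names, the scores, and the weights are all hypothetical.

```python
from typing import Dict, List

# Hypothetical label set, chosen only for illustration.
EMOTIONS: List[str] = ["happy", "sad", "angry", "neutral"]


def fuse_modalities(
    scores: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Late fusion: weighted average of per-modality probability vectors."""
    total_weight = sum(weights[m] for m in scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    fused = [0.0] * len(EMOTIONS)
    for modality, probs in scores.items():
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{modality}: expected {len(EMOTIONS)} scores")
        for i, p in enumerate(probs):
            fused[i] += weights[modality] * p / total_weight
    return fused


def top_emotion(fused: List[float]) -> str:
    """Return the label with the highest fused score."""
    return EMOTIONS[max(range(len(fused)), key=fused.__getitem__)]


# Made-up scores from three hypothetical single-modality models.
scores = {
    "face": [0.6, 0.1, 0.1, 0.2],
    "voice": [0.3, 0.4, 0.1, 0.2],
    "physiology": [0.2, 0.5, 0.1, 0.2],
}
# Assumed weights; a real system would tune or learn these.
weights = {"face": 0.5, "voice": 0.3, "physiology": 0.2}

fused = fuse_modalities(scores, weights)
print(top_emotion(fused))  # prints "happy"
```

In this made-up example the voice and physiology scores lean toward "sad", but the more heavily weighted facial score tips the fused result to "happy", which shows how much the choice of weights can matter.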

“It is believed that interdisciplinary collaboration between AI, psychology, psychiatry, and other fields will be key in achieving this goal and unlocking the full potential of emotion quantification for the benefit of society,” Liu said.

By combining expertise from various fields, the researchers hope to enhance AI’s capacity to respond appropriately to different emotional cues.

A Tool for Mental Health

With mental health becoming an increasingly important issue, emotion-quantifying AI offers an exciting opportunity to monitor psychological well-being.

These systems can be used to create personalized experiences tailored to an individual’s emotional state, which could help in providing mental health care without the need for constant human interaction.

AI could assess a person’s mental health by analyzing a variety of emotional signals over time.

For example, changes in heart rate, facial expressions, and even speech patterns could indicate shifts in mental well-being. By recognizing these signs early, AI may provide timely intervention or support.
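As a rough illustration of tracking a signal over time, the sketch below flags days on which a reading departs sharply from that person's own recent baseline. It is a toy example under stated assumptions, not a clinical method and not taken from the paper: the function name `flag_shifts`, the seven-day window, the threshold of two standard deviations, and the heart-rate values are all hypothetical.

```python
from statistics import mean, stdev
from typing import List


def flag_shifts(
    readings: List[float],
    baseline_days: int = 7,   # assumed window length
    threshold: float = 2.0,   # assumed cutoff, in standard deviations
) -> List[int]:
    """Return indices of readings far from the preceding baseline window."""
    flagged = []
    for i in range(baseline_days, len(readings)):
        window = readings[i - baseline_days:i]
        mu = mean(window)
        sigma = stdev(window)
        if sigma == 0:
            continue  # flat baseline: no meaningful deviation score
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Made-up daily resting heart rates (beats per minute).
daily_resting_hr = [62, 63, 61, 62, 64, 63, 62, 63, 75, 62]
print(flag_shifts(daily_resting_hr))  # prints [8]
```

Only the jump to 75 is flagged here. A real monitoring system would need to combine several signals and account for ordinary causes of variation, such as exercise or illness, before suggesting any intervention.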

Ethical Concerns

However, with these advancements come important ethical concerns. Issues such as data privacy and cultural sensitivity must be carefully managed to ensure that emotion-recognition technologies are used safely and transparently.

“Data handling practices and privacy measures taken by the entities using this type of AI will have to be stringent,” emphasized Liu.

The Future of Emotion Recognition AI

While the potential of emotion-quantifying AI is exciting, there are still challenges to overcome.

AI systems must be able to adapt to cultural differences in how emotions are expressed and understood. Additionally, privacy concerns regarding sensitive emotional data must be addressed before widespread adoption can occur.

Despite these challenges, the fusion of AI with fields like psychology and psychiatry could lead to significant breakthroughs in emotion recognition and quantification.

The research being conducted by Feng Liu and his team could shape the future of human-computer interaction, making technology more responsive to human needs and emotions.

Final Thoughts

AI’s ability to understand human emotions is no longer just a concept but a fast-approaching reality.

By combining various technologies and interdisciplinary research, AI could revolutionize healthcare, education, and other sectors by creating more empathetic and responsive systems tailored to individual emotional needs.

Citation

Liu F. Artificial Intelligence in Emotion Quantification: A Prospective Overview. CAAI Artificial Intelligence Research, 2024, 3: 9150040. https://doi.org/10.26599/AIR.2024.9150040