According to Anthropic, regulatory frameworks are urgently needed to address the growing risks of artificial intelligence (AI) misuse.

AI already excels at mathematics and programming, and is getting better at reasoning. At the current rate of progress, models will keep getting more capable and will be able to carry out increasingly complex tasks.

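There are legitimate concerns about AI being misused in high-risk areas, such as the development of chemical, biological, radiological, and nuclear weapons.

Anthropic argues that the next 18 months are critical for policymakers to establish regulations that reduce these risks.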

For its part, Anthropic introduced its “Responsible Scaling Policy” in 2023 and most recently updated it in October 2024.

Other industry leaders also recognize the need for more vigilance when it comes to the misuse of AI. Recent research by Google DeepMind showed how generative AI is being exploited with tactics that range from targeted misinformation to large-scale influence operations. Taken together, the findings from Anthropic and Google DeepMind show that even the largest players in the field see the need for robust regulatory frameworks to mitigate these risks.

Understanding the Responsible Scaling Policy (RSP)

What is RSP?

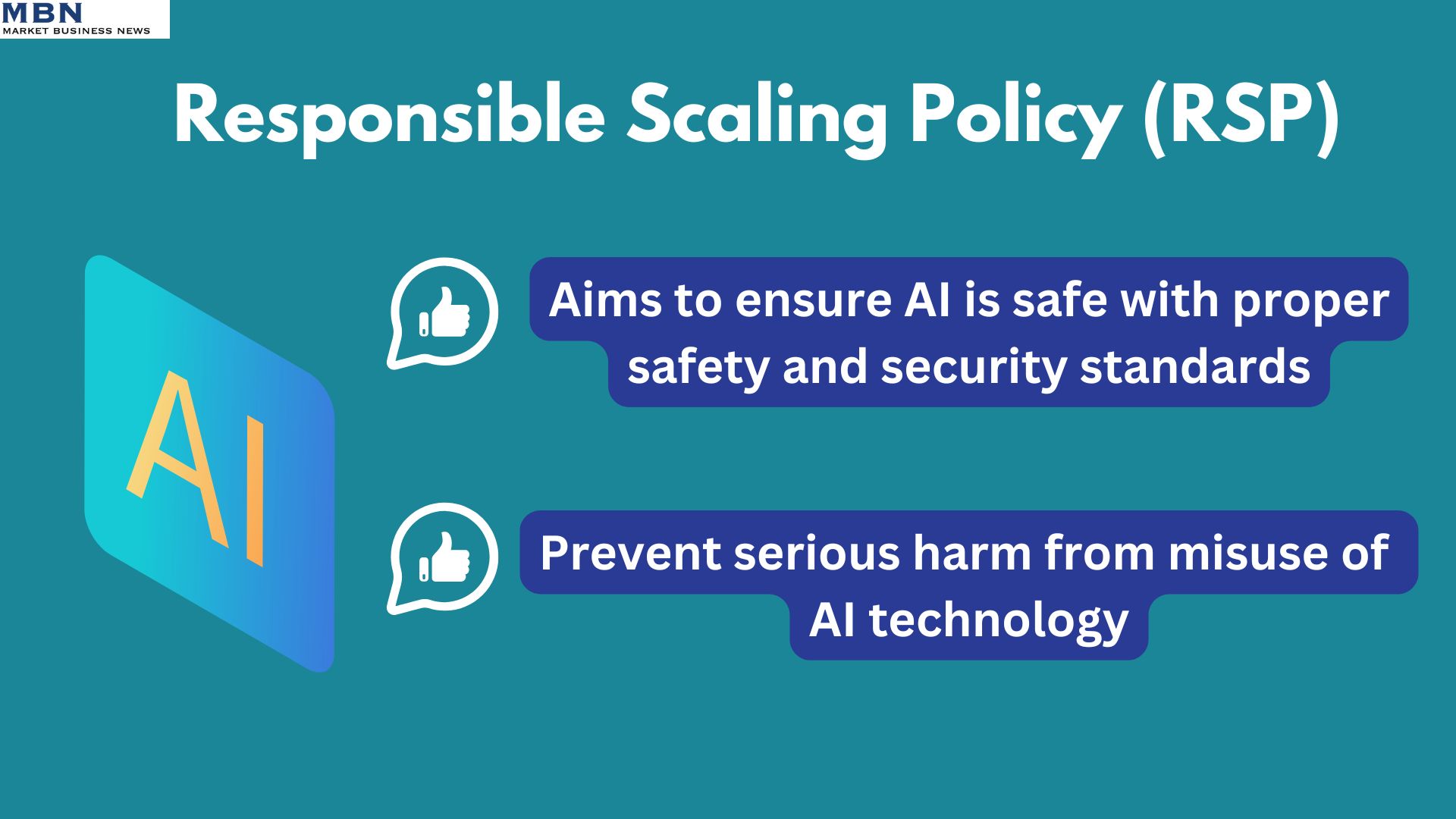

The Responsible Scaling Policy (RSP) is a framework created by Anthropic to keep the development of AI in line with essential safety and security standards.

The RSP aims to help prevent serious harm that could arise from AI technology by promoting responsible practices. It encourages the development of AI in a careful and adaptable way, continually checking for and reducing potential risks.

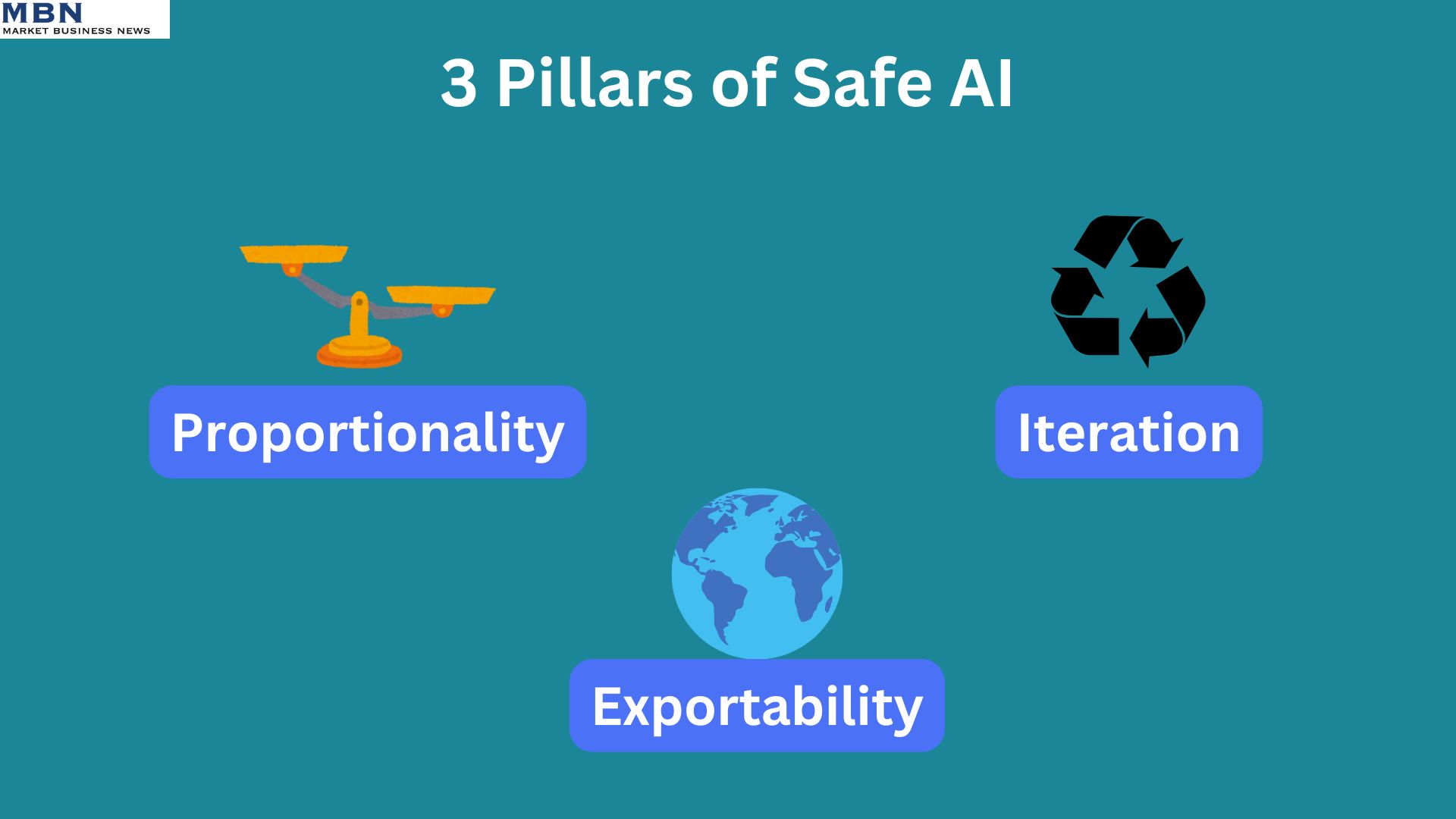

The framework is built around three main ideas:

1. Proportionality: The safety measures for AI should match how much risk the technology brings. In other words, the more potentially risky the AI is, the stronger the safety steps should be.

2. Iteration: As AI technology continues to develop, safety measures also need to change and improve. Revisiting them regularly keeps the framework prepared for new challenges as they arise.

3. Exportability: The guidelines for AI safety are designed to be adaptable, so they can be used in different industries and countries. This aims to create a shared standard for keeping AI safe worldwide.

Capability Thresholds and Required Safeguards

Capability Thresholds

Anthropic defines “Capability Thresholds”: markers that identify when an AI model has become risky enough to need extra safety precautions. A model crosses such a threshold if it can help create dangerous substances or technology (such as chemical, biological, radiological, and nuclear weapons) or if it can carry out advanced research on its own.

As these technologies improve, we need more robust security measures and restrictions on how they can be used to avoid potential harm.

ASL Standards

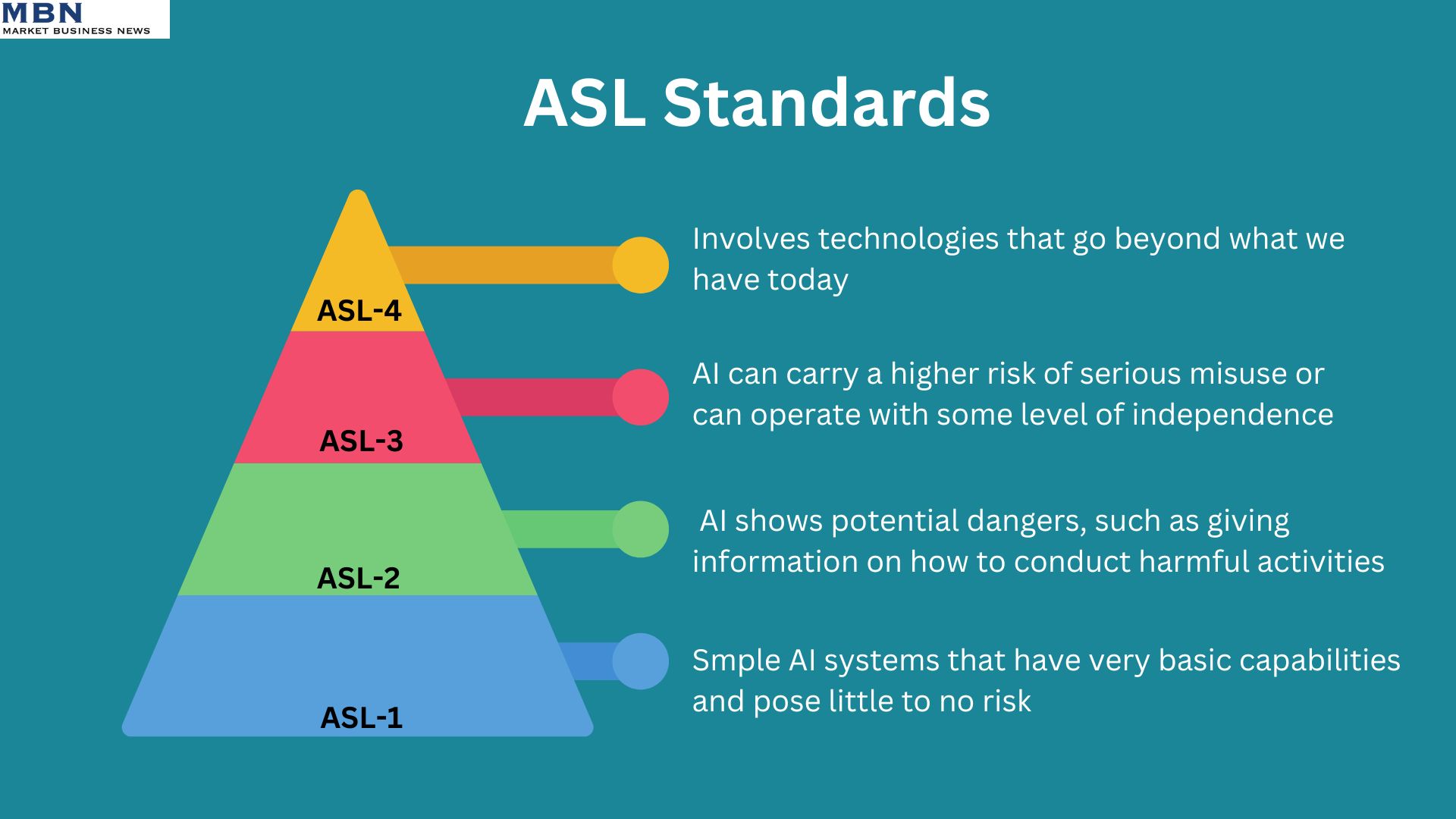

Anthropic has created a tiered system that classifies AI models by the risk they pose. Each tier comes with safeguards that a model must meet to keep it secure from unauthorized access and to prevent misuse.

The policy describes the following AI Safety Levels (ASLs):

ASL-1: This level is for simple AI systems that have very basic capabilities and pose little to no risk, like basic chess-playing programs. Only simple safety measures are necessary at this stage.

ASL-2: This level covers AI models that are starting to show potential dangers, such as giving information on how to conduct harmful activities. However, that information may be unreliable and is often no more than what a search engine would readily turn up.

ASL-3: At this level, AI systems carry a higher risk of serious misuse than regular resources such as search engines, or can operate with some level of independence. This calls for stricter safety measures and tighter control over how these systems are used, such as limiting who can access them and keeping their underlying structures secure.

ASL-4 and Higher: These levels are set aside for future AI models that will have advanced abilities and could present significant risks. The specific rules and safety measures for these levels haven’t been established yet, because they involve technologies that go beyond what we have today.

These guidelines help ensure that AI technologies are used safely and responsibly.
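One way to picture the tiers above is as a simple lookup from observed capabilities to a safety level and its safeguards. The following is a minimal sketch for illustration only, not Anthropic's actual evaluation process: the `CapabilityFindings` fields, the `classify` function, and the `SAFEGUARDS` table are all hypothetical names, and the wording of each safeguard is paraphrased from the descriptions in this article.

```python
from dataclasses import dataclass
from enum import IntEnum


class ASL(IntEnum):
    """AI Safety Levels as described in this article (higher = riskier)."""
    ASL_1 = 1
    ASL_2 = 2
    ASL_3 = 3
    ASL_4_PLUS = 4  # reserved for future models; safeguards not yet defined


@dataclass(frozen=True)
class CapabilityFindings:
    """Hypothetical evaluation results; field names are illustrative only."""
    gives_harmful_info: bool = False         # unreliable, search-engine-level info
    exceeds_regular_resources: bool = False  # serious misuse risk beyond regular resources
    operates_independently: bool = False     # some level of independence


# Safeguards paraphrased from the article, not quoted from the policy itself.
SAFEGUARDS = {
    ASL.ASL_1: ["simple safety measures"],
    ASL.ASL_2: ["safeguards against supplying harmful information"],
    ASL.ASL_3: ["limit who can access the model", "secure the underlying structures"],
    ASL.ASL_4_PLUS: [],  # not established yet
}


def classify(findings: CapabilityFindings) -> ASL:
    """Map findings to the lowest level whose description they match.

    ASL-4+ is never returned because its criteria are not yet defined.
    """
    if findings.exceeds_regular_resources or findings.operates_independently:
        return ASL.ASL_3
    if findings.gives_harmful_info:
        return ASL.ASL_2
    return ASL.ASL_1


if __name__ == "__main__":
    model = CapabilityFindings(gives_harmful_info=True, operates_independently=True)
    level = classify(model)
    print(level.name, SAFEGUARDS[level])
```

Using an ordered enum reflects the proportionality idea described earlier: a higher level always implies safeguards at least as strict as those of the level below it.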

The Role of Regulation in AI

Anthropic is calling for rules around AI, stating that advanced AI could lead to severe problems without proper regulation. It believes that rules should focus on essential safety measures instead of limiting specific uses of AI. This way, innovation can continue while the environment stays safe for everyone.

Anthropic also says that any regulatory framework should be flexible, so that it can adapt as AI technology evolves.

This approach would help manage the risks of AI while avoiding overly strict rules that could keep society from realizing its benefits.