Researchers warn that current laws and regulations regarding artificial intelligence leave individuals’ health data vulnerable to misuse: they do not adequately safeguard people’s confidential health information.

Researchers from MIT, the University of California, Berkeley, Tsinghua University, and the University of California, San Francisco, carried out a study on how well current laws and regulations protect health data in the face of advances in artificial intelligence.

They wrote about the findings in the journal JAMA Network Open (citation below). JAMA stands for the Journal of the American Medical Association.

According to the authors, current laws and regulations fail miserably at keeping people’s health status private in the face of AI development.

The letters AI stand for Artificial Intelligence. The term refers to software that makes robots or computers think and act like human beings. Some experts insist that it is only AI if it performs at least as well as a human.

Legislation regarding privacy of health data

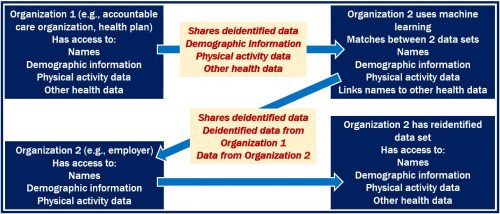

The authors showed that AI could identify individuals by learning the daily patterns in their step data and correlating those patterns with demographic data. Such step data is collected by smartphones, smartwatches, and activity trackers, for example.
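The following toy Python sketch makes the step-data finding more concrete. It is not the authors’ method and uses no real data. The hourly step profiles, the age bands, and the helper names (`noisy_profile`, `reidentify`, `simulate`) are all hypothetical. Each synthetic person has a personal daily rhythm. An adversary holds named profiles from one period and tries to match a later, name-stripped release by finding the closest profile among people who share the same demographics.

```python
import math
import random

HOURS = 24  # one average step count per hour of the day (hypothetical feature set)

def noisy_profile(base, noise, rng):
    # One observation period: the person's typical hourly steps plus random noise,
    # clipped at zero because step counts cannot be negative.
    return [max(0.0, b + rng.gauss(0.0, noise)) for b in base]

def distance(a, b):
    # Euclidean distance between two hourly step profiles.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def reidentify(labeled, anonymous):
    # labeled: list of (name, demographics, profile) the adversary already holds.
    # anonymous: list of (demographics, profile) with names stripped.
    # For each anonymous record, guess the closest labeled person with matching demographics.
    guesses = []
    for demo, profile in anonymous:
        candidates = [(name, p) for name, d, p in labeled if d == demo]
        best = min(candidates, key=lambda c: distance(c[1], profile))
        guesses.append(best[0])
    return guesses

def simulate(n_people=200, noise=30.0, seed=0):
    # Build hypothetical people, each with a personal daily rhythm and demographics.
    rng = random.Random(seed)
    people = []
    for i in range(n_people):
        base = [rng.uniform(0.0, 500.0) for _ in range(HOURS)]
        demo = (rng.choice(["20-39", "40-59", "60+"]), rng.choice(["F", "M"]))
        people.append((f"person_{i}", demo, base))
    # Period 1: named data the adversary holds.
    labeled = [(name, demo, noisy_profile(base, noise, rng)) for name, demo, base in people]
    # Period 2: the "de-identified" release. Record order is irrelevant to the matcher,
    # so we keep it aligned with true_names purely for scoring.
    true_names = [name for name, _, _ in people]
    anonymous = [(demo, noisy_profile(base, noise, rng)) for _, demo, base in people]
    guesses = reidentify(labeled, anonymous)
    correct = sum(g == t for g, t in zip(guesses, true_names))
    return correct / n_people  # fraction of anonymous records matched to the right person

print(simulate())
```

Nearest-neighbor matching was chosen only because it is the simplest way to show the idea. The study itself trained machine-learning models on NHANES data, and this toy’s accuracy on synthetic profiles says nothing about theirs.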

The researchers mined two years’ worth of data covering 15,000 Americans. They concluded that lawmakers must revisit and rework the Health Insurance Portability and Accountability Act (HIPAA) of 1996.

Laws don’t protect health data as much as you’d think

Anil Aswani, an Assistant Professor in Industrial Engineering and Operations Research (IEOR) at the University of California, Berkeley, said:

“We wanted to use NHANES (the National Health and Nutrition Examination Survey) to look at privacy questions because this data is representative of the diverse population in the U.S.”

“The results point out a major problem. If you strip all the identifying information, it doesn’t protect you as much as you’d think. Someone else can come back and put it all back together if they have the right kind of information.”

“In principle, you could imagine Facebook gathering step data from the app on your smartphone, then buying health care data from another company and matching the two. Now they would have health care data that’s matched to names, and they could either start selling advertising based on that or they could sell the data to others.”
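The matching scenario Prof. Aswani describes is a form of record linkage. The sketch below is a hypothetical illustration, not any company’s actual practice. The field names (`zip`, `birth_year`, `sex`), the records, and the `link` helper are all invented. It joins a named dataset with a name-stripped health dataset on shared attributes. Whenever exactly one named person matches a health record, it attaches that person’s name to the record.

```python
from collections import defaultdict

def link(named_records, health_records, keys):
    # Index the named records (e.g., from a step-tracking app) by their shared attributes.
    index = defaultdict(list)
    for rec in named_records:
        index[tuple(rec[k] for k in keys)].append(rec["name"])
    linked = []
    for rec in health_records:
        # .get() does not insert missing keys into a defaultdict.
        names = index.get(tuple(rec[k] for k in keys), [])
        if len(names) == 1:  # a unique match re-identifies the "anonymous" record
            linked.append({**rec, "name": names[0]})
    return linked

# Hypothetical named step data.
named = [
    {"name": "Alice", "zip": "94720", "birth_year": 1980, "sex": "F", "avg_steps": 8200},
    {"name": "Bob", "zip": "94720", "birth_year": 1975, "sex": "M", "avg_steps": 4100},
    {"name": "Carol", "zip": "02139", "birth_year": 1990, "sex": "F", "avg_steps": 10500},
    {"name": "Dana", "zip": "02139", "birth_year": 1990, "sex": "F", "avg_steps": 7300},
]
# Hypothetical health records with names removed.
health = [
    {"zip": "94720", "birth_year": 1980, "sex": "F", "diagnosis": "type 2 diabetes"},
    {"zip": "94720", "birth_year": 1975, "sex": "M", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_year": 1990, "sex": "F", "diagnosis": "pregnancy"},
]

for r in link(named, health, ["zip", "birth_year", "sex"]):
    print(r["name"], r["diagnosis"])  # prints "Alice type 2 diabetes", then "Bob hypertension"
```

In this example, Alice’s and Bob’s records are re-identified. The two women born in 1990 in ZIP 02139 remain ambiguous. Adding more shared attributes, such as step patterns, would shrink ambiguous groups like that one.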

Problem is not with devices

Prof. Aswani said the problem lies not with the devices themselves but with how the health data they capture can be misused. ‘Misuse’ includes, for example, selling health data on the open market.

Regarding these devices, Prof. Aswani said:

“I’m not saying we should abandon these devices. But we need to be very careful about how we are using this data. We need to protect the information. If we can do that, it’s a net positive.”

The study focused on step data. However, the findings suggest there is a broader threat to health data privacy.

HIPAA does not cover many tech companies

Prof. Aswani said:

“HIPAA regulations make your health care private, but they don’t cover as much as you think. Many groups, like tech companies, are not covered by HIPAA, and only very specific pieces of information are not allowed to be shared by current HIPAA rules.”

“There are companies buying health data. It’s supposed to be anonymous data, but their whole business model is to find a way to attach names to this data and sell it.”

AI advances make it easier for companies to gain access to individuals’ health data, the authors said, and the temptation to use that data unethically, or even illegally, will grow.

Lenders, credit card firms, and employers could use AI to discriminate based on, for example, disability status or pregnancy.

We need new legislation to protect health data

Prof. Aswani said:

“Ideally, what I’d like to see from this are new regulations or rules that protect health data. But there is actually a big push to even weaken the regulations right now. For instance, the rule-making group for HIPAA has requested comments on increasing data sharing.”

“The risk is that if people are not aware of what’s happening, the rules we have will be weakened. And the fact is the risks of us losing control of our privacy when it comes to health care are actually increasing and not decreasing.”

Citation

“Feasibility of Reidentifying Individuals in Large National Physical Activity Data Sets From Which Protected Health Information Has Been Removed With Use of Machine Learning,” Liangyuan Na, BA; Cong Yang, BS; Chi-Cheng Lo, BS; Fangyuan Zhao, BS; Yoshimi Fukuoka, PhD, RN; and Anil Aswani, PhD. JAMA Network Open, 2018;1(8):e186040. DOI: 10.1001/jamanetworkopen.2018.6040.