The Facebook emotion contagion experiment carried out on nearly 700,000 unsuspecting users has triggered an investigation by the US Federal Trade Commission (FTC). The Electronic Privacy Information Center (EPIC) filed a complaint with the FTC demanding an investigation.

According to the complaint, Facebook’s secretive and non-consensual use of personal data to carry out an ongoing psychological experiment was an action that deliberately messed with people’s minds.

700,000 users targeted

Facebook changed the News Feeds of 700,000 users to prompt negative or positive emotional responses. The psychological experiment was a joint venture between Facebook and researchers from the University of California, San Francisco, and Cornell University. The complaint says they did not follow ethical protocols for human subject research.

EPIC says Facebook failed to state in its Data Use Policy that user data would be used in an experiment. The social media giant also did not tell users that the researchers would have access to their personal information.

The US privacy pressure group wrote that “at the time of the experiment, Facebook was subject to a consent order with the FTC which required the company to obtain users’ affirmative express consent prior to sharing user information with third parties.”

According to EPIC, Facebook’s conduct was both:

- a violation of the Commission’s 2012 Consent Order, and

- a deceptive trade practice under Section 5 of the FTC Act.

EPIC asks the FTC to impose sanctions and make Facebook publish the algorithm by which it generates the News Feed for all its users.

Facebook social contagion experiment

The study, titled “Experimental evidence of massive-scale emotional contagion through social networks” and published in the journal Proceedings of the National Academy of Sciences (PNAS), suggests that by tweaking what is allowed into a Facebook user’s News Feed, his or her emotions can be manipulated.

Some of the nearly 700,000 users received more good news in their feeds while others got more bad news. None of them knew they had been selected as guinea pigs.

The research team was trying to determine whether Facebook could make people feel unhappy or happy by creating unrealistic expectations of how good or bad their lives should be. Some of the people being monitored were depressed when their News Feeds were manipulated negatively.

The researchers did not ask the guinea pigs how they felt. Instead, they analyzed the users' Facebook posts, using the words people chose as an indicator of mood.
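The word-based approach described above can be illustrated with a minimal Python sketch. It assumes a post counts as positive or negative if it contains at least one word from a corresponding list. The word lists and the function names `classify_post` and `mood_rates` are hypothetical and chosen only for illustration; they are not the lists or tools the researchers used.

```python
import re

# Hypothetical word lists for illustration only; not the lists used in the study.
POSITIVE_WORDS = {"happy", "great", "love", "wonderful"}
NEGATIVE_WORDS = {"sad", "awful", "hate", "terrible"}


def classify_post(text):
    """Return (has_positive, has_negative) for a single post."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return bool(words & POSITIVE_WORDS), bool(words & NEGATIVE_WORDS)


def mood_rates(posts):
    """Return the fraction of posts with at least one positive / negative word."""
    if not posts:
        return 0.0, 0.0
    flags = [classify_post(p) for p in posts]
    positive_rate = sum(1 for has_pos, _ in flags if has_pos) / len(posts)
    negative_rate = sum(1 for _, has_neg in flags if has_neg) / len(posts)
    return positive_rate, negative_rate
```

Comparing these rates between users whose feeds were altered and users whose feeds were left alone would give a rough measure of any shift in mood, although simple word matching of this kind cannot detect sarcasm or context.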

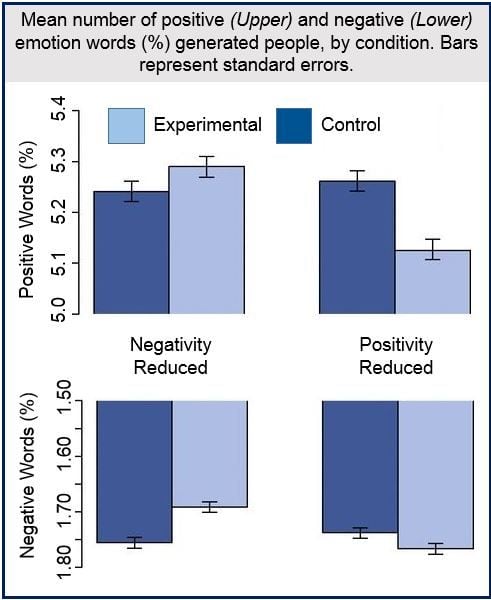

They found that when positive expressions in their News Feeds were reduced, users produced fewer positive posts and a higher number of negative posts, while those with fewer negative expressions in their feeds produced fewer negative and more positive posts.

In the abstract of the paper, the authors concluded:

“These results indicate that emotions expressed by others on Facebook influence our own emotions, constituting experimental evidence for massive-scale contagion via social networks.”

“This work also suggests that, in contrast to prevailing assumptions, in-person interaction and nonverbal cues are not strictly necessary for emotional contagion, and that the observation of others’ positive experiences constitutes a positive experience for people.”

(Source: Proceedings of the National Academy of Sciences)

Manipulating how people feel can potentially lead to devastating consequences. Studies have shown that more than half of all suicides are preceded by a mood disorder.

A person with cardiovascular problems is more likely to suffer complications if they develop depression or their depression becomes more severe.

The researchers did not screen any of the nearly 700,000 guinea pigs for mental health status before the experiment began.

Facebook has more than 1.28 billion users. Carrying out mood experiments on large population groups needs to be strictly monitored, psychologists say.