Chatbots are becoming part of everyday life, from virtual assistants on our phones to automated helpers on websites. But what exactly are chatbots, and how do they work?

This article will explore what chatbots are, the basics of how they function (including concepts like AI, natural language processing, and machine learning), a brief history of their development, modern advances in the technology, real-world examples, and how chatbots are used across different industries.

We’ll break down technical terms into simple language and use examples to make things clear.

Definition

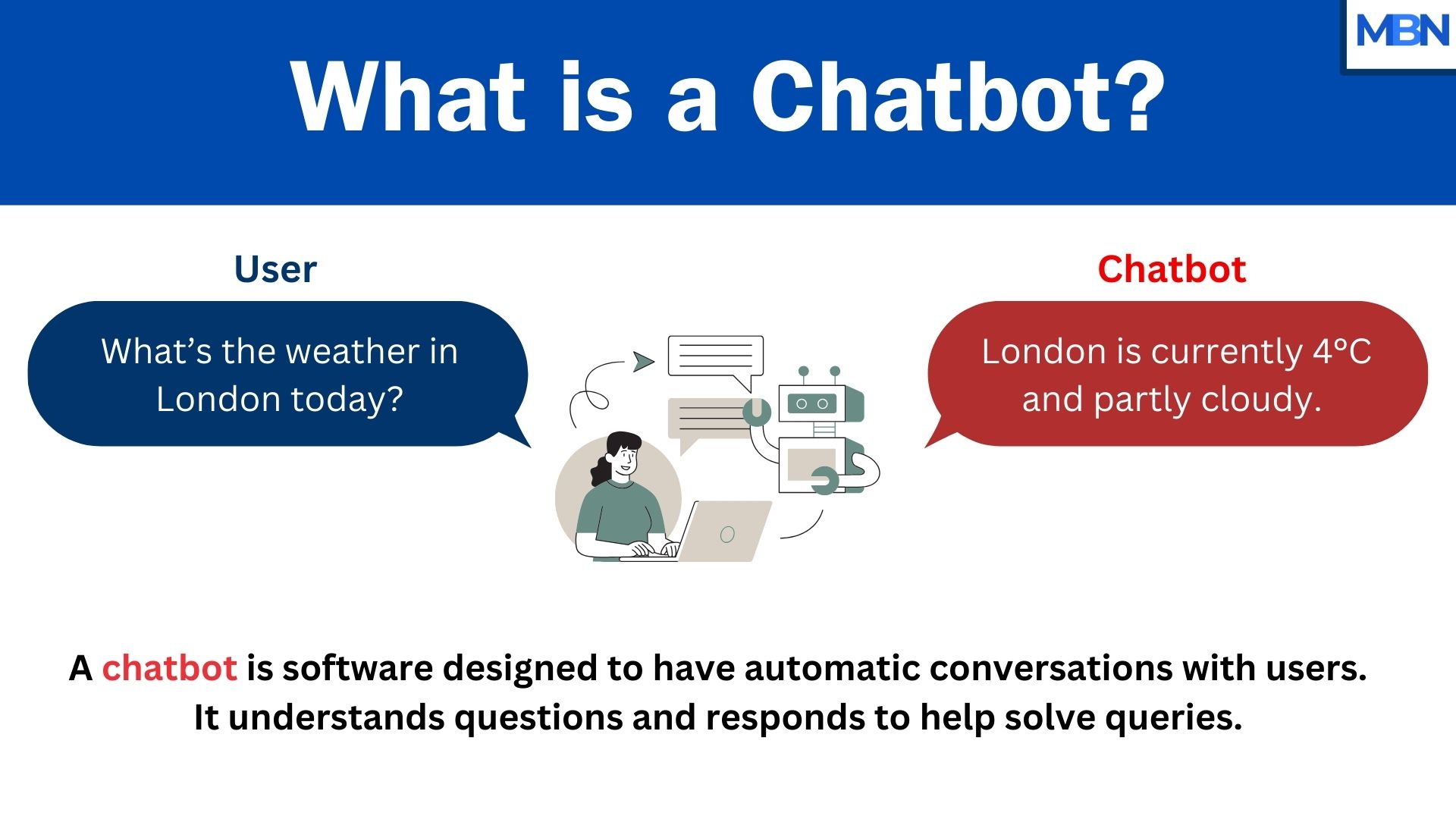

A chatbot is a computer program designed to simulate conversation with human users. In other words, it’s a software application that can talk (or text-chat) with you in a natural way. At the most basic level, it is simply a program that understands what you say (whether you type or speak) and responds in a way that feels like you’re talking to another person.

For example, when you type a question into an online customer support chat and get an answer, or when you ask your phone’s voice assistant about the weather, you’re talking to a chatbot.

Chatbots can be very simple or quite advanced.

A simple chatbot might just answer common questions with single-line responses. A more sophisticated chatbot (sometimes called a virtual assistant) can handle complex requests and even learn from interactions to improve over time.

You’ve probably interacted with chatbots without even realizing it. For example, if you visit a website and a chat box pops up saying “Hello! How can I help you?”, that’s likely a chatbot. If you use an app to request a ride or order food by chatting, there’s a chatbot at work behind the scenes. And familiar voice assistants like Siri on the iPhone or Alexa in Amazon Echo speakers are essentially chatbots that you can talk to by voice.

Because chatbots never need breaks or vacations and never get ill, they make it possible for small and medium-sized enterprises (SMEs) and large corporations to offer 24-hour customer support at scale.

How Do Chatbots Work?

Chatbots work through a combination of predefined rules and artificial intelligence (AI). Some chatbots operate on rules alone: they have a fixed set of questions or keywords they can respond to. For example, a rule-based chatbot might be programmed to respond with store hours whenever it sees a message with the word “hours.” These are like interactive FAQ systems.

Other chatbots use AI to understand language and generate responses more flexibly. AI, in simple terms, is the ability of a computer to perform tasks that normally require human intelligence. Within AI, there is machine learning (ML) – an approach where algorithms learn from data and examples rather than following only hard-coded rules.

Instead of a developer anticipating every possible user question, an AI-driven chatbot can generalize from patterns it learned during training. One important technology that AI chatbots use is Natural Language Processing (NLP).

NLP is a branch of AI that helps computers understand human language – both the words and the meaning behind them. This means an NLP-powered chatbot doesn’t just look for a keyword; it tries to interpret the user’s full sentence to figure out what the user wants. NLP techniques allow chatbots to parse grammar, identify context or intent (for example, recognizing that “I’m looking for a pizza place” is a restaurant search request), and even deal with different phrasings of the same question.
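To make intent recognition more concrete, here is a minimal sketch in Python. It is not how production NLP systems work: it simply compares the words in a message against a few sample phrasings and picks the closest match. The intent names, the sample phrases, and the `detect_intent` function are all made up for illustration.

```python
import re

# Hypothetical sample phrasings for each intent (illustrative only).
EXAMPLES = {
    "restaurant_search": [
        "I'm looking for a pizza place",
        "where can I eat nearby",
        "find me a restaurant",
    ],
    "weather": [
        "what is the weather today",
        "will it rain tomorrow",
    ],
}


def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def detect_intent(message: str) -> str:
    """Pick the intent whose sample phrase shares the most words with the message."""
    words = tokens(message)
    best_intent, best_score = "unknown", 0.0
    for intent, phrases in EXAMPLES.items():
        for phrase in phrases:
            example = tokens(phrase)
            # Jaccard similarity: shared words divided by all distinct words.
            score = len(words & example) / len(words | example)
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent


print(detect_intent("Can you find a pizza place for me?"))
print(detect_intent("Is it going to rain tomorrow?"))
```

Even this toy version copes with different phrasings of the same request, which a single-keyword rule cannot do. Real systems replace the word-overlap score with trained language models.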

Once a chatbot understands a user’s question or message, it then needs to decide how to respond.

Here is where the difference between simple and advanced chatbots really shows:

Rule-Based Chatbots

These follow scripted paths or decision trees. They might have a list of if-else rules. For example: “If the user says ‘account balance,’ then show the balance.” These chatbots can only handle specific scenarios they’ve been pre-programmed for. They don’t truly understand open-ended language; they look for particular triggers.

These task-oriented chatbots focus on one function and provide answers from a fixed set of options. They can be effective for common questions (like a bank’s bot that answers “What time do you open?”) but will not handle unexpected inputs well.

Rule-based bots are currently the most commonly used type of chatbot for customer support FAQs.
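A rule-based bot of this kind can be sketched in a few lines of Python. The keywords, the replies, and the `rule_based_reply` function below are hypothetical and only meant to show the idea of keyword triggers with a fallback.

```python
# Hypothetical rules: each keyword maps to a canned reply.
RULES = {
    "hours": "We are open 9am to 5pm, Monday to Friday.",
    "balance": "Your account balance is shown on the Accounts page.",
}
FALLBACK = "Sorry, I didn't understand that. Try asking about hours or balance."


def rule_based_reply(message: str) -> str:
    """Return the reply for the first keyword found in the message."""
    text = message.lower()
    for keyword, reply in RULES.items():
        if keyword in text:
            return reply
    # No trigger matched, so the bot has nothing useful to say.
    return FALLBACK


print(rule_based_reply("What are your hours?"))
print(rule_based_reply("Can I bring my dog?"))
```

The second question falls through to the fallback message, which is the weakness described above: anything outside the scripted triggers goes unanswered.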

AI-Powered Chatbots

These more advanced chatbots use machine learning and large datasets to generate responses on the fly. They are sometimes called conversational or predictive chatbots, or digital assistants. They are also contextually aware, meaning they can keep track of what was said earlier in the conversation.

AI-powered chatbots add a layer of learning – they can adjust their answers based on what they learned from new data or past conversations. Developers can also continually train AI chatbots by providing them feedback (for instance, flagging when an answer was not useful, so the bot learns not to repeat that).

Because of machine learning, an AI chatbot can get “smarter” and more accurate over time in understanding queries and providing helpful answers. In fact, one study says that certain LLMs are already “strikingly close to human-level performance” in many aspects.

A Brief History of Chatbots

The concept of a machine chatting like a human has been around for decades. In fact, in 1950, British mathematician Alan Turing proposed an idea that became known as the Turing Test. The Turing Test was a thought experiment: if a person has a text conversation with an unseen partner, could they tell if it’s a machine or a human? If the computer could fool the person consistently, it would be a sign of intelligence.

ELIZA

This idea was foundational for thinking about conversational computers and AI, planting the seed for chatbots. The first recognized chatbot program was developed in 1966 by MIT professor Joseph Weizenbaum. He created a chatbot named ELIZA, which famously simulated a psychotherapy conversation.

ELIZA used very simple methods – mainly pattern matching and substitution rules – to give the illusion of understanding. For example, if the user said, “I feel sad,” ELIZA might respond, “Why do you feel sad?” by following a rule to turn statements into questions.
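The pattern-matching idea can be illustrated with a short Python sketch. This is not Weizenbaum's original script. The two patterns, the default reply, and the `eliza_reply` function are assumptions chosen to reproduce the “I feel sad” example.

```python
import re

# Hypothetical ELIZA-style rules: (pattern, response template).
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]
DEFAULT = "Please tell me more."


def eliza_reply(statement: str) -> str:
    """Turn a statement into a question using the first matching pattern."""
    # Drop surrounding spaces and trailing punctuation before matching.
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            # Substitute the captured words into the response template.
            return template.format(match.group(1))
    return DEFAULT


print(eliza_reply("I feel sad."))
```

The program never looks up what “sad” means. It copies the captured words into a template, which is why the illusion of understanding breaks down quickly.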

People were amazed to chat with a computer, but ELIZA wasn’t truly intelligent. It had no understanding of the content; it simply mirrored back parts of what the user said according to scripted patterns. Weizenbaum himself was surprised that some people became emotionally attached to ELIZA, attributing human-like understanding to it.

In reality, ELIZA was limited and would often produce irrelevant or nonsensical responses if taken outside its narrow script, and it certainly couldn’t pass the Turing Test.

Arrival of A.L.I.C.E.

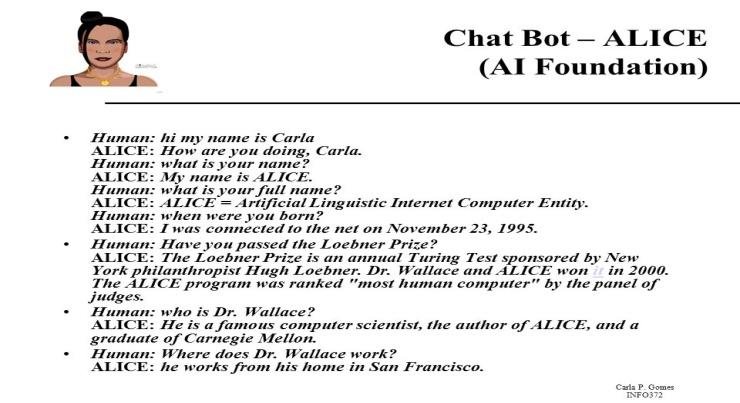

After ELIZA, chatbot development continued slowly but steadily. Another early chatbot was PARRY, created in 1972 by psychiatrist Kenneth Colby, which simulated a person with paranoid schizophrenia. In 1995, Richard Wallace launched a chatbot named A.L.I.C.E. (Artificial Linguistic Internet Computer Entity). A.L.I.C.E. was inspired by ELIZA but more advanced; it used a structured knowledge base of “if someone says X, respond with Y” rules that contributors around the world could add to.

Over time, this made A.L.I.C.E. more flexible than ELIZA, because it had a growing list of possible responses and could cover more topics. It still wasn’t truly understanding language, but it was a step forward in making chatbot replies more varied.

Jabberwacky

In 1997, developer Rollo Carpenter launched a chatbot called Jabberwacky online. Jabberwacky (which later evolved into the web-based Cleverbot in 2008) took a different approach: it learned by having conversations with users. Instead of relying only on predefined responses, it stored user responses and began to reuse them in appropriate contexts.

This means Jabberwacky/Cleverbot essentially learned how people speak by example – a simple form of machine learning. Over the years, interacting with millions of people online, Cleverbot became known for sometimes surprisingly human-like (and sometimes quite odd) conversations.

It still didn’t understand meaning in a deep way, but it could mimic casual conversation fairly well by drawing on its vast memory of dialogues. By the 2000s and 2010s, chatbots started to move into more practical, everyday roles.

SmarterChild

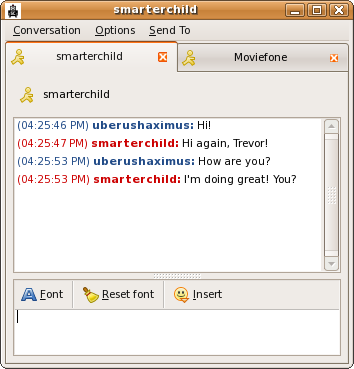

In 2001, a chatbot named SmarterChild became popular on AOL Instant Messenger (an online chat platform of that era).

SmarterChild could talk with users about a variety of things and even play simple text games. Millions of people had SmarterChild as a “buddy” contact for entertainment or quick info (like checking the weather or facts).

This was a hint of how chatbots could integrate into popular communication channels.

Siri

A major leap came in the 2010s with the introduction of voice-activated assistants on consumer devices. In 2011, Apple launched Siri on the iPhone 4S.

Siri let users speak natural language commands and questions (“Do I have any meetings today?” or “Where is the nearest gas station?”) and would respond with answers or by performing actions like setting reminders.

Alexa and Google Assistant

In 2014, Amazon introduced Alexa, which came with the Amazon Echo smart speaker. Alexa, and later Google Assistant (originally launched in 2016 alongside the Allo messaging app), showed that chatbots weren’t just for text chat: they could be embedded in devices around our homes and use voice interaction.

These voice assistants are essentially chatbots with speech recognition (to understand spoken words) and speech synthesis (to talk back).

Siri, Google Assistant, Alexa, and similar products brought chatbots into the mainstream. Suddenly, millions of ordinary users were asking questions to a “talking computer” in their daily lives, something that had been science fiction a few decades prior.

The LLM Revolution

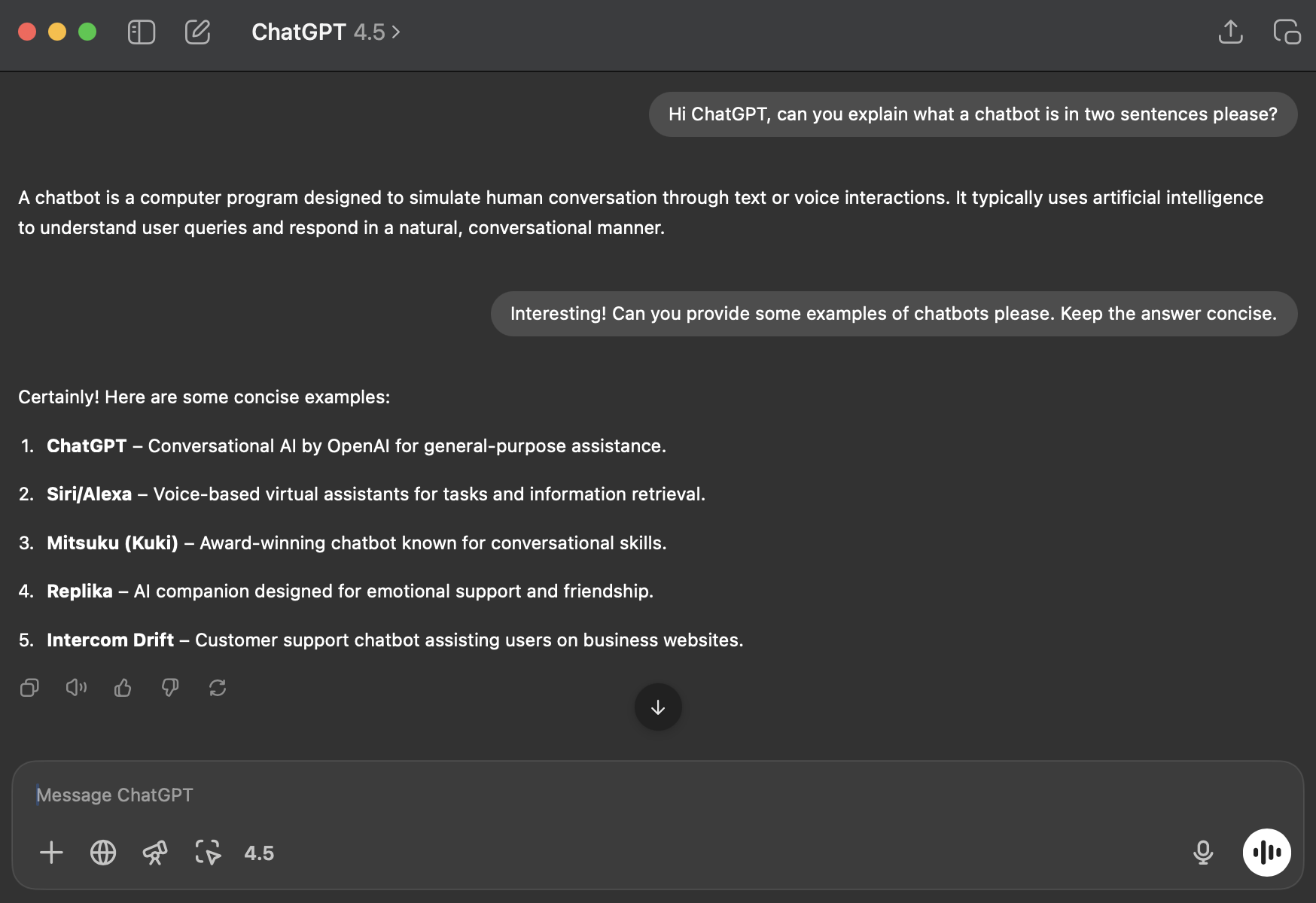

In recent years, chatbots have advanced dramatically thanks to improvements in AI. A landmark event was the release of ChatGPT by OpenAI in late 2022. ChatGPT is a chatbot powered by a type of AI model called a large language model (LLM). It was trained on billions of words of text (like books, articles, and websites) to learn patterns of human language.

As a result, ChatGPT can generate detailed responses and engage in multi-turn conversations on a wide range of topics. For example, you can ask ChatGPT to explain a science concept, help you write a story, or have a conversation about history. It often produces answers that read very naturally, sometimes making it hard to tell that the response was generated by a computer.

This is a big step toward chatbots that can “think” in language more like a human would. It can also produce AI images (below is an example).

However, ChatGPT and similar AI chatbots are not perfect – they don’t truly “understand” facts the way humans do, and they can sometimes give wrong or nonsensical answers with a confident tone. In fact, ChatGPT can occasionally produce false information (a mistake people call an AI hallucination) or show bias in its responses.

Despite these challenges, the success of ChatGPT (it reached tens of millions of users within months of launch) has spurred huge interest in developing even more capable chatbots. It represents how far chatbot technology has come: from ELIZA’s simple scripts to ChatGPT’s sophisticated AI that can write an essay, answer complex questions, or carry on a lengthy dialogue.

Modern Developments in Chatbot Technology

Modern chatbot technology builds upon decades of research in AI and leverages the huge advances in computing power and data availability.

There are a few key developments that make today’s chatbots far more powerful than those of the past:

Conversational AI and Generative Models

Traditional chatbots either chose from pre-written answers or followed flowcharts. Today’s most advanced chatbots use generative AI, meaning they generate responses word-by-word based on probability and patterns, rather than picking a reply from a fixed list. These chatbots are powered by large AI models (like OpenAI’s GPT-4.5, Google’s Gemini, or Anthropic’s Claude) that have learned language by analyzing enormous amounts of text.

This allows them to produce original sentences that may never have been written before, tailored to the user’s query. In short, if you ask something unusual, a generative AI chatbot can attempt an answer on the fly.
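To give a feel for “word-by-word based on probability,” here is a toy sketch in Python. It uses a bigram model, which only looks at the previous word and is vastly simpler than the large language models named above. The tiny corpus and the `train_bigrams` and `generate` functions are made up for illustration.

```python
import random
from collections import Counter, defaultdict

# Tiny made-up corpus; real models learn from vastly more text.
CORPUS = "the cat sat on the mat . the dog sat on the rug ."


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str,
             max_words: int, seed: int = 0) -> list[str]:
    """Build a sentence one word at a time, sampling by observed frequency."""
    rng = random.Random(seed)
    output = [start]
    while len(output) < max_words and follows.get(output[-1]):
        options = follows[output[-1]]
        # Words seen more often after the current word are more likely to be picked.
        nxt = rng.choices(list(options), weights=list(options.values()))[0]
        output.append(nxt)
        if nxt == ".":
            break
    return output


model = train_bigrams(CORPUS)
print(" ".join(generate(model, "the", max_words=8)))
```

Depending on the random seed, the output may be a sentence that never appears in the corpus, such as a cat sitting on the rug. That is a very small version of producing text that was never written before.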

These chatbots can also handle follow-up questions or context. For example, you might ask “What’s the capital of France?”, get the answer “Paris,” and then ask “How many people live there?” – a good AI chatbot will understand “there” means Paris, keeping the context from the previous question.
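One very simplified way to picture context tracking is a bot that remembers the last place it mentioned and fills it in when the user says “there.” The `ConversationContext` class below is a hypothetical sketch in Python. Modern AI chatbots do not work this way internally. They typically read the earlier turns of the conversation together with the new message.

```python
import re
from typing import Optional


class ConversationContext:
    """Remembers the most recent place mentioned in the conversation."""

    def __init__(self) -> None:
        self.last_place: Optional[str] = None

    def remember_place(self, place: str) -> None:
        """Store the place so later questions can refer back to it."""
        self.last_place = place

    def resolve(self, question: str) -> str:
        """Replace the word 'there' with the remembered place, if any."""
        if self.last_place is None:
            return question
        return re.sub(r"\bthere\b", f"in {self.last_place}", question)


context = ConversationContext()
context.remember_place("Paris")  # set after the bot answers "Paris"
print(context.resolve("How many people live there?"))
```

With the place remembered, the follow-up question becomes “How many people live in Paris?”, which the bot can then answer like any standalone question.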

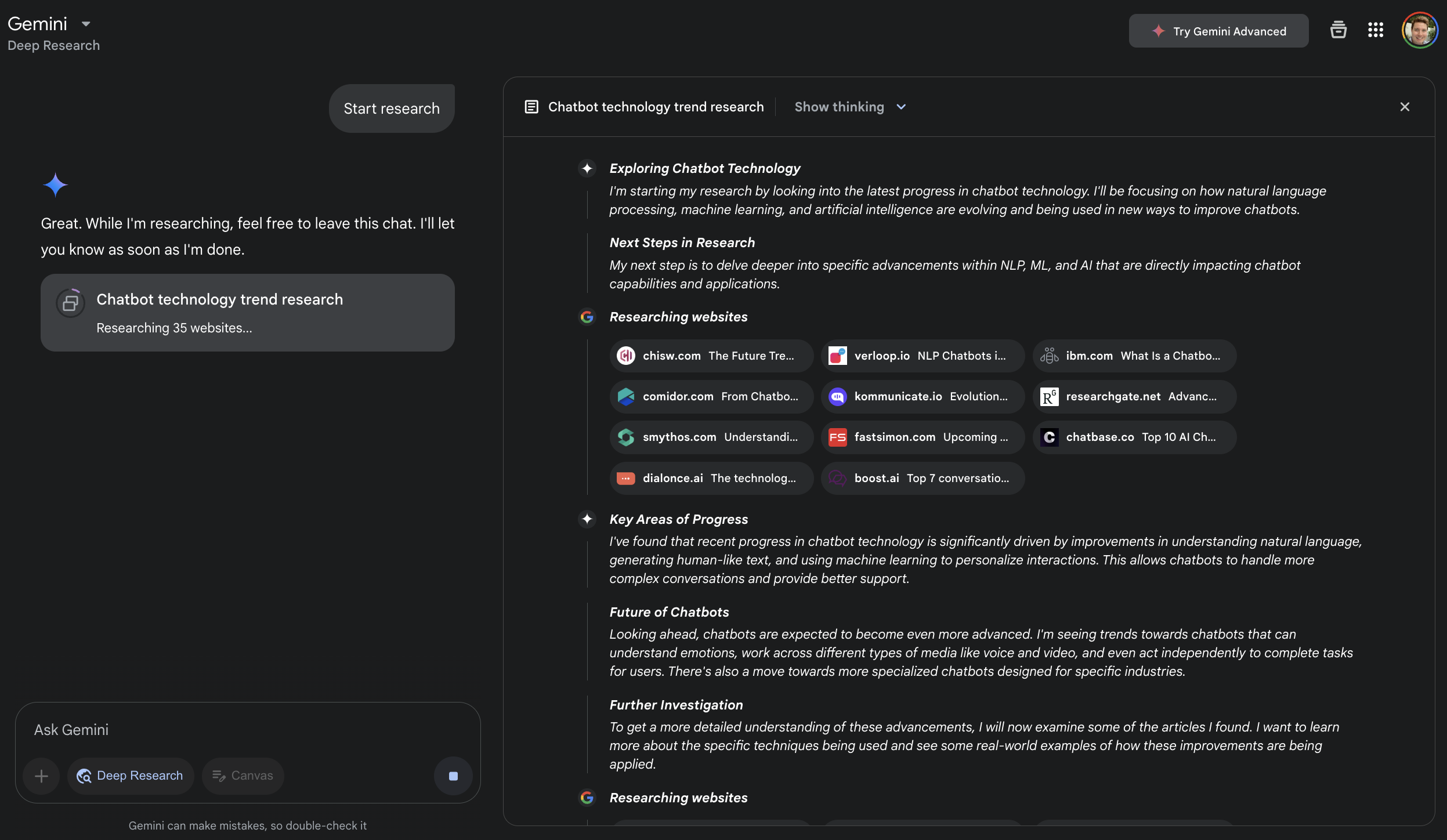

Some models can even conduct research. In the image above, Google’s Gemini is carrying out “Deep Research” on recent chatbot technology and even shows its line of “thinking”.

Improved Natural Language Understanding

Thanks to advanced NLP and specialized AI models, modern bots grasp user inputs much better. They can handle the nuances of human language – different phrasings, slang, or typos – better than older bots. For instance, whether you say “I need help booking a flight” or “Can you help me book a plane ticket?”, a smart travel bot today will recognize both as the same request. Some chatbots can even detect sentiment (are you angry or happy) and adjust their tone accordingly.
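Sentiment detection can be sketched with simple word lists, as in the Python example below. Real chatbots generally use trained models rather than fixed lists. The word sets and the `detect_sentiment` and `reply_with_tone` functions here are assumptions for illustration only.

```python
import re

# Hypothetical word lists; real systems use trained sentiment models.
NEGATIVE = {"angry", "terrible", "awful", "frustrated", "worst"}
POSITIVE = {"great", "thanks", "love", "happy", "awesome"}


def detect_sentiment(message: str) -> str:
    """Classify a message by counting positive and negative words."""
    words = re.findall(r"[a-z']+", message.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def reply_with_tone(message: str, answer: str) -> str:
    """Prefix the answer with wording that fits the detected sentiment."""
    sentiment = detect_sentiment(message)
    if sentiment == "negative":
        return "I'm sorry for the trouble. " + answer
    if sentiment == "positive":
        return "Glad to hear it! " + answer
    return answer


print(reply_with_tone("This is the worst service, I am so frustrated!",
                      "Your refund is on its way."))
```

The answer itself stays the same. Only the framing changes, which is the kind of tone adjustment described above.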

Machine Learning and Self-Learning

Many contemporary chatbots have the ability to learn from new data. This could be through automated training on conversation logs or via active learning (where developers feed the bot corrected answers for cases it got wrong). Some enterprise chatbot platforms allow a bot to automatically improve by analyzing which responses resolved customer inquiries successfully and which did not.

Generative chatbots can’t immediately learn new facts on their own (they rely on training updates from developers), but other systems (like recommendation or support bots) might update their knowledge base continuously. As IBM notes, modern AI chatbots employ algorithms that automatically learn from past interactions how best to answer questions and improve conversation flow.

This means over time a chatbot can get better at its job, much like a human customer service rep gets better with experience.
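As a rough illustration of learning from feedback, the Python sketch below keeps a score for each candidate answer and prefers the one users found most helpful. The `FeedbackBot` class, its method names, and the sample answers are hypothetical. Commercial platforms use far more elaborate methods.

```python
from collections import defaultdict


class FeedbackBot:
    """Chooses among candidate answers using recorded user feedback."""

    def __init__(self, candidates: dict[str, list[str]]) -> None:
        self.candidates = candidates
        # The score for a (question, answer) pair rises when users find it
        # helpful and falls when they do not.
        self.score: dict[tuple[str, str], int] = defaultdict(int)

    def answer(self, question: str) -> str:
        """Return the best-scoring answer; ties go to the earliest option."""
        options = self.candidates[question]
        return max(options, key=lambda a: self.score[(question, a)])

    def record_feedback(self, question: str, answer: str, helpful: bool) -> None:
        """Update the score based on whether the answer resolved the query."""
        self.score[(question, answer)] += 1 if helpful else -1


bot = FeedbackBot({
    "reset password": ["Check the FAQ.", "Use the 'Forgot password' link."],
})
first = bot.answer("reset password")
bot.record_feedback("reset password", first, helpful=False)
print(bot.answer("reset password"))
```

After one piece of negative feedback, the bot switches to the second answer. Repeated over many conversations, the same loop gradually favors the replies that actually resolve questions.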

Multimodal Capabilities

While early chatbots were text-only, many modern chatbots can handle voice input and output. For example, Google Assistant and Alexa can take spoken questions and respond with spoken answers, using speech recognition and synthesis.

Many AI systems can even handle images (for example, you can upload a photo and the chatbot can describe what it shows).

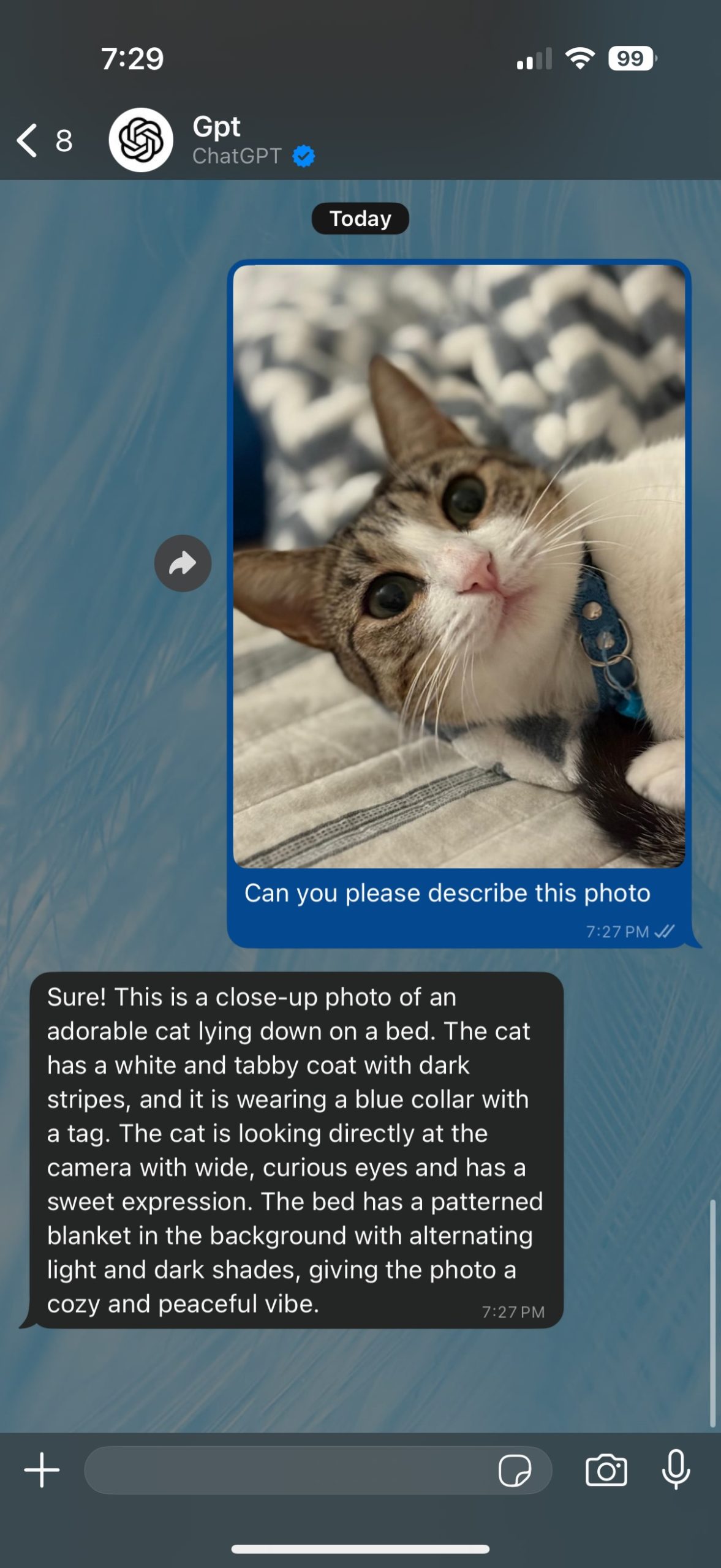

You can interact with ChatGPT on WhatsApp by sending text messages, uploading images, or sending voice notes, and ChatGPT will reply with text responses. In this example, ChatGPT provided a detailed description of the photo of a cat that was sent.

Voice integration in particular is now common – the line between “chatbot” and “voice assistant” has blurred, since they use the same underlying tech with a different input/output method.

Deployment on Various Platforms

Today’s chatbots aren’t confined to a single chat window. They are used in messaging apps (Facebook Messenger bots, WhatsApp business bots), on websites, in mobile apps, and in smart speakers or other internet of things (IoT) devices.

Businesses can integrate chatbots into their customer service platforms so that whether a customer sends a Facebook message or a web chat, the chatbot is there to assist. This broad presence makes the chatbot experience more ubiquitous in daily life.

Context and Personalization

Modern chatbot systems can maintain context over a conversation and personalize responses. Maintaining context means if you ask a series of related questions, the bot remembers what you are talking about. Personalization means the bot can use information it knows about you (with permission) to tailor its answers. For example, a chatbot on a shopping site might recall your previous orders or preferences (“Since you bought sneakers last month, maybe you’d like these socks that are often paired with them”).

Advanced virtual assistants can “learn a user’s preferences over time” to provide recommendations or anticipate needs. This creates a more natural and useful interaction, as if the chatbot “knows” you in some way. All these developments have led to chatbots that feel much more natural and helpful.

However, it’s worth noting that despite these advancements, chatbots are not perfect. They can still make mistakes or misunderstand.

AI models like ChatGPT don’t truly think or understand in a human way; they predict likely responses based on patterns, which can sometimes produce incorrect results.

There are ongoing improvements being made to ensure accuracy, reduce biases, and allow users to better steer or correct chatbot outputs when they go off track.

The trend in chatbot technology is toward more human-like conversation and greater utility. With the rise of AI research and large-scale models, modern chatbots are far more powerful than the scripted bots of the past. They can handle queries across a wide range of domains, and they are becoming common in both consumer gadgets and business tools.

Concerns

However, chatbot development hasn’t come without its fair share of concerns and criticism.

Many experts have been vocal about the need to be careful with the deployment and availability of chatbots.

For example, research conducted by University of Cambridge academic Dr Nomisha Kurian found that AI chatbots have an “empathy gap” that puts young users at risk of distress or harm. Kurian urged developers and policy actors to make “child-safe AI” a priority.

“Children are probably AI’s most overlooked stakeholders,” said Dr Kurian. “Very few developers and companies currently have well-established policies on how child-safe AI looks and sounds. That is understandable because people have only recently started using this technology on a large scale for free. But now that they are, rather than having companies self-correct after children have been put at risk, child safety should inform the entire design cycle to lower the risk of dangerous incidents occurring.”