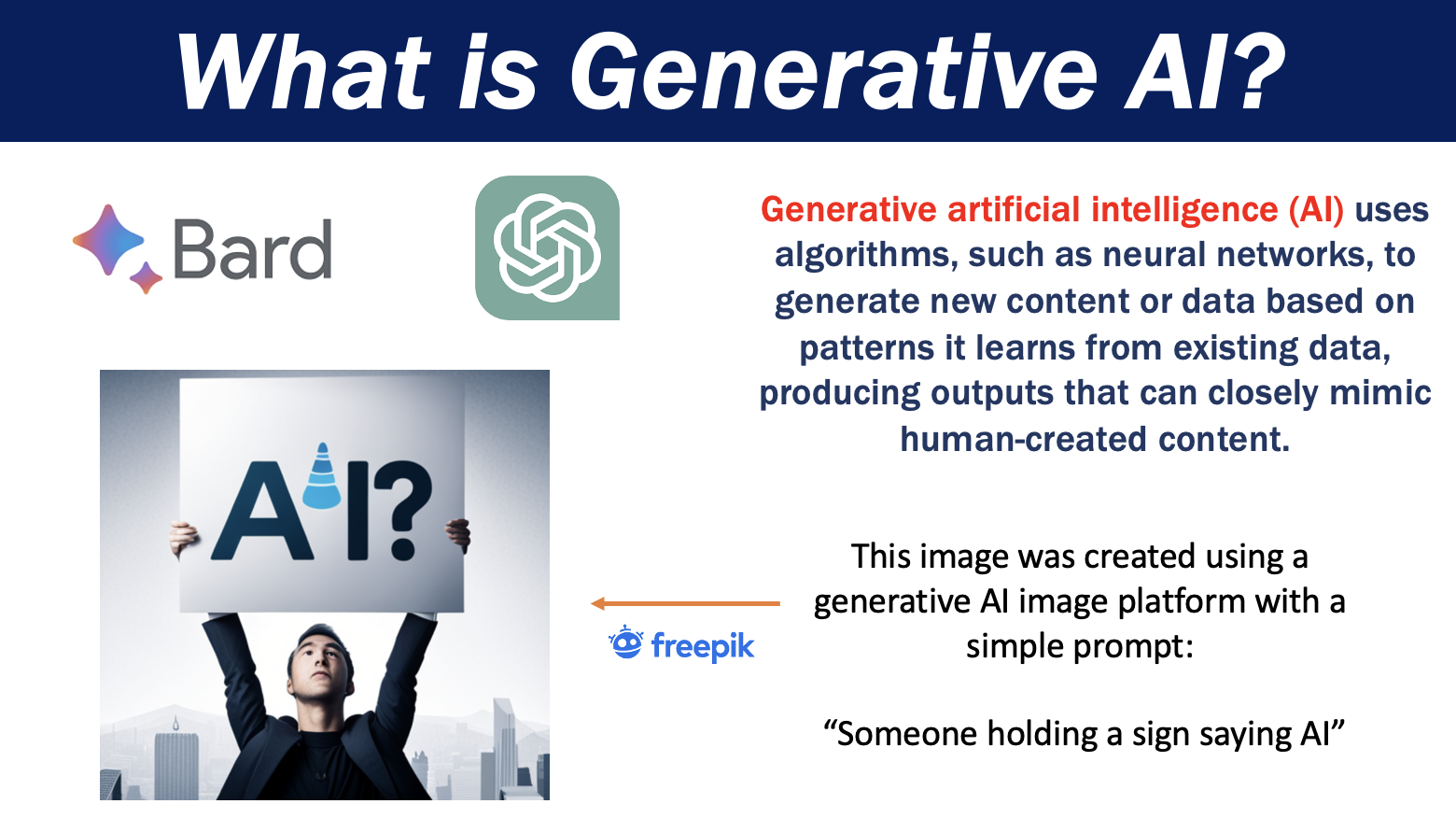

What is Generative AI? Definition and Examples

With the rapid evolution of technology, artificial intelligence (AI) has become a key player, transforming various sectors, including healthcare, finance, and entertainment. Among the various subsets of AI, Generative AI has recently been gaining significant attention, primarily due to its unique ability to create high-quality, original content. The concept of Generative AI, although complex, is reshaping the way we interact with machines and how machines interact with data.

Defining Generative AI

Generative AI is a subset of artificial intelligence that uses machine learning models to generate novel content. This content could be in the form of text, images, audio, or even video. The generated content reflects the statistical properties of the data the model was trained on: it is new, but it resembles that data.

Generative AI systems typically use deep learning models, whose parameters are adjusted during training to fit the training data. Once trained, a model generates new data that exhibits characteristics similar to that training data.

Amazon Web Services offers the following definition of the term:

“Generative AI is a type of artificial intelligence that can create new content and ideas, including conversations, stories, images, videos, and music. Like all artificial intelligence, generative AI is powered by machine learning models—very large models that are pre-trained on vast amounts of data and commonly referred to as Foundation Models (FMs).”

Distinguishing Generative AI from Traditional AI

Artificial Intelligence, or AI, has witnessed a rapid evolution, branching into numerous subfields and applications. Two significant categories in this vast domain are Generative AI and Traditional AI. Understanding the distinction between the two can shed light on the diverse capabilities of AI systems.

Core Objective:

- Traditional AI: The main goal of traditional AI systems is to understand, process, and analyze data. Think of them as detectives; they sift through information, find patterns, and then make informed decisions based on what they’ve observed. Their primary concern isn’t to create something new but to draw meaningful conclusions from existing data.

- Generative AI: Generative AI systems, on the other hand, can be likened to artists. While they also understand and learn from data, their main objective is to create or generate new content. They’re not just analyzing the world; they’re adding to it.

Output Type:

- Traditional AI: These systems often produce answers or labels. For example, when given a photo of a cat, a traditional AI model might label it as “cat”. They’re about identifying, categorizing, or making decisions based on known patterns.

- Generative AI: These models generate new instances of data. Using our previous example, instead of labeling a photo of a cat, a generative AI might create an entirely new image of a cat that doesn’t exist in the real world.

Use Cases:

- Traditional AI: Traditional AI models power many of the services we use today, from search engines that categorize and rank web pages, to recommendation systems that suggest the next song or movie you might enjoy.

- Generative AI: Generative AI’s applications are diverse and ever-growing. Generative models can write songs, paint digital art, design virtual worlds, and draft pieces of writing. They’ve gained popularity in fields like entertainment, design, and research, where creating novel content is essential.

Training and Learning:

- Traditional AI: These models are typically trained on labeled datasets. They learn by matching input data (like pictures) with the correct output (like labels), refining their understanding over time.

- Generative AI: Generative models learn the underlying patterns and structures in data. They don’t just match inputs to outputs; they build a model of what the data itself looks like, which lets them produce new examples that follow the same patterns.
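The contrast between labeling and generating can be sketched with a toy example. This is a minimal illustration rather than a real system: the animal weights are made-up numbers, and the helper names (`fit_gaussian`, `classify`, `generate`) are hypothetical. The traditional task returns a label for an existing input, while the generative task samples a new value from a distribution fitted to the data.

```python
import random
import statistics

# Made-up training data: body weights in kg (illustrative values only).
cats = [3.8, 4.2, 4.5, 5.0, 4.1]
dogs = [18.0, 22.5, 25.0, 30.0, 20.5]


def fit_gaussian(values):
    """Summarize the data by its mean and standard deviation."""
    return statistics.mean(values), statistics.stdev(values)


def classify(weight, cat_model, dog_model):
    """Traditional task: map an input to a label (nearest class mean)."""
    cat_mean, _ = cat_model
    dog_mean, _ = dog_model
    return "cat" if abs(weight - cat_mean) < abs(weight - dog_mean) else "dog"


def generate(model, rng):
    """Generative task: sample a new value from the fitted distribution."""
    mean, stdev = model
    return rng.gauss(mean, stdev)


cat_model = fit_gaussian(cats)
dog_model = fit_gaussian(dogs)
rng = random.Random(0)  # fixed seed so runs are repeatable

print(classify(4.4, cat_model, dog_model))  # a label for an existing input
print(round(generate(cat_model, rng), 2))   # a new, synthetic cat weight
```

Real generative models replace the single Gaussian with a neural network that can capture far richer structure, but the split between "assign a label" and "draw a new sample" is the same.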

In summary, while both Generative AI and Traditional AI have their roots in understanding and processing data, their end goals differ significantly. Traditional AI seeks to understand and categorize the world, while Generative AI aims to contribute to it by creating new, original content.

Understanding Generative AI Basics

Generative AI is like an artist. Instead of paint and brushes, it uses data to create something new. It learns from lots of examples and then tries to make its own. Let’s talk about the tools it uses:

Variational Autoencoders (VAEs):

- Introduction: VAEs are a class of probabilistic generative models that have shown proficiency in generating continuous data. Their design facilitates the learning of complex distributions in an input dataset.

- Architecture: The structure of VAEs pivots around two main components: an encoder and a decoder. The encoder’s task is to digest the input data and translate it into a compact latent space representation, preserving the salient features of the data. Think of this process as a form of data compression, where the essence of the data is encapsulated in a smaller form. Unlike a plain autoencoder, a VAE encoder describes each input as a probability distribution over the latent space (typically a mean and a variance) rather than a single point. The decoder takes a sample from this latent representation and decompresses it, striving to reproduce the original input data with minimal loss of fidelity. New data is generated by sampling a point from the latent space and decoding it.

- Applications: VAEs find extensive application in image generation, denoising, and anomaly detection, among other tasks.
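The encoder, latent sample, and decoder described above can be sketched in a few lines. This is a rough illustration under strong assumptions: the `encode` and `decode` functions use hand-picked arithmetic instead of trained neural networks, the latent space has a single dimension, and all function names are hypothetical. The loss combines reconstruction error with the KL divergence between the encoder's Gaussian and a standard normal prior, which is the usual VAE objective.

```python
import math
import random


def encode(x):
    """Hypothetical encoder: map a 2-D input to the mean and log-variance
    of a 1-D latent Gaussian. The weights are hand-picked, not learned."""
    mu = 0.5 * x[0] + 0.5 * x[1]
    log_var = -1.0  # fixed uncertainty, for illustration only
    return mu, log_var


def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    sigma = math.exp(0.5 * log_var)
    return mu + sigma * rng.gauss(0.0, 1.0)


def decode(z):
    """Hypothetical decoder: map the latent value back to 2-D data space."""
    return [z, z]


def vae_loss(x, x_hat, mu, log_var):
    """Squared reconstruction error plus KL(N(mu, sigma^2) || N(0, 1))."""
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    kl = -0.5 * (1.0 + log_var - mu ** 2 - math.exp(log_var))
    return reconstruction + kl


rng = random.Random(0)
x = [1.0, 1.2]
mu, log_var = encode(x)
z = sample_latent(mu, log_var, rng)
x_hat = decode(z)
print("reconstruction:", x_hat)
print("loss:", vae_loss(x, x_hat, mu, log_var))

# Generating new data: sample z from the prior N(0, 1) and decode it.
print("new sample:", decode(rng.gauss(0.0, 1.0)))
```

In a trained VAE, minimizing this loss over many inputs is what teaches the encoder and decoder their weights; the sketch only shows one forward pass.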

Generative Adversarial Networks (GANs):

- Introduction: GANs, introduced by Ian Goodfellow and his colleagues in 2014, reshaped generative modeling with a game-theoretic approach in which two networks are trained against each other.

- Architecture: The architecture of GANs is best described as a tug-of-war between two neural networks: the generator and the discriminator. The generator’s purpose is to craft new, synthesized instances that mimic genuine data. The discriminator operates as a critic, distinguishing genuine instances from forged ones. As training progresses, this interplay refines both networks: the generator becomes adept at producing increasingly convincing data, and the discriminator sharpens its evaluation skills.

- Applications: GANs are versatile, powering innovations like deepfake creation, art generation, super-resolution, and style transfer, to name a few.
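The tug-of-war can be made concrete by looking at the two loss functions the networks minimize. The sketch below assumes the standard binary cross-entropy formulation with the non-saturating generator loss; the discriminator scores are made-up numbers standing in for the outputs of real networks, and the function names are hypothetical.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: reward D for scoring real data near 1
    and generated data near 0. Inputs are probabilities in (0, 1)."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: reward G when D scores its
    samples near 1 (that is, when D is fooled)."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs at two points in training.
early = {"d_real": [0.9, 0.95], "d_fake": [0.05, 0.1]}  # D easily spots fakes
late = {"d_real": [0.55, 0.5], "d_fake": [0.5, 0.45]}   # D is nearly guessing

for name, scores in (("early", early), ("late", late)):
    print(name,
          "D loss:", round(discriminator_loss(**scores), 3),
          "G loss:", round(generator_loss(scores["d_fake"]), 3))
```

The generator's loss falls as its samples fool the discriminator more often, while the discriminator's loss rises toward the value it would get by guessing. Training alternates gradient updates between the two networks using these losses.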

Transformers:

- Introduction: Unveiled by researchers at Google in 2017, the transformer architecture heralded a paradigm shift in sequential data processing, especially in the realm of natural language processing.

- Architecture: Unlike traditional recurrent models, which read a sequence one step at a time, transformers process all positions of the input in parallel. They rely on self-attention mechanisms that weigh the importance of every part of the input relative to every other part, allowing them to capture dependencies between data points regardless of how far apart they are.

- Applications: The transformer’s strength in handling textual data has given rise to powerful models like BERT, GPT, and T5. These models dominate text generation, translation, summarization, and many other NLP tasks.
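Self-attention is easier to follow with a small worked example. The sketch below implements scaled dot-product attention in plain Python under simplifying assumptions: the token vectors are made-up numbers, and the same vectors serve as queries, keys, and values, whereas a real transformer would first multiply them by learned projection matrices. The function names are illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence.

    Each argument is a list of equal-length vectors, one per position.
    Returns the attended outputs and the attention weights.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this position's query with every position's key.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Weighted average of all value vectors, near or far.
        out = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three token vectors (made-up numbers) used as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how much one position attends to every other position. No row depends on the result of another, which is why the computation can run in parallel across the sequence.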

The Evolution of Generative AI

The journey of Generative AI, much like a seed evolving into a tree, has witnessed several transformative stages. While today’s applications might be seen as miraculous, the technology’s roots date back decades.

Early Beginnings – 1960s:

- The Dawn of Chatbots: One of the earliest examples of generative technology was the chatbot. ELIZA, created by Joseph Weizenbaum at MIT in the mid-1960s, was among the first. Acting as a “Rogerian psychotherapist,” ELIZA could emulate a conversation by rephrasing user input as questions. Although primitive by today’s standards, it marked a beginning for machines that could ‘generate’ responses.
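The rephrasing trick is simple enough to sketch. The following is an illustrative ELIZA-style responder, not Weizenbaum's original script: the rules, the pronoun table, and the function names are all invented for this example. It matches a pattern in the user's statement, swaps the pronouns, and hands the statement back as a question.

```python
import re

# Swap first- and second-person words so the reply points back at the user.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are",
               "you": "I", "your": "my"}

# A few illustrative pattern/response rules (not ELIZA's original script).
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment):
    """Replace pronouns word by word, e.g. 'my job' becomes 'your job'."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement):
    """Return the first matching rule's reply, or a generic prompt."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please tell me more."


print(respond("I feel ignored by my family."))
print(respond("The weather is nice."))
```

Nothing here is learned from data: every reply comes from a hand-written rule, which is the key difference from the statistical models that followed.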

The 1980s and 1990s – Neural Networks:

- Foundations of Deep Learning: During this period, neural networks began gaining attention. They are algorithms loosely modeled on the human brain and designed to recognize patterns. While not strictly generative at first, they laid the groundwork for the more advanced generative models that followed.

2000s – Boltzmann Machines and Autoencoders:

- Boltzmann Machines: These are a type of stochastic recurrent neural network. First proposed in the 1980s, they became practical in the 2000s through restricted variants that were easier to train. They can learn and represent complex distributions and have generative aspects. They contributed to the thinking around how machines could create new data resembling the input.

- Autoencoders: Another step in the generative journey, autoencoders are neural networks trained to reproduce their input. The middle layer compresses the input, and the output layer tries to decompress it, learning efficient data representations in the process. They provided foundational knowledge for more advanced generative models.

2014 – The Game Changer: GANs

- Birth of GANs: Introduced by Ian Goodfellow and his team, Generative Adversarial Networks (GANs) became a landmark in the evolution of generative AI. GANs consist of two neural networks: a generator that creates samples, such as images, and a discriminator that evaluates them. Their adversarial relationship means that as one improves, the other must improve too, leading to increasingly realistic outputs.

- Realistic Generation: Post-GANs, the capability of AI to generate authentic content skyrocketed. It wasn’t just about mimicking conversations or patterns anymore. Generative AI could now produce images, art, videos, and audio clips that were often indistinguishable from real-world content. Deepfakes, artworks, and even music compositions started emerging, showcasing the technology’s potential.

Post-2014 – Advancements and Concerns:

- Expansion of GANs: After their introduction, various GAN architectures and derivatives were proposed, each enhancing the generative capabilities and finding new applications, from drug discovery to fashion design.

- Ethical Implications: With the power of GANs came concerns. Deepfakes raised alarms about misinformation, privacy, and security. As a result, alongside the technology’s evolution, discussions around responsible AI usage became paramount.

In essence, while Generative AI might seem like a product of the last decade, its journey has been long and storied. What began as simple conversational algorithms in the 1960s has now become a powerhouse of creativity and innovation, albeit with its own set of challenges and responsibilities.

How Generative AI Works

The process begins with a prompt that could be in the form of text, image, video, design, or musical notes. AI algorithms then generate new content in response to the input. This could include essays, solutions to problems, or realistic fakes created from pictures or audio of a person.
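The prompt-then-generate loop can be illustrated with a deliberately tiny stand-in for a real model. The sketch below uses a word-level Markov chain, which is far simpler than the neural networks behind modern systems, and a made-up training corpus; the names `train` and `generate` are hypothetical. It shows the same basic flow: learn which words tend to follow which, then extend a prompt one word at a time.

```python
import random
from collections import defaultdict

# Tiny made-up training corpus; real systems learn from vastly more data.
CORPUS = (
    "the cat sat on the mat . the dog sat on the rug . "
    "the cat chased the dog . the dog chased the ball ."
)


def train(text):
    """Record which words follow each word in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(model, prompt, max_words=8, seed=0):
    """Continue the prompt by repeatedly sampling a plausible next word."""
    rng = random.Random(seed)
    output = prompt.split()
    for _ in range(max_words):
        candidates = model.get(output[-1])
        if not candidates:  # unseen word: nothing learned to continue with
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


model = train(CORPUS)
print(generate(model, "the cat"))
print(generate(model, "the dog", seed=1))
```

The output can contain sentences that never appear in the corpus, yet every adjacent word pair does. That is a small-scale version of generating new content that shares the statistical properties of the training data.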

Generative AI Models

Generative AI models use a combination of AI algorithms to represent and process content. To generate text, natural language processing techniques are used to transform raw characters into sentences, parts of speech, entities, and actions. Images are similarly transformed into visual elements.
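As a rough illustration of the first steps in that text pipeline, the sketch below splits raw characters into sentences, breaks each sentence into tokens, and maps each token to an integer id. It is a simplification: the regular expressions and function names are assumptions made for this example, production systems typically use learned subword tokenizers, and later stages such as part-of-speech tagging or entity recognition are not shown.

```python
import re


def split_sentences(text):
    """Split on sentence-ending punctuation followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(sentence):
    """Lowercase and separate words from punctuation marks."""
    return re.findall(r"[a-z0-9']+|[.!?,;]", sentence.lower())


def build_vocab(token_lists):
    """Assign each distinct token an integer id, in order of first appearance."""
    vocab = {}
    for tokens in token_lists:
        for token in tokens:
            vocab.setdefault(token, len(vocab))
    return vocab


text = "Generative AI creates content. It learns patterns from data!"
sentences = split_sentences(text)
token_lists = [tokenize(s) for s in sentences]
vocab = build_vocab(token_lists)
encoded = [[vocab[t] for t in tokens] for tokens in token_lists]

print(sentences)
print(token_lists)
print(encoded)
```

The integer ids are what a model actually consumes; it never sees the raw characters directly.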

The Role of Neural Networks

Neural networks, loosely inspired by the way the human brain works, form the basis of most AI and machine learning applications today. The field accelerated when researchers found a way to run neural networks in parallel across graphics processing units (GPUs), hardware originally built for the computer gaming industry.

Popular Generative AI Interfaces

Once an area of interest only for specialists and academics, generative AI is now increasingly available to the general public via a variety of interfaces. These platforms transform intricate algorithms into user-friendly applications, igniting creativity across various fields. Here are some of the remarkable generative AI interfaces that are currently in use:

Dall-E

Overview: An evolution of OpenAI’s GPT-3 model, Dall-E has been trained to generate images from text descriptions.

Functionality: Dall-E can create diverse images by associating textual prompts with visual components—ask it for a “two-headed flamingo wearing sunglasses,” and it will generate just that.

Use Cases: Artists and designers have been using Dall-E to generate visual ideas, create inventive illustrations, and even design prototypes.

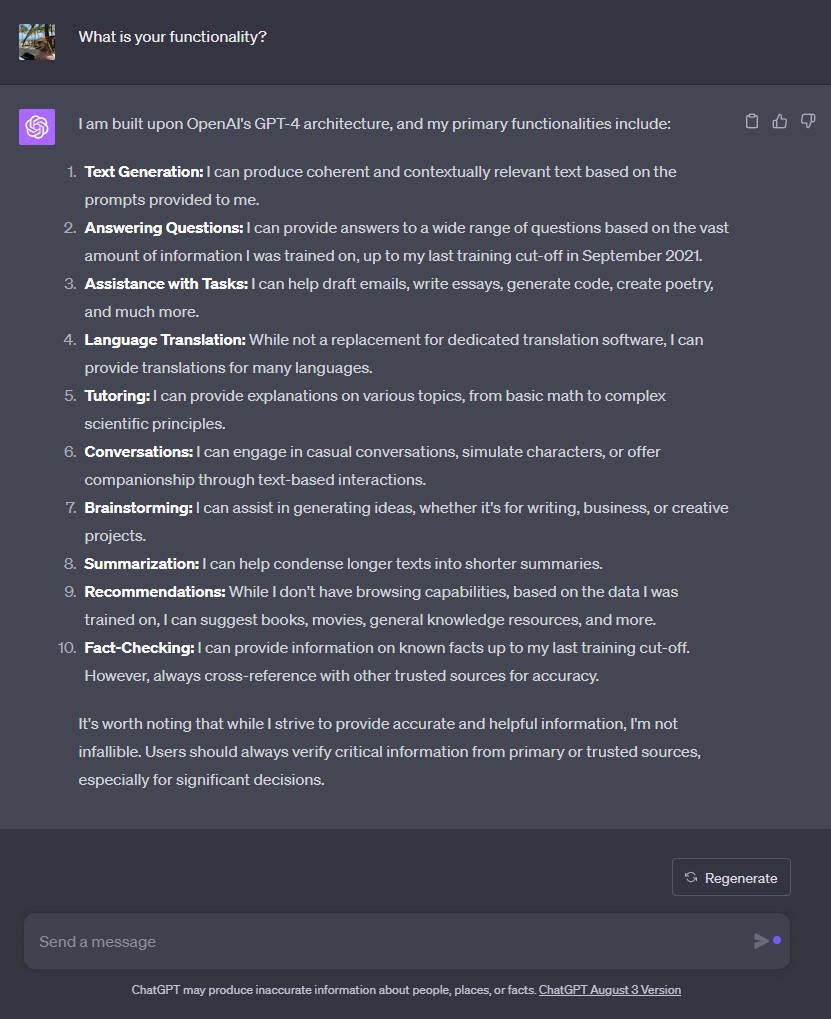

ChatGPT

Overview: An advanced version of OpenAI’s GPT-3.5, ChatGPT is a conversational AI capable of delivering detailed and context-aware dialogues.

Functionality: ChatGPT goes beyond answering questions—it can write essays, compose poetry, simulate characters for video games, and even aid in programming tasks.

Use Cases: ChatGPT has been integrated into chatbots, customer support, content generation, and educational tools to improve user engagement.

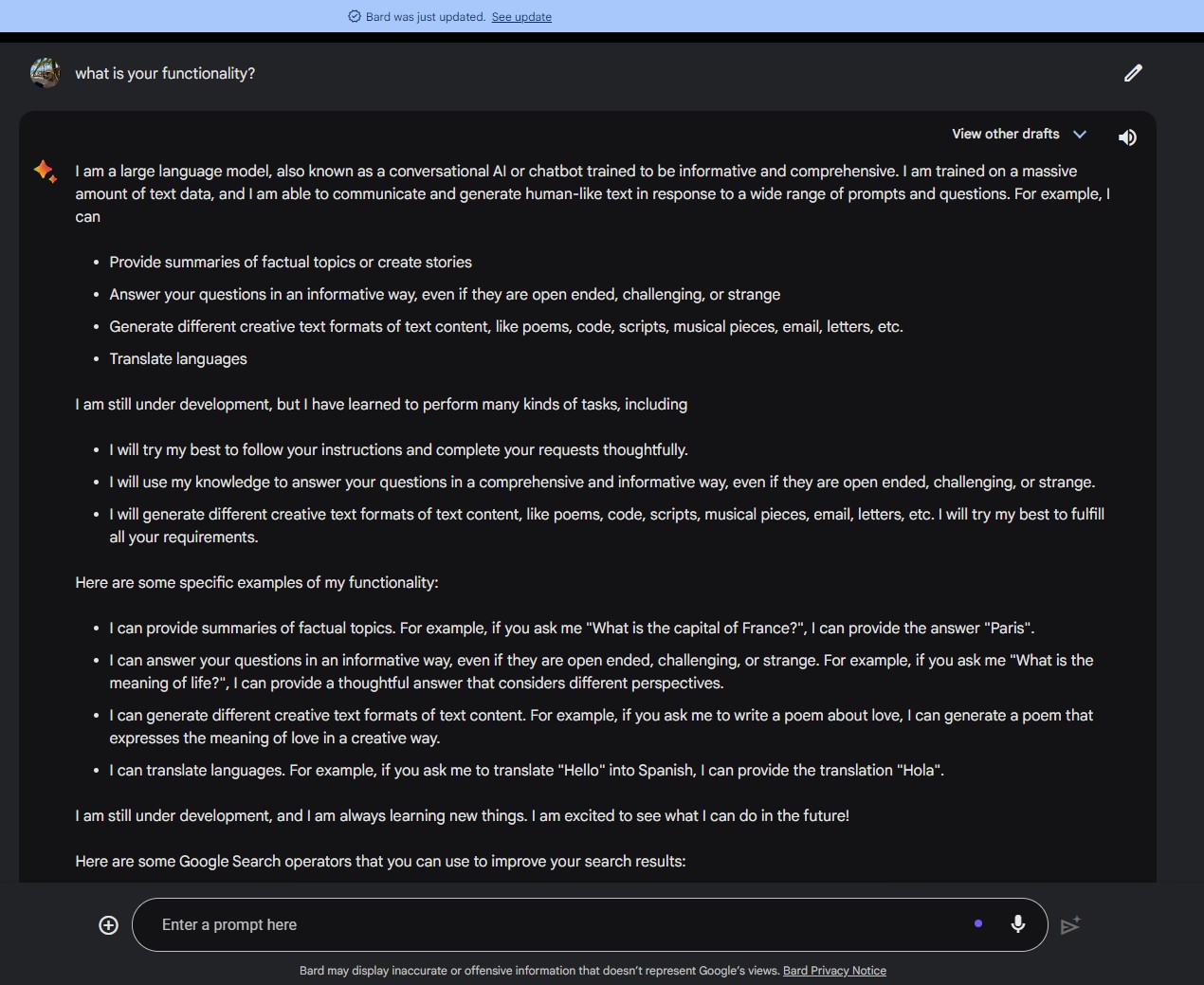

Bard

Overview: Google’s offering, Bard, is built on a simplified version of its expansive language models.

Functionality: Bard can converse and generate human-like text in response to a wide range of prompts and questions.

Use Cases: Bard’s conversational focus makes it suitable for educational applications, brainstorming, and drafting or summarizing text.

Use Cases of Generative AI

Generative AI, with its capability to produce novel content, has been a game-changer across diverse industries. From enhancing customer experience to pioneering scientific breakthroughs, its potential applications seem boundless. Here’s a deeper dive into some of its use cases:

Chatbots for Customer Service

Functionality: Using Generative AI, chatbots can produce context-aware, human-like responses to user queries instead of relying on a fixed set of programmed replies. This results in a more fluid and natural conversation experience.

Industries Impacted: Retail, finance, healthcare, and hospitality, among others, have employed advanced chatbots to streamline customer interactions, reduce wait times, and provide 24/7 support.

Deepfakes and Media Production

Functionality: Generative AI can create or alter video content to make it appear as though a person is saying or doing something they never did—this is commonly referred to as “deepfakes.”

Industries Impacted: While the entertainment industry can utilize this technology for visual effects, editing, and dubbing, there are ethical concerns, especially when deepfakes are used maliciously in political or social contexts.

Photorealistic Art and Design

Functionality: Generative models can produce artwork, designs, or images that are often indistinguishable from those created by humans.

Industries Impacted: Artists, graphic designers, and advertisers can use these AI-generated visuals for campaigns, product design, or digital art installations.

Drug Discovery and Design

Functionality: Generative AI can analyze complex molecular structures and predict new potential drug compounds that could be effective in treating diseases.

Industries Impacted: The pharmaceutical and biotechnology sectors can greatly benefit, potentially reducing the time and cost traditionally associated with drug discovery.

Designing Physical Products

Functionality: Generative AI can brainstorm and optimize designs based on certain criteria, such as maximizing durability while minimizing material use.

Industries Impacted: Industries like automotive, aerospace, and fashion have leveraged generative design to prototype products that are both efficient and aesthetically pleasing.

Music Composition and Sound Design

Functionality: Generative models can create original music or sounds by learning the patterns and structures of existing audio data.

Industries Impacted: Musicians, film producers, and game developers can use AI-generated sounds or compositions to enhance their projects or create unique auditory experiences.

Text Generation and Content Creation

Functionality: Generative AI can draft articles, write poetry, or create narratives based on a given prompt.

Industries Impacted: Media, publishing, and education sectors can utilize this technology for generating content, assisting authors, or creating study materials.

Gaming and Virtual Worlds

Functionality: Generative algorithms can design game levels, characters, or even entire virtual worlds, offering unique and dynamic gaming experiences.

Industries Impacted: The gaming industry, VR/AR developers, and simulation software creators can leverage generative AI for enhanced user engagement and immersion.

Concerns Surrounding Generative AI

Generative AI’s unprecedented capability to generate new content has been both a marvel and a source of anxiety for many. As with any groundbreaking technology, there are ethical, social, and security concerns. Here’s a deeper exploration of the potential issues:

Inaccurate and Misleading Information:

Explanation: Generative AI can inadvertently generate incorrect or misleading information, particularly if trained on flawed datasets.

Implications: Misinformation can be detrimental in contexts like medical advice, financial guidance, or educational content, leading to harmful decisions or misconceptions.

Promoting Plagiarism:

Explanation: As AI can produce vast amounts of text, art, or music resembling existing works, there’s a risk of it generating content that inadvertently copies or closely mimics existing copyrighted materials.

Implications: This raises intellectual property concerns and can undermine the value of original creations, potentially leading to legal disputes.

Disrupting Existing Business Models:

Explanation: Automated content generation might diminish the demand for human creators in areas like journalism, design, and art.

Implications: Job losses and shifts in various industries might occur, necessitating reskilling and reimagining traditional roles.

Generating Fake News and Propaganda:

Explanation: The ability of AI to fabricate believable narratives can be exploited to create fake news stories, doctored videos, or manipulated audio recordings.

Implications: This can skew public opinion, interfere with elections, and escalate societal divides. It makes the discernment of truth increasingly challenging for the general public.

Facilitating Cyber Attacks:

Explanation: Generative AI can be used in sophisticated phishing attacks, creating highly personalized and convincing fake emails or messages to deceive recipients.

Implications: Increased susceptibility to breaches, identity theft, and fraud can arise, making cybersecurity a pressing concern.

Deepfakes and Identity Misrepresentation:

Explanation: Deepfake technology can create realistic-looking video footage of individuals saying or doing things they never did.

Implications: This can be misused for character assassination, blackmail, or fraud. Victims might find it challenging to disprove such fabricated evidence.

Over-reliance on Automated Content:

Explanation: If businesses or individuals over-rely on AI-generated content, there’s a potential loss of human touch, creativity, and context.

Implications: This can lead to homogenized content, devoid of cultural nuances or human empathy, potentially alienating audiences.

Ethical Dilemmas:

Explanation: Generative AI might produce content that is offensive, biased, or inappropriate due to biases in its training data.

Implications: Such outputs can perpetuate stereotypes, cause public outrage, and harm the reputation of organizations using the technology.

Data Privacy Concerns:

Explanation: Training generative models often requires vast amounts of data. There’s a risk of misuse or unauthorized access to sensitive information.

Implications: Individuals’ privacy might be compromised, leading to trust issues with technology providers.

How Generative AI is Changing Industries

In the healthcare industry, generative AI is being used to create personalized treatment plans, develop new drugs, and improve the accuracy of diagnoses. For example, it can help analyze medical images to identify tumors or other abnormalities. It can also generate synthetic data for training machine learning models, which in turn supports more accurate diagnoses and treatments.

In the manufacturing industry, generative AI is being used to design new products, optimize production processes, and improve quality control. For example, generative AI can be used to create 3D models of products, which can then be used to simulate how the products would perform in the real world. This can help to identify potential design flaws and improve the overall performance of the product.

In the financial industry, generative AI is being used to create financial models, detect fraud, and personalize investment portfolios. For example, generative AI can be used to analyze historical financial data to identify patterns and trends. This information can then be used to create financial models that can help to predict future market movements.

In the retail industry, generative AI is being used to create personalized recommendations, optimize inventory management, and improve customer service. For example, generative AI can be used to analyze customer purchase history to identify products that they are likely to be interested in. This information can then be used to create personalized recommendations that can help to increase sales.

In the media and entertainment industry, generative AI is being used to create new content, such as images, videos, and music. It can also be used to personalize the user experience, such as by recommending movies or TV shows that the user is likely to enjoy. For example, generative AI can be used to create realistic images of people and objects, which can then be used in movies and TV shows. It can also be used to generate music that is tailored to the user’s individual preferences.

These are just a few of the many ways that generative AI is being used to help people across different industries. As the technology continues to develop, we can expect to see even more innovative and groundbreaking applications of generative AI in the years to come.

Conclusion

Generative AI is a powerful tool that holds immense potential for a variety of industries. However, it’s crucial to understand its complexities, benefits, and challenges to harness its capabilities effectively. As the technology continues to evolve, it is likely to transform the way we generate and interact with content, offering new opportunities for innovation and creativity.