Stephen Hawking, Bill Gates and Elon Musk have been nominated for the embarrassing title of Luddite of the Year by the Information Technology & Innovation Foundation, an American non-profit think tank that focuses on public policies that encourage technological innovation.

It is ironic, and also a bit strange, that an eminent theoretical physicist, the founder of the Microsoft Corporation who is also the world’s richest person, plus the founder of a rocket company and electric car firm – who aren’t exactly technophobes and have done much for the advancement of science and technology – should be put forward as killers of new technology.

The Luddites were 19th-century skilled textile workers – British protesters who did not like the new labour-saving devices that were coming onto the market during the industrial revolution, such as stocking frames, power looms and spinning frames.

Does this cartoon remind you of Stephen Hawking, Elon Musk and Bill Gates?

The Luddites were afraid of losing their jobs, and being replaced by badly-paid workers and machines.

The Luddite Movement, which expanded across Northwestern England, became quite vicious, so much so that the Government had to call in the army to suppress it.

Today, the term ‘Luddite’ means a small-minded person who wants to keep things as they are and does not like (even despises) new machines, devices and scientific progress.

According to Oxford Dictionaries, today a Luddite means:

“A person opposed to increased industrialization or new technology, e.g. ‘a small-minded Luddite resisting progress’.”

So, the Information Technology & Innovation Foundation sees Stephen Hawking, Bill Gates and Elon Musk as small-minded technophobes who hate new technology.

They expressed concern about artificial intelligence

Professor Hawking said last year:

“The development of full artificial intelligence could spell the end of the human race.”

He wonders whether artificial intelligence (AI) would take off on its own – re-designing itself at an ever-increasing rate. “Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded,” Prof. Hawking added.

Humans evolve biologically – slowly over hundreds of thousands of years. AI will be upgrading itself at a faster rate than we can evolve. Prof. Hawking wonders what might happen if AI one day becomes smarter than us.

In 2014, in an interview during the AeroAstro Centennial Symposium at MIT, where people were talking about AI, space exploration, the colonisation of Mars, and computer science, Elon Musk said:

“I think we should be very careful about artificial intelligence. If I had to guess at what our biggest existential threat is, it’s probably that. So we need to be very careful. I’m increasingly inclined to think that there should be some regulatory oversight, maybe at the national and international level, just to make sure that we don’t do something very foolish.”

In an interview with the BBC, Bill Gates said:

“I am in the camp that is concerned about super intelligence. First the machines will do a lot of jobs for us and not be super intelligent. That should be positive if we manage it well.”

“A few decades after that though the intelligence is strong enough to be a concern. I agree with Elon Musk and some others on this and don’t understand why some people are not concerned.”

Elon Musk believes AI should be regulated by a government or international body.

Are you a Luddite if you express concern?

The Information Technology & Innovation Foundation (ITIF) cites Hawking, Gates and Musk as potential Luddite champions because they have expressed concern that in the not-so-distant future humans could lose control of AI, thus creating an existential threat.

According to an ITIF report, for the past two centuries there has been widespread paranoia about evil machines in popular culture “and these claims continue to grip the popular imagination, in no small part because these apocalyptic ideas are widely represented in books, movies, and music.”

Just last year, we watched several blockbuster movies that portrayed high-tech as a threat, including Ex Machina, Terminator: Genisys, and Avengers: Age of Ultron.

Bill Gates is concerned about what AI will be capable of in a few decades’ time. He cannot understand why some people are not concerned about this.

A Neo-Luddite opposes technological and scientific advances

ITIF claims it gives the Luddite of the Year award to individuals who aim to “foil technological progress” by opposing advances in tech and science. Is ITIF serious? Does it really view the world’s most famous astrophysicist, one of the major contributors to computer technology worldwide, and a pioneering rocket and battery-car inventor/businessman, as people bent on stopping progress?

The 19th-century Luddites wielded clubs and sledgehammers, but Neo-Luddites have a considerably more powerful weapon, ITIF claims – ‘bad ideas’. “For they work to convince policymakers and the public that innovation is the cause, not the solution to some of our biggest social and economic challenges, and therefore something to be thwarted.”

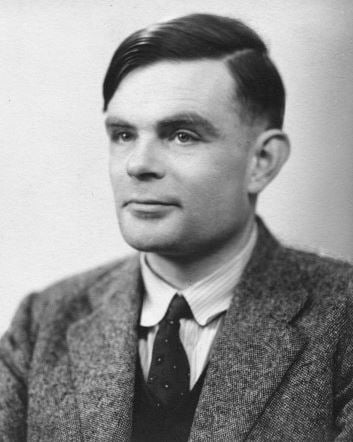

Alan Turing (1912-1954), a pioneering British computer scientist, mathematician, logician, cryptanalyst and theoretical biologist, considered by many to be the father of theoretical computer science and artificial intelligence, wrote in 1950: “I believe that at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.”

ITIF added:

“Indeed, the neo-Luddites have wide-ranging targets, including everything from genetically modified organisms to new Internet apps, artificial intelligence, and even productivity itself. In short, they seek a world that is largely free of risk, innovation, or uncontrolled change.”

ITIF insists that to say that smart devices – those with AI installed – could one day be clever enough to conquer the world is to misunderstand what AI is all about and where it stands at the moment.

AI poses no threat this century, ITIF believes

ITIF doubts that AI will ever become completely autonomous. If it does, the Foundation is sure this will not happen within the next ten years, but rather in 100 or more years’ time.

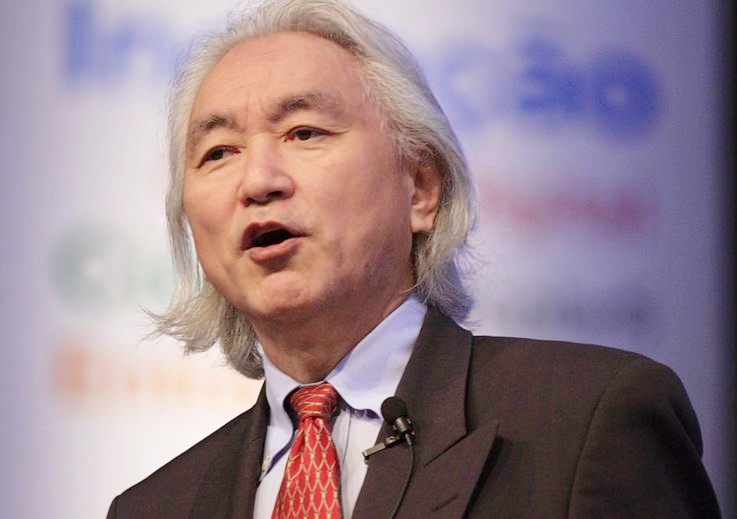

Michio Kaku, an American futurist, theoretical physicist and popularizer of science, and a Professor of Theoretical Physics at the City College of New York, once said: “But on the question of whether the robots will eventually take over, he [Rodney A. Brooks] says that this will probably not happen, for a variety of reasons. First, no one is going to accidentally build a robot that wants to rule the world. That is like someone accidentally building a 747 jetliner. Plus, there will be plenty of time to stop this from happening. Before someone builds a ‘super-bad robot’, someone has to build a ‘mildly bad robot’, and before that a ‘not-so-bad robot’.”

It is premature to be concerned about Skynet-type scenarios with self-aware AI, the Foundation says.

How can policymakers, scientists and the general public support more funding for AI research if they are being bombarded with scary doomsday sci-fi scenarios, ITIF asks.

A sustained campaign against artificial intelligence means the kiss of death for AI research and development, and also for ways to control it in a responsible way, the Foundation adds.

ITIF wrote:

“What legislator wants to be known as ‘the godfather of the technology that destroyed the human race’? (On the other hand, if we are all dead, then one’s reputation is the last of one’s worries.)”

People wanting to vote should go to this survey page.

What is artificial intelligence? (Super-simple explanation)

Artificial intelligence, known as AI or machine intelligence, was defined by John McCarthy in his 1955 Proposal for the Dartmouth Summer Research Project On Artificial Intelligence, as “making a machine behave in ways that would be called intelligent if a human were so behaving.” Since McCarthy said that, several distinct types of AI have been elucidated. The two main types are strong and weak AI:

Strong AI: computer-based AI that can reason and solve problems. This type of AI is said to be sentient (self-aware). Theoretically, there are two types of strong AI: human-like and non-human-like.

Weak AI: this is a computer-based intelligence that cannot really reason and solve problems. A machine with weak AI might act as if it were intelligent, but it would not be sentient or possess true intelligence.

BBC Video – What is Artificial Intelligence?