Cambridge researchers have developed two new systems which use deep learning techniques to help autonomous vehicles recognise their location and surroundings.

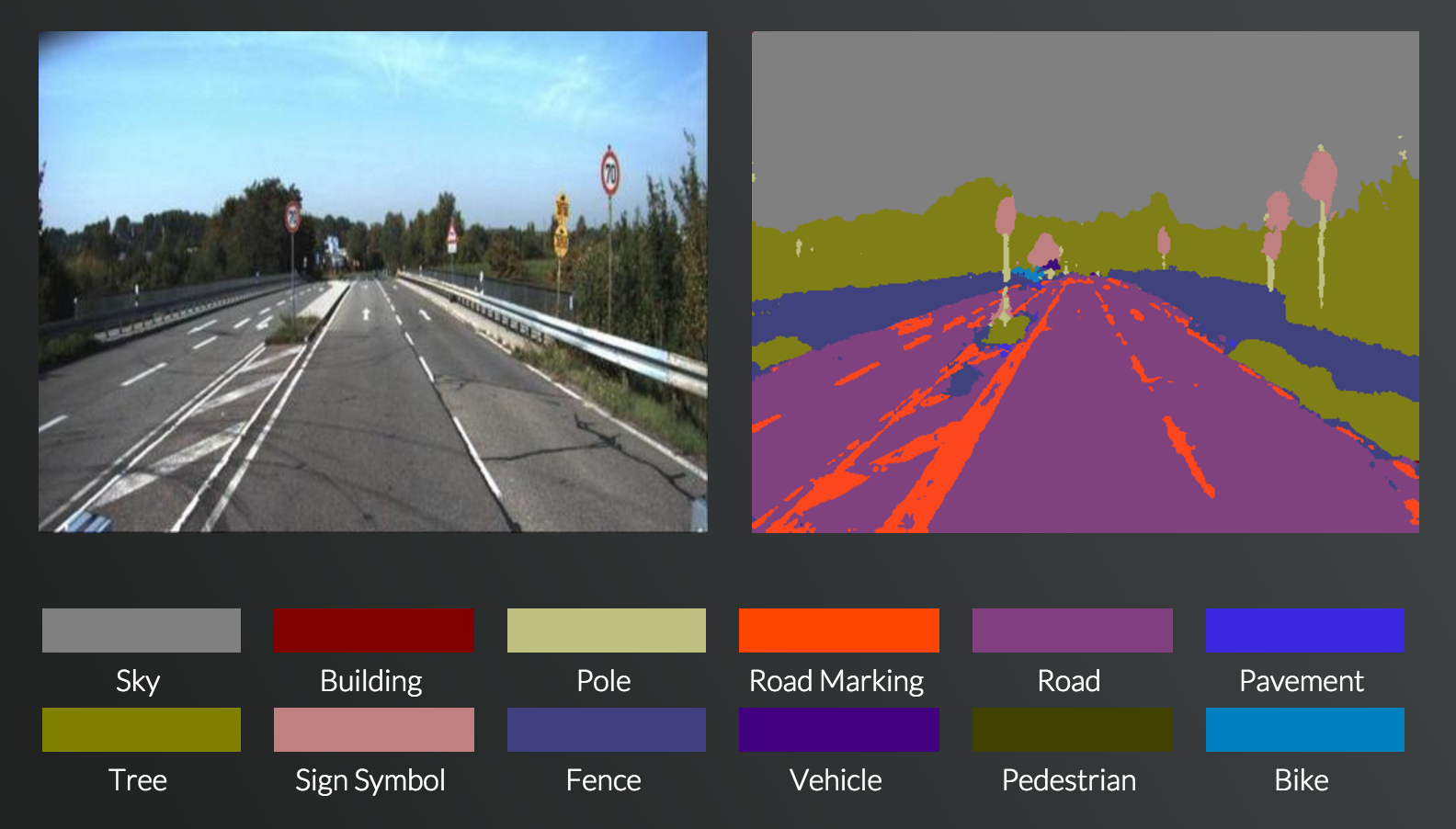

The first system, called SegNet, can take an image of a street scene and, in real time, classify what it sees into categories such as roads, street signs, buildings, pedestrians and cyclists. It does not rely on expensive laser- or radar-based sensors, but simply uses images captured by a camera or a smartphone.
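To give a feel for what classifying a scene pixel by pixel involves, here is a minimal Python sketch. It is not SegNet's code: it assumes that some network has already produced one score per category for every pixel, and it simply picks the highest-scoring category. The category list, the `label_pixels` function and the toy scores are all made up for illustration.

```python
# Hypothetical category names, borrowed from the examples in the article.
CLASSES = ["road", "street sign", "building", "pedestrian", "cyclist"]


def label_pixels(scores):
    """Turn per-pixel category scores into a per-pixel label map.

    scores[row][col] is a list holding one score per entry in CLASSES.
    """
    return [
        # For each pixel, find the index of the highest score and look up its name.
        [CLASSES[max(range(len(px)), key=px.__getitem__)] for px in row]
        for row in scores
    ]


# A made-up 2x2 "image" of scores, standing in for a network's output.
toy_scores = [
    [[0.9, 0.0, 0.1, 0.0, 0.0], [0.1, 0.7, 0.2, 0.0, 0.0]],
    [[0.8, 0.0, 0.0, 0.1, 0.1], [0.0, 0.0, 0.1, 0.2, 0.7]],
]

label_map = label_pixels(toy_scores)
for row in label_map:
    print(row)
```

A real system would produce these scores with a trained deep network over a full-resolution frame; the sketch only shows the final step of turning scores into labels.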

The researchers say that the system has been successfully tested on both city roads and motorways.

SegNet, which ‘learns by example’, was ‘trained’ on a total of 5000 images in which undergraduate students had manually labelled every pixel. Each image took about half an hour to complete.
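The scale of that labelling effort follows from the two figures quoted. A quick back-of-the-envelope calculation in Python, treating "about half an hour" as exactly 0.5 hours (an approximation), puts it at roughly 2,500 hours of manual work:

```python
IMAGES = 5000           # images labelled, as stated in the article
HOURS_PER_IMAGE = 0.5   # "about half an hour" each, so this is approximate

total_hours = IMAGES * HOURS_PER_IMAGE
print(f"Roughly {total_hours:.0f} hours of manual labelling")
```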

“It’s remarkably good at recognising things in an image, because it’s had so much practice,” said Alex Kendall, a PhD student in the Department of Engineering. “However, there are a million knobs that we can turn to fine-tune the system so that it keeps getting better.”

So far the system has been primarily trained in highway and urban environments, so it “still has some learning to do for rural, snowy or desert environments.”

The picture above demonstrates how SegNet can classify objects from a photo. Source: http://mi.eng.cam.ac.uk/projects/segnet/

SegNet is not yet at a point where it can be used to completely control an autonomous vehicle, but the researchers believe that it could be used as a handy ‘warning system’.

“Vision is our most powerful sense and driverless cars will also need to see,” said Professor Roberto Cipolla, who led the research. “But teaching a machine to see is far more difficult than it sounds.”

Kendall and Cipolla also created a localisation system which accurately pinpoints a user’s location from a single colour image. The researchers say that the system is ‘far more accurate’ than GPS and ‘works in places where GPS does not, such as indoors, in tunnels, or in cities where a reliable GPS signal is not available.’

“Work in the field of artificial intelligence and robotics has really taken off in the past few years,” said Kendall. “But what’s cool about our group is that we’ve developed technology that uses deep learning to determine where you are and what’s around you – this is the first time this has been done using deep learning.”

“In the short term, we’re more likely to see this sort of system on a domestic robot – such as a robotic vacuum cleaner, for instance,” said Cipolla.

“It will take time before drivers can fully trust an autonomous car, but the more effective and accurate we can make these technologies, the closer we are to the widespread adoption of driverless cars and other types of autonomous robotics.”