- OpenAI Launches “o1” Model: The o1 model introduces advanced reasoning capabilities, surpassing previous versions in tasks like math, coding, and science.

- Improved Problem-Solving: o1 uses a chain of thought process to solve complex multi-step problems, simulating human-like reasoning.

- Top-Tier Performance: Ranks in the 89th percentile on Codeforces and solves 83% of the problems on a qualifying exam for the International Mathematical Olympiad.

- Reinforcement Learning: Trained with reinforcement learning to refine its reasoning over time, leading to better accuracy in scientific and technical fields.

- Early Days: The o1 model can’t browse the web or analyze files yet.

OpenAI has unveiled its latest AI model, OpenAI o1, signaling a significant advancement in AI’s ability to reason through complex tasks.

The new model, previously codenamed “Strawberry,” is designed to solve challenging problems across math, science, and coding by mimicking human-like reasoning.

This development comes at a time when competition in the AI space is intensifying, with companies like Google and Anthropic also working on AI systems with similar capabilities.

“As an early model, it doesn’t yet have many of the features that make ChatGPT useful, like browsing the web for information and uploading files and images,” OpenAI said. “But for complex reasoning tasks this is a significant advancement and represents a new level of AI capability. Given this, we are resetting the counter back to 1 and naming this series OpenAI o1.”

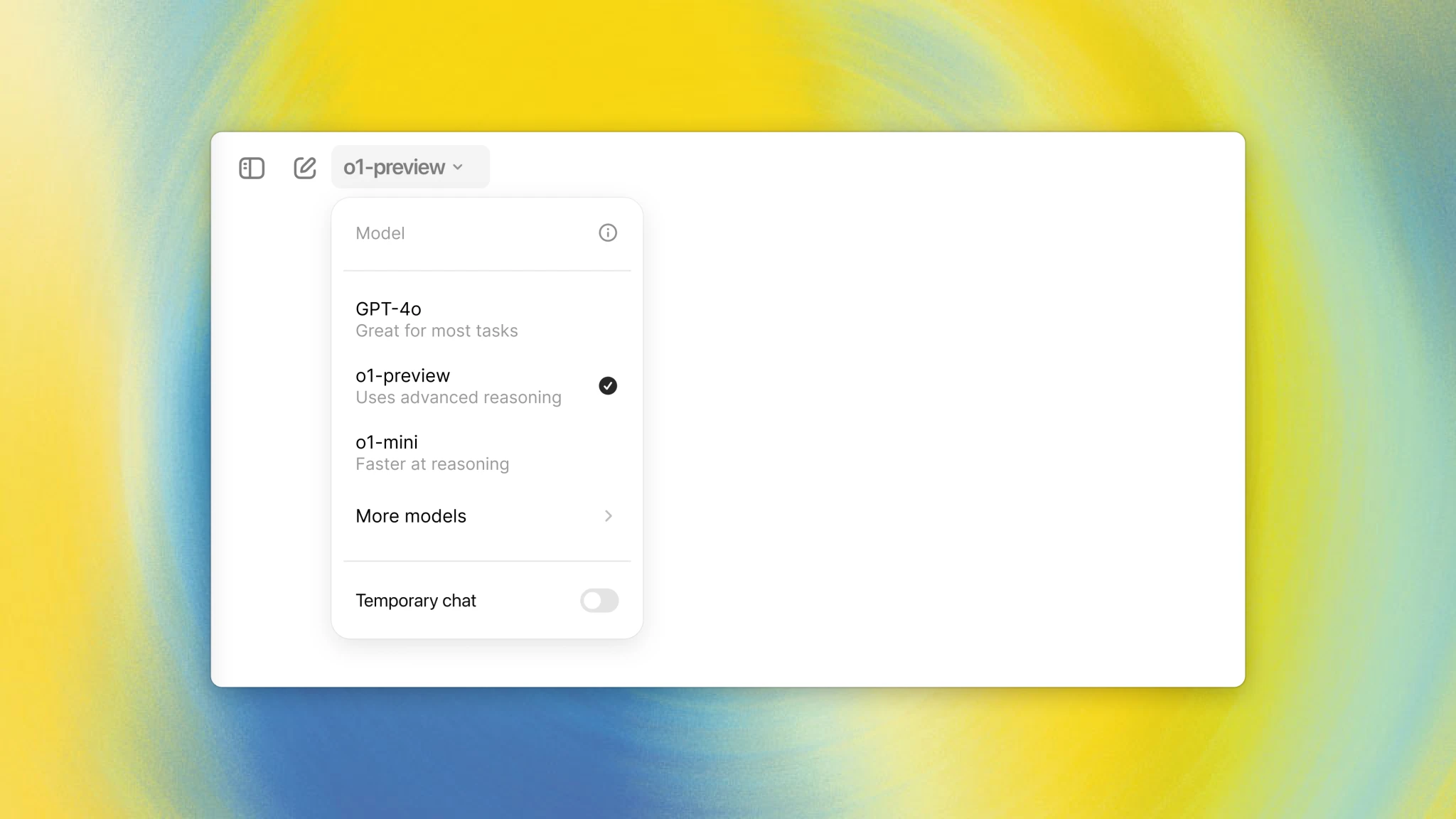

OpenAI also unveiled o1-mini, a “faster, cheaper reasoning model that is particularly effective at coding.”

We’re releasing a preview of OpenAI o1—a new series of AI models designed to spend more time thinking before they respond.

These models can reason through complex tasks and solve harder problems than previous models in science, coding, and math. https://t.co/peKzzKX1bu

— OpenAI (@OpenAI) September 12, 2024

An Overview of the OpenAI o1 Model

The o1 model is part of a new series of AI systems that OpenAI describes as a major leap forward in reasoning abilities. Unlike the earlier models behind ChatGPT, which begin responding to user queries immediately, the o1 model takes more time to deliberate before delivering its answers. This slower, more deliberate process allows it to solve more complex, multi-step problems, such as advanced math questions or intricate coding tasks.

The model is designed to work through problems in a way that closely resembles human reasoning. For example, it can break down a question into smaller, manageable parts and assess various approaches before choosing the best path to a solution.

OpenAI refers to this as “chain of thought” reasoning, where the model effectively simulates thinking through each step of a problem.

Jakub Pachocki, OpenAI’s chief scientist, emphasized that the o1 model represents a shift from how previous models like GPT-4o worked. “With previous models like ChatGPT, you ask them a question and they immediately start responding,” Pachocki was quoted by The New York Times as saying. “This model can take its time. It can think through the problem — in English — and try to break it down and look for angles in an effort to provide the best answer.”

The o1 model has been positioned by OpenAI as a tool capable of performing tasks that require careful reasoning and logical thinking. This makes it particularly valuable in fields like physics, chemistry, and biology, where multi-step problem-solving is essential. During internal tests, OpenAI demonstrated that the o1 model performed similarly to PhD students on benchmark tasks in these disciplines.

In one particularly striking example, the o1 model solved 83% of the problems in a qualifying exam for the International Mathematical Olympiad (IMO), a vast improvement over the 13% success rate of GPT-4o. The model has also proven adept at coding, reaching the 89th percentile in Codeforces programming competitions, where developers solve algorithmic challenges.

This capability has clear applications for software developers, researchers, and even healthcare professionals. In healthcare, for example, the o1 model could help researchers analyze complex data sets, such as cell sequencing information, or assist doctors by diagnosing illnesses based on patient reports. Similarly, physicists could use the model to generate complicated mathematical formulas, potentially speeding up research in areas like quantum mechanics.

One of the more exciting aspects of o1 is its ability to self-check its reasoning. When given a problem, the model evaluates multiple approaches and fact-checks its answers against the input data, reducing the risk of generating incorrect or misleading information — a known issue with earlier AI systems. This self-correction mechanism marks a significant improvement in AI’s ability to handle tasks where factual accuracy is crucial.

OpenAI o1 codes a video game from a prompt. pic.twitter.com/aBEcehP0j8

— OpenAI (@OpenAI) September 12, 2024

Reinforcement Learning and “Chain of Thought” Reasoning

One of the core advancements in the OpenAI o1 model is its use of reinforcement learning to simulate a “chain of thought” process. This technique allows the model to work through complex problems step-by-step, in a manner similar to how a human would. Instead of simply generating an immediate answer, the model pauses to think through different strategies, testing each one before arriving at a solution. This method has been key to its performance gains in tasks requiring logical reasoning.

OpenAI’s o1 model significantly outperforms its predecessor, GPT-4o, on several reasoning-heavy benchmarks. For instance, it ranked in the 89th percentile in competitive programming tasks on Codeforces, a platform where programmers solve algorithmic problems. In math, its score on the American Invitational Mathematics Examination (AIME), an exam designed for the brightest high school math students, would place it among the top 500 students in the U.S. On GPQA (Graduate-Level Google-Proof Q&A), a science benchmark covering physics, biology, and chemistry, it surpassed human experts with PhDs.

The chain of thought method is particularly effective in tasks where breaking down complex problems into smaller, manageable steps is crucial. For example, in math, o1 solved 74% of AIME problems with a single sample per problem, compared to GPT-4o’s 12%. With consensus among 64 samples, this score increased to 83%, and when 1,000 samples were re-ranked with a learned scoring function, the model achieved 93%.
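The consensus step described above can be illustrated with simple majority voting: sample several independent answers to the same problem and keep the most common one. This is only a minimal sketch of the general idea, not OpenAI's evaluation code; the function name `consensus_answer` and the sample answers are hypothetical.

```python
from collections import Counter


def consensus_answer(samples):
    """Return the most common answer and the share of samples that agree with it."""
    if not samples:
        raise ValueError("need at least one sampled answer")
    counts = Counter(samples)
    # most_common(1) yields the single (answer, vote_count) pair with the most votes.
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(samples)


# Hypothetical final answers from five independent samples of one AIME-style problem.
sampled = ["204", "204", "197", "204", "210"]
best, share = consensus_answer(sampled)
print(best, share)
```

A single sample may land on a wrong answer such as "197", but agreement across samples tends to favor the answer the model reaches most reliably, which is why the consensus score can exceed the single-sample score.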

This structured reasoning process has broader implications for fields like coding, where a version of o1 further trained on programming tasks achieved an Elo rating of 1807 on Codeforces, outperforming 93% of competitors. The model’s ability to simulate human-like reasoning enhances its capabilities in diverse domains, from programming to science.

This reinforcement learning-driven reasoning is also tied to improved safety and alignment features. By reasoning through safety guidelines, o1 can more effectively follow safety protocols and avoid generating harmful content, a key improvement over previous models.

Limitations and Challenges

While the o1 model has opened new doors for AI reasoning, it is not without its limitations. One of the primary drawbacks is the time it takes to respond to queries. Because the model evaluates various options before deciding on an answer, it can take longer to generate a response compared to previous models like GPT-4o.

For example, in one instance it took over 7 seconds to answer a question I asked while writing this article.

This slower performance may be a challenge for applications where speed is critical, although it is a trade-off for the model’s enhanced reasoning ability.

Another significant limitation is that o1 cannot yet browse the web or gather real-time data. It is currently restricted to processing the information provided in a query, without the ability to look up additional facts or context from external sources. This makes it less versatile than earlier models like GPT-4o, which can browse the web in ChatGPT.

Moreover, the o1 model is expensive. In API usage, the cost of o1-preview is significantly higher than GPT-4o, with input tokens priced at $15 per million and output tokens at $60 per million. This makes it a costly option for businesses or developers who rely on high volumes of API calls, potentially limiting its adoption outside of high-budget enterprises or research institutions.
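To make these prices concrete, the sketch below estimates a bill from token counts using the o1-preview rates quoted above. The function name `estimate_cost_usd` and the workload figures are hypothetical, and the calculation assumes the output count already includes every token billed as output.

```python
# o1-preview API prices quoted in this article, in US dollars per million tokens.
INPUT_USD_PER_MILLION = 15.0
OUTPUT_USD_PER_MILLION = 60.0


def estimate_cost_usd(input_tokens, output_tokens):
    """Estimate the API cost in US dollars for the given token counts."""
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


# Hypothetical workload: 10,000 requests, each with 2,000 input and 1,000 output tokens.
requests = 10_000
total = estimate_cost_usd(requests * 2_000, requests * 1_000)
print(f"${total:,.2f}")  # prints "$900.00"
```

Because output tokens cost four times as much as input tokens, long responses dominate the bill in this example even though each request sends twice as many input tokens.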

OpenAI has also introduced message limits for users of the o1 model. Subscribers to ChatGPT Plus and Team services are limited to 30 messages per week with o1-preview and 50 messages per week with o1-mini, a smaller, more efficient version aimed primarily at code generation. These constraints could pose challenges for those looking to integrate the model into more demanding workflows.

Future Directions and Potential

Looking ahead, OpenAI plans to continue refining and expanding the o1 model. The company has hinted at future versions that may be capable of reasoning for extended periods — hours, days, or even weeks — to solve particularly challenging tasks. This could dramatically expand the model’s usefulness in fields like scientific research, where long-term reasoning and analysis are often required.

Additionally, OpenAI strongly suggests that future updates to o1 will see the integration of sought-after capabilities that are currently missing, such as web browsing and file uploading. This would make the model more versatile, allowing it to access real-time data and provide more accurate, context-aware answers.

Competitive Landscape

The launch of the o1 model places OpenAI in a strong position within the competitive landscape of AI development. However, the company is not alone in its quest to build more capable AI systems. Google DeepMind, for instance, has been working on similar technologies designed to reason through complex problems. DeepMind recently announced its own breakthroughs in reasoning tasks, such as achieving silver-medal-level performance on problems from the International Mathematical Olympiad.

Other players, including Anthropic and Microsoft, are also focused on developing AI systems that can perform advanced reasoning. Microsoft, which has a close partnership with OpenAI, has already integrated the o1 model into its GitHub Copilot, where it has been used to optimize code and develop more efficient algorithms. Given the intense competition, OpenAI’s ability to rapidly iterate and improve on the o1 model will likely be a key factor in maintaining its lead in the AI space.