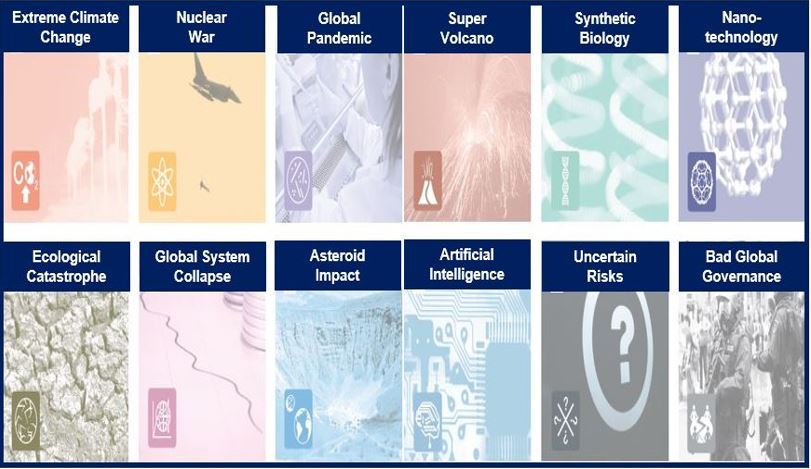

Scientists from the University of Oxford and the Global Challenges Foundation have listed 12 potentially catastrophic global risks that could destroy human life on Earth, ranging from a supervolcano eruption, a global pandemic, nuclear war and artificial intelligence to an asteroid collision.

Several of the possible disasters are beyond our control, such as the impact of a large asteroid or the eruption of a supervolcano. Others, however, including global climate change, artificial intelligence and nanotechnology, are not only within our control but are catastrophes that we ourselves could cause.

The team said the study was the first to create a concise list of what they described as global risks with “infinite” impacts.

These are the 12 major risks that could bring about the end of humankind. (Source: Global Challenges Foundation)

The authors wrote:

“The Report is also the first structured overview of key events related to such risks and has tried to provide initial rough quantifications for the probabilities of these impacts.”

They hope their study will inspire dialogue and action, and will also encourage wider use of methodologies for assessing potential risks.

The authors added:

“The idea that we face a number of global risks threatening the very basis of our civilisation at the beginning of the 21st century is well accepted in the scientific community, and is studied at a number of leading universities. But there is still no coordinated approach to address this group of risks and turn them into opportunities.”

They have grouped the risks into four categories: 1. Current, 2. Exogenic (arising from outside human activity), 3. Emerging, and 4. Global policy.

Current Risks

Extreme Climate Change: As with all risks, estimates are “uncertain”. Warming could be greater than middle estimates suggest, with global average temperatures rising by 4°C or even 6°C above pre-industrial levels.

“Mass deaths and famines, social collapse and mass migration are certainly possible in this scenario,” the Report states.

Nuclear War: The risk of a full-scale nuclear war between Russia and the West has probably decreased. Even so, there is still the potential for accidental or deliberate conflict. Some estimates put the chance of nuclear war within the next 100 years at 10%.

Ecological Catastrophe: A scenario where the ecosystem suffers a dramatic, possibly permanent reduction in carrying capacity for all organisms, which could result in mass extinction.

Species are now going extinct faster than the historical background rate.

Global Pandemic: An infectious disease that spreads through human populations across the world. The authors say there are grounds for suspecting that a global pandemic is more probable than usually assumed.

Global System Collapse: A global societal or economic collapse. Economic collapse would be accompanied by civil unrest, social chaos, and a breakdown of law and order. Societal collapse refers to the disintegration of human societies as well as their support systems.

The authors wrote: “The world economic and political system is made up of many actors with many objectives and many links between them. Such intricate, interconnected systems are subject to unexpected system-wide failures caused by the structure of the network – even if each component of the network is reliable.”

The risk of the whole system collapsing grows as more and more previously independent networks come to depend on one another.

Exogenic Risks

Major Asteroid Collision: About once every 20 million years, a large asteroid (5 km or more across) hits Earth. An object that size would release energy a hundred thousand times greater than that of the most devastating bomb ever detonated.

Even larger asteroids could also collide with our planet. Scientists say the main destruction would probably not come from the initial impact but from the dust clouds thrown into the upper atmosphere, resulting in an “impact winter”.

Supervolcano Eruption: This would involve an eruption with an ejecta volume greater than 1,000 km³, i.e. thousands of times bigger than normal eruptions. The threat to life comes from the aerosols and dust sent into the upper atmosphere, which would block the Sun’s rays and cause a global volcanic winter.

The Toba eruption, about seventy thousand years ago, is thought to have cooled the Earth for more than 200 years.

Emerging Risks

Synthetic Biology: This involves designing and making biological devices and systems for useful purposes. The danger lies in its misuse, for example in deliberate attempts to trigger a pandemic.

Many people warn that regulation is not keeping pace with this rapidly growing field.

One of the most devastating impacts from this risk would come from an engineered pathogen targeting a key component of the ecosystem, or even humans. This could be the result of bioterrorism, military or commercial bio-warfare, or dangerous pathogens accidentally leaking from a lab.

Artificial Intelligence: Also called AI, this is the branch of computer science that seeks to develop software and machines that think and learn for themselves, potentially reaching human-level intelligence.

Eminent physicist Stephen Hawking fears that AI could eventually spell the destruction of humankind.

Uncertain Risks: Also called “Unknown Consequences”, these represent the unknown unknowns in the family of global catastrophic challenges. While some risks may appear very unlikely in isolation, when combined they represent a significant potential risk.

The authors wrote: “One resolution to the Fermi paradox – the apparent absence of alien life in the galaxy – is that intelligent life destroys itself before beginning to expand into the galaxy.”

Global Policy Risk

Also called “Future Bad Global Governance”, this risk has two divisions: failing to solve solvable problems, and actively causing worse outcomes.

Failing to ease absolute poverty is an example of the first, while constructing a worldwide totalitarian state is an example of the second.

The team members said: “Technology, political and social change may enable the construction of new forms of governance, which may be either much better or much worse.”

The authors wrote in the Executive Summary of the Report:

“The idea that we face a number of global challenges threatening the very basis of our civilization at the beginning of the 21st century is well accepted in the scientific community, and is studied at a number of leading universities. But there is still no coordinated approach to address this group of challenges and turn them into opportunities.