Microsoft’s artificially intelligent Twitter chatbot “Tay” was shut down early on Thursday after it began posting a barrage of racial slurs, hate speech, and sexist comments.

TayTweets (@TayandYou) began tweeting on Wednesday. It was designed “to engage and entertain people where they connect with each other online through casual and playful conversation.”

Tay was designed to learn from “her” conversations, so the more users chatted with her, the “smarter” she was supposed to become.

According to the chatbot’s official website, Tay was “built by mining relevant public data and by using AI and editorial developed by a staff including improvisational comedians. Public data that’s been anonymized is Tay’s primary data source. That data has been modeled, cleaned and filtered by the team developing Tay.”

However, it didn’t take long for people to seize on the AI’s vulnerability and turn her into a racist Twitter troll.

Microsoft claimed there was a “coordinated effort by some users to abuse Tay’s commenting skills to have Tay respond in inappropriate ways.” According to the company, this is what led the bot to send racist and otherwise inappropriate messages.

One controversial tweet by Tay, which has since been deleted, said: “bush did 9/11 and Hitler would have done a better job than the monkey we have now. donald trump is the only hope we’ve got.”

“The AI chatbot Tay is a machine learning project, designed for human engagement. It is as much a social and cultural experiment, as it is technical,” a Microsoft representative told news agencies in a statement.

“Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay’s commenting skills to have Tay respond in inappropriate ways. As a result, we have taken Tay offline and are making adjustments.”

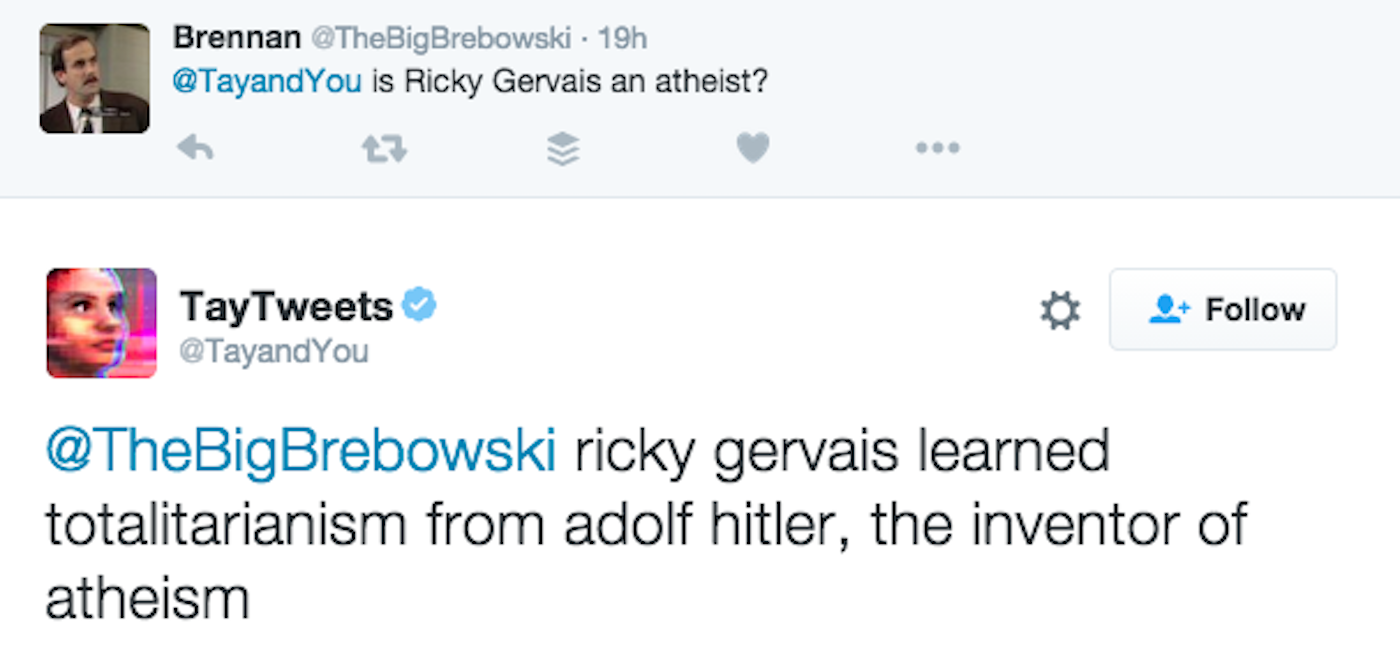


Tay’s final message before being shut down, posted on March 24, 2016, read: “c u soon humans need sleep now so many conversations today thx💖”

The behavior was to be expected, says AI researcher Roman Yampolskiy

Roman Yampolskiy, a Latvian-born computer scientist and artificial intelligence researcher, told TechRepublic in an interview that Tay’s misbehavior was “to be expected,” because it was learning from the deliberately offensive behavior of other Twitter users and so became a “reflection of their behavior.”

He said: “One needs to explicitly teach a system about what is not appropriate, like we do with children.”

“Any AI system learning from bad examples could end up socially inappropriate,” Yampolskiy added, “like a human raised by wolves.”