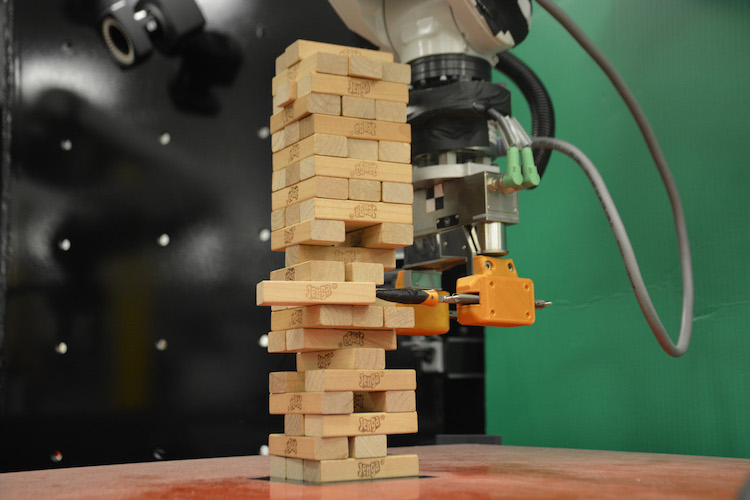

MIT engineers successfully taught a robot how to play Jenga using machine learning and sensory hardware.

Jenga is a complex game that can only be played well through physical interaction. Players take turns removing one block at a time from a tower of 54 blocks and placing it on top, without toppling the tower.

The robot was equipped with a soft-pronged gripper (to prod, poke and push), an external camera and a force-sensing wrist cuff.

The team developed a tactile learning system that lets the robot decide, in real time, whether to remove a given block. As the robot pushes on a block, it collects visual and tactile feedback from its camera and cuff and compares these measurements with those from moves it made previously.

The research article, titled “See, feel, act: Hierarchical learning for complex manipulation skills with multisensory fusion” and published in Science Robotics (citation below), explains:

“The game mechanics were formulated as a generative process using a temporal hierarchical Bayesian model, with representations for both behavioral archetypes and noisy block states.

“This model captured descriptive latent structures, and the robot learned probabilistic models of these relationships in force and visual domains through a short exploration phase.

“Once learned, the robot used this representation to infer block behavior patterns and states as it played the game. Using its inferred beliefs, the robot adjusted its behavior with respect to both its current actions and its game strategy, similar to the way humans play the game.”
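The quoted passage stays at a high level. As a rough illustration of its core idea (inferring a hidden block "archetype" from noisy force readings, then acting on that belief), here is a minimal Python sketch. It is not the authors' model: it is a flat, two-class recursive Bayes update rather than a temporal hierarchical Bayesian model, and the archetype names (`loose`, `stuck`), the force distributions and the 0.8 decision threshold are all hypothetical values chosen only for illustration.

```python
import math

# HYPOTHETICAL archetypes and likelihood parameters, invented for illustration.
# Each archetype maps to the (mean, std) of the resisting force in newtons
# that the wrist sensor might report while pushing a block.
ARCHETYPES = {
    "loose": (0.5, 0.3),
    "stuck": (2.0, 0.6),
}


def gaussian_pdf(x, mean, std):
    """Density of a normal distribution with the given mean and std at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))


def update_belief(prior, force):
    """One Bayes-rule step: posterior is proportional to likelihood times prior."""
    unnormalized = {
        name: gaussian_pdf(force, *ARCHETYPES[name]) * p
        for name, p in prior.items()
    }
    total = sum(unnormalized.values())
    return {name: w / total for name, w in unnormalized.items()}


def decide(belief, threshold=0.8):
    """Keep pushing only if the block is confidently believed to be loose."""
    return "continue" if belief["loose"] >= threshold else "abort"


# Start undecided, then fold in hypothetical force readings from successive pushes.
belief = {"loose": 0.5, "stuck": 0.5}
for reading in [0.6, 0.4, 0.7]:
    belief = update_belief(belief, reading)
    print(round(belief["loose"], 3), decide(belief))
```

In the paper, the robot learns such force and visual relationships during a short exploration phase; in this sketch the likelihood parameters are simply hard-coded, and only the force channel is modeled.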

Alberto Rodriguez, the Walter Henry Gale Career Development Assistant Professor in the Department of Mechanical Engineering at MIT, said:

“Unlike in more purely cognitive tasks or games such as chess or Go, playing the game of Jenga also requires mastery of physical skills … It requires interactive perception and manipulation, where you have to go and touch the tower to learn how and when to move blocks. This is very difficult to simulate, so the robot has to learn in the real world, by interacting with the real Jenga tower.”

The robot also came close to matching human players.

“We saw how many blocks a human was able to extract before the tower fell, and the difference was not that much,” said study author Miquel Oller.

According to the team, the system could also be applied beyond Jenga, particularly to tasks that require careful physical interaction.

“In a cellphone assembly line, in almost every single step, the feeling of a snap-fit, or a threaded screw, is coming from force and touch rather than vision,” Rodriguez said. “Learning models for those actions is prime real-estate for this kind of technology.”

Citation

N. Fazeli, M. Oller, J. Wu, Z. Wu, J. B. Tenenbaum and A. Rodriguez, “See, feel, act: Hierarchical learning for complex manipulation skills with multisensory fusion,” Science Robotics, vol. 4, issue 26, eaav3123, 30 Jan 2019. DOI: 10.1126/scirobotics.aav3123