Robots could improve the quality of life of millions of people worldwide, but they could also become more intelligent than humans and one day make decisions on our behalf that we don’t like. Stephen Hawking and Elon Musk have publicly expressed their concerns about the future of artificial intelligence.

Artificial intelligence (AI) has the potential to become a marvelous blessing for us, or our worst nightmare. Some experts worry that AI will one day see us as an obstacle and decide to get rid of us.

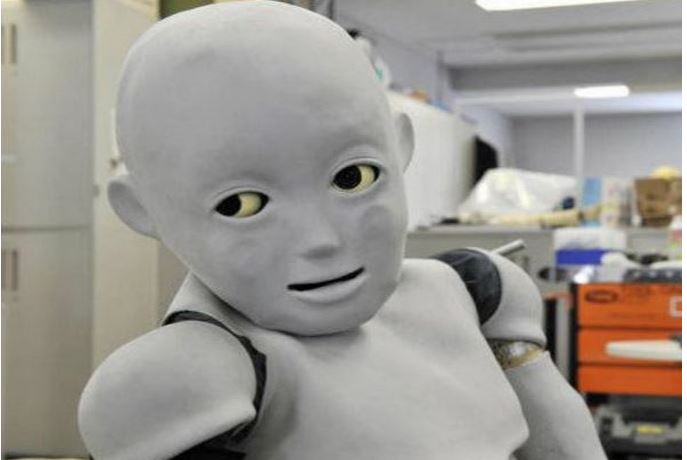

What would a super-smart, logical robot, one considerably more intelligent than we are, think of the way we look after our planet and treat animals and each other?

When robots become much more intelligent than humans, will they continue being nice to us?


The Foundation for Responsible Robotics

The rapid spread of automation into our everyday lives has prompted twenty-five scholars from across the world to form the Foundation for Responsible Robotics (FRR).

Humankind is on the cusp of a robotics revolution, with corporations and governments looking to robotics and AI as a powerful new economic driver.

Even though automation is disrupting our lives at work, at home and in the streets, only lip service is being paid to the growing list of potential societal hazards, including mass unemployment and human rights violations, according to the FRR.

Journalists went to the Science Media Centre to hear the FRR panel highlight several of the issues that will emerge within the next ten years if robotics remains unchecked, and suggest what action can be taken.

FRR co-founder Prof. Noel Sharkey, Emeritus Professor of Artificial Intelligence and Robotics at the University of Sheffield, said the concern is not just about the impact of robots on employment (although that is a worry), or about building AI that can make moral decisions, such as whether your driverless car should crash into a tree – killing you – to avoid a bus full of children.

Instead, Prof. Sharkey said, it is about making sure that the programming and design of robots are themselves ethical. He added that we eventually need to develop “a socially responsible attitude to robotics.”

The FRR says its mission is:

“To promote the responsible design, development, implementation, and policy of robots embedded in our society. Our goal is to influence the future development and application of robotics such that it embeds the standards, methods, principles, capabilities, and policy points, as they relate to the responsible design and deployment of robotic systems.”

“We see both the definition of responsible robotics and the means for achieving it as on-going tasks that will evolve alongside the technology of robotics. Of great significance is that the FRR aims to be proactive and assistive to the robotics industry in a way that allows for the ethical, legal, and societal issues to be incorporated into design, development, and policy.”

FRR Executive Board

The members of the Executive Board of the Foundation for Responsible Robotics are:

Gianmarco Veruggio (Italy): A roboticist. Director of Research at the Italian National Research Council (CNR) and head of the Genoa Operational Unit of CNR-IEIIT. In 2009 he was awarded the title of Commander of the Order of Merit of the Italian Republic for his achievements in the field of science and society.

Sherry Turkle (USA): Professor of the Social Studies of Science and Technology in the Program in Science, Technology, and Society at MIT.

Daniel Suarez (USA): New York Times bestselling author of Daemon, Freedom, Kill Decision, and Influx. Before becoming a writer, he was a systems consultant to Fortune 1000 companies and designed and developed mission-critical software for the finance, defense and entertainment industries.

Earlier this year, computer scientist Adam Mason and colleagues led a demonstration in Austin, warning about the dangers of artificial intelligence. (Image: facebook.com/stoptherobotsnow)


Deborah Johnson (USA): Professor of Applied Ethics in the Science, Technology, and Society Program in the School of Engineering and Applied Sciences at the University of Virginia.

Jeroen van den Hoven (The Netherlands): Professor of Ethics and Technology at Delft University of Technology. Editor-in-Chief of Ethics and Information Technology.

Patrick Lin (USA): Director of the Ethics + Emerging Sciences Group, California Polytechnic State University, San Luis Obispo. He is also an associate professor of philosophy.

Amanda Sharkey (UK): Senior Lecturer (Associate Professor) at the University of Sheffield. She is currently researching human-robot interaction, the ethics of robot care, and swarm robotics.

John Sullins: Professor at Sonoma State University. He specializes in the philosophy of science and technology and the philosophical issues of artificial intelligence/robotics.

Peter Asaro (USA): Philosopher of science, technology and media at The New School in New York. He examines AI and robotics as a form of digital media.

Lucy Suchman (UK): Professor of Anthropology of Science and Technology in the Department of Sociology at Lancaster University. Author of Plans and Situated Actions: The Problem of Human-machine Communication (1987, Cambridge University Press).

Wendell Wallach (USA): Consultant, ethicist, and scholar at Yale University’s Interdisciplinary Center for Bioethics.

Ryan Calo (USA): Assistant professor at both the University of Washington School of Law and the university’s Information School.

Missy Cummings (USA): Associate Professor at Duke University and director of Duke’s Humans and Autonomy Laboratory. One of the first female fighter pilots in the US Navy.

Kate Darling: A researcher at MIT and a world-leading expert in robot ethics. She investigates social robotics and human-robot interaction.

Vanessa Evers (The Netherlands): Professor of Human Media Interaction and Science Director of the DesignLab at the University of Twente. Carries out research on human interaction and autonomous agents.

Shannon Vallor (USA): Researcher and Associate Professor of Philosophy at Santa Clara University in Silicon Valley. She is also President of the international Society for Philosophy and Technology (SPT).

Robin Murphy (USA): Raytheon Professor of Computer Science and Engineering at Texas A&M. She is also director of the Center for Robot-Assisted Search and Rescue and the Center for Emergency Informatics.

Fiorella Operto (Italy): Co-founder of the Scuola di Robotica (School of Robotics), of which she is President. She contributed to the birth of roboethics in 2004.

Laure Belot (France): A journalist at Le Monde and also an author. She writes mainly about emerging phenomena in society.

Susanne Beck (Germany): A legal scholar at Leibniz University, Hanover. Her research interests include modern technologies, criminal law, medical law, comparative criminal law, white collar crime, and legal philosophy.

Johanna Seibt (Denmark): A professor in the philosophy department at Aarhus University. She introduced the concept of robophilosophy – the philosophy of, for and by social robotics.

Jutta Weber (Germany): Science and technology studies scholar, media theorist, and philosopher, as well as professor of media sociology at the University of Paderborn.

Kerstin Dautenhahn (UK): Professor of Artificial Intelligence in the School of Computer Science at University of Hertfordshire. Her main areas of research are artificial life, socially intelligent agents, social robotics and human-robot interaction.

The founders of the Foundation for Responsible Robotics are:

Dr. Aimee van Wynsberghe (The Netherlands): Assistant Professor in Ethics of Technology at the Department of Philosophy, University of Twente.

Noel Sharkey (UK): Emeritus Professor of Robotics and Artificial Intelligence, University of Sheffield. He is also Chair of the NGO International Committee for Robot Arms Control.

Last week, the University of Cambridge said it was launching a special centre to explore the challenges and opportunities that AI will bring to humankind, thanks to a £10 million donation from the Leverhulme Trust.


Robots have what computer scientists call ‘AI.’ AI, or artificial intelligence, refers to software designed to make computers, robots, and other devices ‘smart.’ In other words, programmers design them to think and behave, to some degree, like humans.