Researchers have developed a new model that identifies blind spots in AI systems. Sometimes, what autonomous systems learn from training examples does not match what actually happens in the real world. In other words, AI systems sometimes miss things and make mistakes. The researchers have found a way of detecting where those mistakes are likely to occur.

The researchers, from MIT and Microsoft, say the model could be used to improve the safety of AI systems, helping autonomous robots and driverless vehicles, for example, to function better.

Driverless vehicle AI systems have blind spots

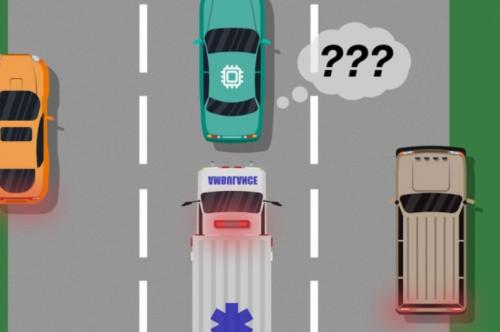

The AI systems in autonomous vehicles are trained by engineers in virtual simulations, which aim to prepare them for almost every event that might occur on the road.

However, these systems have blind spots: they make unexpected errors in the real world because some events that should change a car’s behavior do not.

Let’s imagine, for example, that a driverless car has been trained in a limited simulator. It lacks the sensors needed to tell apart distinctly different scenarios, such as a large white car and an ambulance with flashing lights on the road.

If the car is cruising down the road and an ambulance switches on its sirens, the car might not know that it has to slow down and pull over, because all it sees is a big white car.
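To make the idea concrete, here is a toy Python sketch of how such a blind spot can arise when the simulator’s view of the world is too coarse: two very different real-world scenes collapse into the same simulated state, so the trained policy treats them identically. All names here (`RealWorldScene`, `simulator_features`, `LEARNED_POLICY`) are hypothetical and illustrate the concept only; they are not taken from the researchers’ work.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RealWorldScene:
    # What is actually on the road.
    vehicle_color: str
    vehicle_size: str
    flashing_lights: bool
    siren_on: bool

def simulator_features(scene: RealWorldScene) -> tuple[str, str]:
    # Hypothetical limited simulator: it only models color and size,
    # so flashing lights and sirens are invisible to the trained policy.
    return (scene.vehicle_color, scene.vehicle_size)

# Hypothetical policy learned in simulation, as a lookup from features to action.
LEARNED_POLICY = {
    ("white", "large"): "keep_driving",
}

def act(scene: RealWorldScene) -> str:
    # Features never seen in training fall back to a cautious action (an assumption).
    return LEARNED_POLICY.get(simulator_features(scene), "slow_down")

white_van = RealWorldScene("white", "large", flashing_lights=False, siren_on=False)
ambulance = RealWorldScene("white", "large", flashing_lights=True, siren_on=True)

print(act(white_van))  # keep_driving
print(act(ambulance))  # keep_driving  <- blind spot: it should pull over
```

Because both scenes produce the same feature tuple, no amount of extra simulation training with this representation can teach the car to treat them differently; the missing information has to come from somewhere else, such as a human observer.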

Detecting training blind spots with a human monitor

In two papers, the researchers described a model that uncovers these training ‘blind spots’ using human input.

As with traditional approaches, the AI system first underwent simulation training. A human then closely monitored the system’s actions in the real world, providing feedback when the system made a mistake, missed something, or was about to make a mistake.

The researchers then combined the human feedback data with the training data and used machine-learning techniques to produce a model that pinpoints potential ‘blind spots’: situations where the AI system would most likely need more information about how to act correctly.
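The article does not spell out the exact machine-learning technique, so the Python sketch below swaps in a deliberately simple stand-in rather than reproducing the papers’ method. It groups human feedback by the simulator’s state features (the representation the system was trained on) and estimates, with a smoothed frequency, how often the trained behavior in each state was flagged as a mistake. States whose estimated error rate passes a threshold are reported as likely blind spots. The function names, the Beta-prior pseudo-counts and the 0.5 threshold are all assumptions made for illustration.

```python
from collections import defaultdict

def blind_spot_scores(feedback, prior_ok=1.0, prior_bad=1.0):
    """Estimate, per simulator state, the probability that the policy's
    action there is unacceptable in the real world.

    feedback: iterable of (state, flagged) pairs, where `state` is the
        simulator's feature tuple and `flagged` is True if the human
        marked the action as a mistake (or an imminent one).
    prior_ok, prior_bad: assumed Beta-prior pseudo-counts that keep
        rarely observed states from receiving extreme scores.
    """
    bad = defaultdict(int)
    total = defaultdict(int)
    for state, flagged in feedback:
        total[state] += 1
        if flagged:
            bad[state] += 1
    return {
        s: (bad[s] + prior_bad) / (total[s] + prior_ok + prior_bad)
        for s in total
    }

def flag_blind_spots(scores, threshold=0.5):
    """Return states whose estimated error probability exceeds an assumed threshold."""
    return sorted(s for s, p in scores.items() if p > threshold)

# Hypothetical feedback gathered while a human watched the deployed system.
feedback = [
    (("white", "large"), True),   # ambulance case: human flags a mistake
    (("white", "large"), True),
    (("white", "large"), False),  # ordinary white car: action was fine
    (("red", "small"), False),
    (("red", "small"), False),
]

scores = blind_spot_scores(feedback)
print(scores)                    # {('white', 'large'): 0.6, ('red', 'small'): 0.25}
print(flag_blind_spots(scores))  # [('white', 'large')]
```

The smoothing matters because a single flagged observation should not immediately brand a state as a blind spot; with more feedback, the estimate moves toward the observed error rate.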

The researchers used video games to validate their method. During the games, they simulated a human correcting the learned path of an on-screen character.
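The article does not say how the simulated human was built. One plausible approach, sketched below in Python purely as an assumption, is an oracle that knows about hazards present only in the “real” game and flags each step of the character’s learned path that hits one, plus the step just before it, when the character is about to make a mistake. The one-dimensional track, the hazard set and both function names are hypothetical.

```python
def learned_path(start, length):
    """Hypothetical path learned in a simulator with no hazards:
    the character just moves one cell to the right per step."""
    return [start + i for i in range(length)]

def simulated_human_feedback(path, real_hazards):
    """Simulated human monitor (an assumption, not the researchers' code).

    Flags every step that lands on a hazard present only in the 'real'
    game, and the step before it, when the character is about to err.
    Returns a list of (position, flagged) pairs.
    """
    flagged = set()
    for i, pos in enumerate(path):
        if pos in real_hazards:
            flagged.add(i)
            if i > 0:
                flagged.add(i - 1)
    return [(pos, i in flagged) for i, pos in enumerate(path)]

path = learned_path(0, 6)
print(simulated_human_feedback(path, real_hazards={3}))
# [(0, False), (1, False), (2, True), (3, True), (4, False), (5, False)]
```

Simulating the human this way lets feedback be generated cheaply and repeatably, which is what makes video games a convenient test bed before involving real people.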

Their next step is to incorporate the model, together with human feedback, into traditional training and testing approaches for AI systems in robots and driverless vehicles.

Helping systems better know what they don’t know

First author Ramya Ramakrishnan, a graduate student in the Computer Science and Artificial Intelligence Laboratory at MIT, said:

“The model helps autonomous systems better know what they don’t know. Many times, when these systems are deployed, their trained simulations don’t match the real-world setting [and] they could make mistakes, such as getting into accidents.”

“The idea is to use humans to bridge that gap between simulation and the real world, in a safe way, so we can reduce some of those errors.”

AI stands for artificial intelligence. It refers to software technology that enables devices such as computers and robots to think and behave in ways that resemble how we think and behave. AI systems can also learn from data and experience along the way, much as we do. We call this machine learning.