Humans risk extinction, probably from something we have created ourselves, unless we first colonise other planets, spreading ourselves out in space and reducing our exposure to a mega-catastrophe that could wipe us all out, says Professor Stephen Hawking.

Several human activities and creations could destroy us, including nuclear war or a nuclear accident, climate change and a genetically engineered virus.

Who knows what we will invent over the next hundred or thousand years? We may develop a super-smart artificial intelligence that keeps upgrading itself rapidly. It could eventually overtake us, see us as either a threat to the planet or an inconvenience, and decide to destroy us.

Prof. Hawking thinks he is closer to being awarded the Nobel Prize for Physics.


As we progress scientifically and technologically, Professor Hawking says, so does our risk of self-destruction.

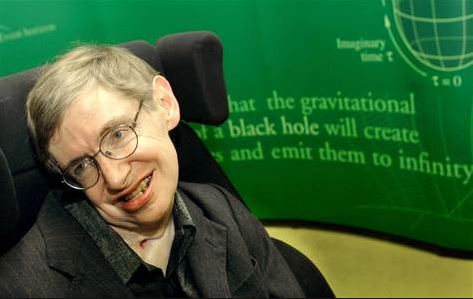

The British cosmologist, theoretical physicist and author made these remarks during this year's BBC Reith Lectures, an annual series of talks given by leading figures of the day.

You can listen to his talk on the BBC World Service and BBC Radio 4 on 26th January and 2nd February, 2016.

Black holes may answer questions about the Universe

During his talk, he also explores recent work on black holes, the enigmatic regions where matter is believed to collapse under the immense pull of gravity to a point where the normal laws of physics, as we know them, cease to apply.

He explains that black holes are ‘stranger than anything dreamed up by science fiction writers’.

Prof. Hawking has developed a new theory about black holes that he thinks could provide a way of finding out how the Universe began. In his theory, black holes have several 'hairs' that carry information about their past, potentially solving the long-standing question of what happens to matter when it falls into a black hole.

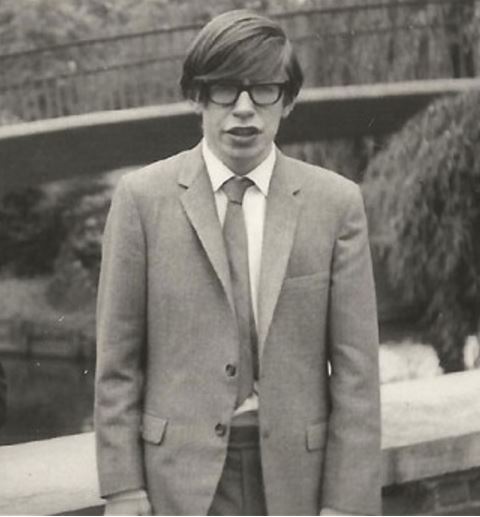

Hawking was 21 when he was diagnosed with motor neurone disease, more commonly known as ALS in the USA – a progressive disease involving degeneration of the motor neurons and wasting of the muscles.


New technologies raise the risk to humans

His gloomy predictions came during a Q&A session after his talk, when a member of the audience asked whether he thought the world would end naturally or whether humans might destroy it first.

Prof. Hawking replied:

“We face a number of threats to our survival from nuclear war, catastrophic global warming, and genetically engineered viruses. The number is likely to increase in the future, with the development of new technologies, and new ways things can go wrong.”

“Although the chance of a disaster to planet Earth in a given year may be quite low, it adds up over time, and becomes a near certainty in the next thousand or 10,000 years. By that time, we should have spread out into space, and to other stars, so a disaster on Earth would not mean the end of the human race.”

“However, we will not establish self-sustaining colonies in space for at least the next hundred years, so we have to be very careful in this period.”

Who would have thought that one of the world's leading scientists would be the one to warn us about the dangers of science?

To live a successful life, a sense of humour is key, Hawking believes.


Prof. Hawking sees the pros and cons of artificial intelligence

Over the last couple of years, Prof. Hawking, Microsoft founder Bill Gates, Elon Musk (head of electric car maker Tesla and rocket company SpaceX) and several computer experts have expressed concern about what might eventually happen to us as artificial intelligence becomes more sophisticated.

Over the past century, automation has helped boost efficiency and improve our standard of living. However, given the rate at which artificial intelligence is advancing, there are serious concerns today that automation will eventually make the human labour force superfluous, leaving us all jobless.

In 2014, during an interview with the BBC, Prof. Hawking warned that artificial intelligence could spell the end of humankind.

Prof. Hawking said that the basic forms of artificial intelligence (AI) developed so far have proved very useful and have helped many people, including himself. His own speech synthesiser system relies on a form of AI.

However, he wonders what the consequences might be if we one day create something ultra-smart, far cleverer than we are.

Prof. Hawking said:

“It [AI] would take off on its own, and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.”

We will find a way

He stressed that he believes humans will always find a way to cope. “We are not going to stop making progress, or reverse it, so we have to recognise the dangers and control them. I’m an optimist, and I believe we can,” he said.

Asked by a member of the audience what advice he would give to young scientists around the world today, he replied that every researcher needs to retain a sense of wonder about our 'vast and complex' Universe.

He then reflected on what a life in science has meant to him:

“From my own perspective, it has been a glorious time to be alive and doing research in theoretical physics. There is nothing like the Eureka moment of discovering something that no one knew before.”

In future, scientists, engineers and researchers will need to recognise how rapidly the breakthroughs we are making in science and technology are changing the world, and to make sure the general public understands this too.

Prof. Hawking added:

“It’s important to ensure that these changes are heading in the right directions. In a democratic society, this means that everyone needs to have a basic understanding of science to make informed decisions about the future.”

“So communicate plainly what you are trying to do in science, and who knows, you might even end up understanding it yourself.”

We must live life with a sense of humour

Prof. Hawking was diagnosed with motor neurone disease at the age of 21. A member of the audience asked him what inspires him to keep going.

His reply was:

“My work and a sense of humour. When I turned 21, my expectations were reduced to zero. You probably know this already because there’s been a movie about it. It was important that I came to appreciate what I did have.”

“Although I was unfortunate to get motor neurone disease, I’ve been very fortunate in almost everything else. I’ve been lucky to work in theoretical physics at a fascinating time, and it is one of the few areas in which my disability is not a serious handicap.”

“It’s also important not to become angry, no matter how difficult life is, because you can lose all hope if you can’t laugh at yourself and life in general.”

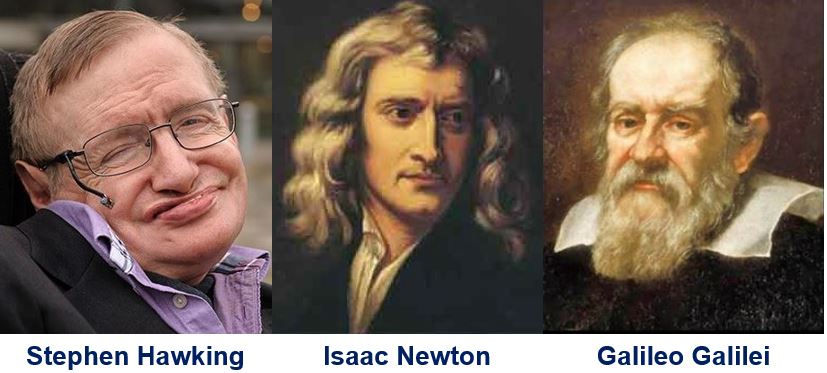

If he had a time machine, Prof. Hawking says his first choice to visit would be Galileo, and not Newton.


Hawking feels closer to Galileo than Newton

For thirty years, Prof. Hawking was Lucasian Professor of Mathematics at the University of Cambridge, a position once held by the English physicist and mathematician Isaac Newton (1642-1726). It is one of the most prestigious academic positions in Britain.

However, he feels a closer bond with the Italian astronomer, physicist, engineer, mathematician and philosopher Galileo Galilei (1564-1642), who played a key role in the scientific revolution during the Renaissance.

If he could go back in time, Prof. Hawking says he would like to visit Galileo.


On the bright side, he also said that by the time a catastrophe strikes Earth, we will probably already be colonising other planets.